It’s difficult to imagine a world where you couldn’t order groceries, check your bank account, read the news, listen to music or watch your favorite show from the comfort of your smartphone. Perhaps even harder to fathom is that some of these services only went mainstream in the last 10 to 20 years. The world we live in today is a stark contrast to the world of 20 years ago. Banks no longer deliver value by holding gold bullion in vaults, but by providing fast, secure, frictionless online trading. Retailers no longer retain customers by having a store in every town, but by offering superior customer service, extensive choice and a slick, tailored user experience. Video rental shops are a thing of the past, replaced by addictive, convenient media streaming services such as Netflix. The list goes on.

Delight, Engage, Anticipate, Respond

At the beating heart of this digital convenience is software. A successful software organization can delight customers with a superior user experience, engage its market to determine demand and anticipate change, such as new regulation. It also has the means to respond quickly to any risk, whether security threats, economic flux or competitive pressure. In short, companies are rediscovering their competitive advantage through software and data.

How do you build good software? Contrary to popular belief, it takes more than spinning up some microservices. Good software relies on several core pillars being present: abiding by lean management principles; harmonizing Dev and Ops to foster a DevOps culture; employing continuous delivery practices (such as fast iterations, small teams and version control); building software using modern architectures such as microservices; and, last but not least, using cloud operating models. Year after year, the highly regarded State of DevOps Report finds evidence that delivering software quickly, reliably and safely, based on the pillars above, contributes to organizational performance (profitability, productivity and customer satisfaction).

As the title suggests, this blog series focuses on the cloud pillar. In the context of software innovation, cloud provides the Enterprise not only with agility, elasticity and on-demand self-service but also, if done right, with the potential for cost optimization. Cost optimization is paramount to unlocking continued investment in innovation, and when it comes to cloud design, there should be no doubt: architecture matters.

Application First

How should an organization define its cloud strategy? Public cloud? Private cloud? Multi-cloud? I’d argue instead for an application-first strategy. Applications are an organization’s lifeblood, and an application-first strategy is a more practical approach that directs each application to its optimal destination. This then naturally shapes any cloud choice. An application-first strategy classifies applications and maps out their lifecycle, allowing organizations to place applications on optimal landing zones across private, public and edge resources, based on each application’s business value, specific characteristics and any relevant organizational factors.

To decide whether to invest in, tolerate or decommission an application, companies can use application classification methodologies to categorize their applications. This categorization determines where, if anywhere, change needs to happen. Change can happen at three layers:

◉ Application Layer

◉ Platform Layer

◉ Infrastructure Layer

The most substantial lift, but the one with the greatest potential business value, is a change to the application code itself, ranging from a complete rewrite, to material alteration of the code, to optimization of existing code. For applications that don’t merit source code changes, the value may lie in evolving the platform layer. Re-platforming to a new runtime (from virtualized to containerized, for example) can unlock efficiencies not possible on the incumbent platform. Where changes to the application code or platform layer are to be avoided at all costs, modernizing the infrastructure layer may make the most sense, reducing risk, complexity and TCO. Lastly, decommissioning applications at the end of their natural lifecycle is a critical piece of this jigsaw: if nothing is ever decommissioned, no savings are made. This combination of re-platforming applications, modernizing infrastructure and decommissioning applications is crucial to freeing up investment for software innovation.
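To make this decision flow concrete, here is a minimal Python sketch. It assumes a hypothetical `App` record that already carries the outcome of a classification exercise (invest, tolerate or decommission) plus two yes/no judgments: whether the application merits code change, and whether its platform can safely be changed. The names and rules are illustrative assumptions, not a published classification methodology; they simply encode the order described above: application layer where the value justifies it, then platform, then infrastructure, with decommissioning removing the application altogether.

```python
from dataclasses import dataclass
from enum import Enum


class Disposition(Enum):
    """Outcome of classifying an application."""
    INVEST = "invest"
    TOLERATE = "tolerate"
    DECOMMISSION = "decommission"


class ChangeLayer(Enum):
    """Where change, if any, should happen for an application."""
    APPLICATION = "application"        # rewrite, material change or optimization of code
    PLATFORM = "platform"              # re-platform, e.g. virtualized -> containerized
    INFRASTRUCTURE = "infrastructure"  # modernize only the underlying infrastructure
    NONE = "none"                      # decommissioned: nothing left to change


@dataclass
class App:
    # Hypothetical attributes; a real methodology would use its own criteria.
    name: str
    disposition: Disposition
    merits_code_change: bool  # does business value justify touching the code?
    platform_change_ok: bool  # can the runtime change without unacceptable risk?


def change_layer(app: App) -> ChangeLayer:
    """Map a classified application to the layer where change should happen."""
    if app.disposition is Disposition.DECOMMISSION:
        return ChangeLayer.NONE
    if app.disposition is Disposition.INVEST and app.merits_code_change:
        return ChangeLayer.APPLICATION
    if app.platform_change_ok:
        return ChangeLayer.PLATFORM
    # Code and platform changes are to be avoided: modernize infrastructure instead.
    return ChangeLayer.INFRASTRUCTURE


if __name__ == "__main__":
    # Hypothetical application estate, for illustration only.
    estate = [
        App("online-banking", Disposition.INVEST, True, True),
        App("reporting", Disposition.TOLERATE, False, True),
        App("core-ledger", Disposition.TOLERATE, False, False),
        App("legacy-fax-gateway", Disposition.DECOMMISSION, False, False),
    ]
    for app in estate:
        print(f"{app.name}: {change_layer(app).value}")
```

Run against this sample estate, each application lands on a different layer, from a code change for the invested-in banking application down to decommissioning the fax gateway. In practice the rules would reflect each organization’s own classification criteria.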

Landing Zones

Where an application ultimately lands depends on its own unique characteristics and any relevant organizational factors. Characteristics include the application’s performance profile, security needs, compliance requirements and any specific dependencies on other services. These diverse requirements across an Enterprise’s application estate give rise to the concept of multi-cloud Landing Zones across Private, Public and Edge locations.
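A minimal Python sketch of that placement decision follows. It assumes each landing zone and each application can be described by a few hypothetical attributes: a typical latency for the performance profile, a set of security and compliance controls, and the services available locally for dependencies. Organizational factors such as cost or existing contracts are left out, and all names and sample values are invented for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(frozen=True)
class LandingZone:
    # Hypothetical description of a landing zone; attributes are illustrative only.
    name: str
    location: str  # "private", "public" or "edge"
    latency_ms: float  # typical latency to the application's users
    controls: frozenset[str] = frozenset()  # security/compliance controls offered
    services: frozenset[str] = frozenset()  # services reachable locally


@dataclass
class AppProfile:
    # Hypothetical application characteristics.
    name: str
    max_latency_ms: float  # performance profile
    required_controls: set[str] = field(default_factory=set)  # security and compliance needs
    dependencies: set[str] = field(default_factory=set)  # services it must run close to


def eligible_zones(app: AppProfile, zones: list[LandingZone]) -> list[LandingZone]:
    """Return the zones that meet every requirement, lowest latency first."""
    matches = [
        zone
        for zone in zones
        if zone.latency_ms <= app.max_latency_ms
        and app.required_controls <= zone.controls  # subset check
        and app.dependencies <= zone.services
    ]
    return sorted(matches, key=lambda zone: zone.latency_ms)


if __name__ == "__main__":
    zones = [
        LandingZone("on-prem-dc", "private", 20, frozenset({"pci-dss"}), frozenset({"mainframe-api"})),
        LandingZone("public-region", "public", 35, frozenset({"pci-dss", "iso-27001"}), frozenset({"object-storage"})),
        LandingZone("store-edge", "edge", 5),
    ]
    payments = AppProfile("payments", 30, {"pci-dss"}, {"mainframe-api"})
    point_of_sale = AppProfile("point-of-sale", 10)
    for app in (payments, point_of_sale):
        print(app.name, "->", [zone.name for zone in eligible_zones(app, zones)])
```

Here the payments application is pulled to the private data center by its compliance needs and mainframe dependency, while the latency-sensitive point-of-sale application only fits at the edge. Sorting by latency is just one possible tie-breaker; a real placement would also weigh the organizational factors mentioned above.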

Cloud Chaos

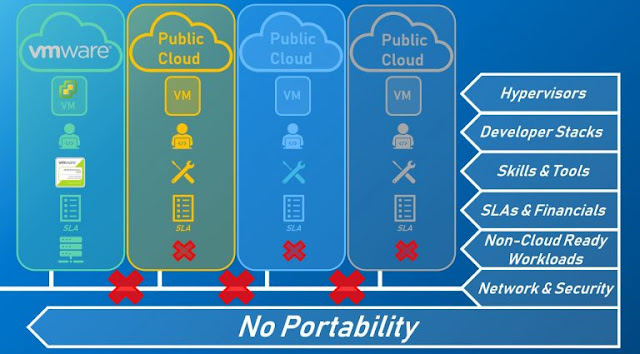

Because of this need for landing zones, the industry has begun to standardize on a multi-cloud approach, and rightly so. Every application is different, and a multi-cloud model permits access to best-of-breed services across all clouds. Unfortunately, the multi-cloud approach also brings a myriad of challenges. For example, public clouds deliver native services in proprietary formats, often forcing costly and sometimes unnecessary re-platforming. The need to re-skill the workforce compounds these challenges, as do the complex financials of a multi-cloud model, which stem from inconsistent SLA constructs across providers. Lack of workload portability is another critical concern, again because of those proprietary formats. It is further exacerbated by proprietary security and networking stacks, often resulting in lock-in and increased costs.

Cloud Without Chaos

Dell Technologies Cloud is not a single public cloud, but rather a hybrid cloud framework that delivers consistent infrastructure and consistent operations, regardless of location. It is unique in the industry in its ability to run both VMware VMs and next-generation container-based applications consistently, whether the location is private, public or edge. This consistent experience is central to enabling workload mobility, which in turn is key to flexibility, agility and avoiding lock-in.

Through combined Dell Technologies and VMware innovation, core services such as the hypervisor, developer stacks, data protection, networking and security are consistent across private, public and edge locations. Dell Technologies Cloud therefore reduces the need for the complicated and costly re-platforming that comes with migrating to a new cloud provider’s proprietary native services. Organizations that do wish to use native public cloud services can still do so, while also benefiting from proximity to VMware-related public cloud services.

Consistent operations also reduce the strain on precious talent by allowing companies to capitalize on existing skillsets. Organizations can manage applications consistently, regardless of location, and avoid the cost of re-skilling staff each time they choose a new cloud provider.

Looking at this through the lens of the developer, and with modern applications in mind, container standards thankfully span the industry, which removes the need for wholesale container format changes between clouds. Even so, each cloud provider takes its own opinionated approach to the ecosystem around containers (container networking, container security, logging, service mesh, etc.) in its native offerings, such as CaaS and PaaS. These differences can force tweaks and edits each time an application moves to another cloud, burning developer cycles. Instead, an organization can maximize developer productivity by employing turnkey, cloud-agnostic developer solutions that are operationalized and ready for the Enterprise. Developers can then write an application once and run it anywhere, without tweaks or edits to suit a new cloud provider’s stack.

At the other end of the application scale, most Enterprise organizations own a significant portion of non-cloud-ready, non-virtualized workloads, such as bare-metal applications and unstructured data. Through Dell Technologies’ extensive portfolio, these workloads are fully supported on a range of platforms rather than treated as an afterthought.

Likewise, a critical element of any organization’s cloud investment is its cloud data protection strategy. Dell Technologies Data Protection Solutions covers all hybrid cloud protection use cases: in-cloud backup, backup between clouds, long-term retention to the cloud, disaster recovery (DR) to the cloud and cloud-native protection.

Increased Agility, Improved Economics & Reduced Risk

Ultimately, Dell Technologies Cloud delivers increased business agility through self-service, automation and the unique proposition of true portability across private, public and edge locations. This agile, flexible and resilient foundation can enable Enterprise organizations to accelerate software innovation and, in turn, shorten time-to-market.

In addition to the business agility and workload mobility gained from this consistent hybrid cloud model, companies can improve cloud economics and take advantage of multiple consumption options, irrespective of cloud location. Modernization also reduces business risk by eliminating technical debt, minimizing operational complexity and avoiding unpredictable, inconsistent future costs.
