If you’re in the data business, and even if you’re not, you know that recent high-profile cyberattacks have resulted in the public leak of huge amounts of stolen data, including employee and customer personal information, corporate intellectual property, and even unreleased films and scripts.

In 2015, in response to the increased frequency and sophistication of cyberattacks on the financial sector, a not-for-profit, industry-led initiative was launched. Its aim was to protect financial institutions, their customers and general public confidence in the U.S. financial system against a catastrophic event.

With the collaboration of hundreds of subject matter experts, and the backing of major players across the industry, the Sheltered Harbor initiative implemented a standard to protect customer data and provide access to funds and balances in the event of a critical system failure. Its mission complements Dell Technologies’ commitment to helping organizations and individuals build and protect their digital future, and it’s why we were the first solution provider to join.

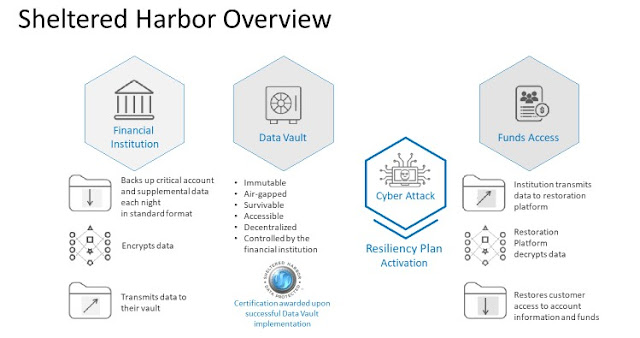

Sheltered Harbor developed an industry standard for data protection, portability, recovery and the continuity of critical services. Core to the standard, Sheltered Harbor participants back up critical customer account data each night to an air-gapped, decentralized vault. The data vault is encrypted and completely separated from the institution’s infrastructure, including all backups. Participants always maintain control of their own data.

The standard also includes resiliency planning and certification. Implementing Sheltered Harbor is not intended to replace traditional disaster recovery or business continuity planning; rather, it is meant to run in parallel with these efforts to deliver a higher level of confidence in the fidelity of the U.S. financial system.

On January 16, 2020, the OCC and FDIC issued a joint statement on heightened cybersecurity risk, outlining steps financial institutions should take to prepare for and respond to a heightened threat landscape. It also advised them to consider whether their backup and restoration practices meet industry standards and frameworks, such as Sheltered Harbor, to safeguard critical data.

Source: Sheltered Harbor

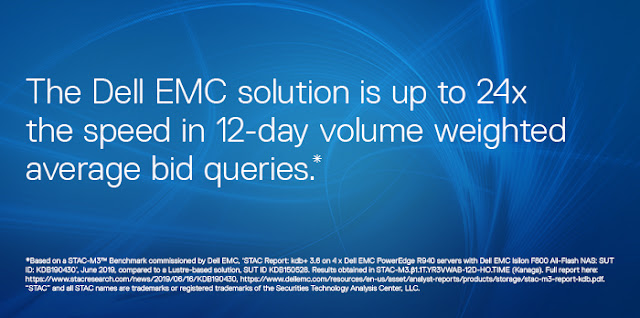

Dell Technologies is not only the first solution provider to join Sheltered Harbor, but also a committed partner to financial players across the globe. How do we help? Ask the eight out of 10 global banks that use Dell EMC Data Protection, or the 14 of the top 15 U.S. banks that do the same. They know that the interconnected nature of the U.S. and global financial markets means that any disruption to banking services in one country or region can quickly cascade to others, causing widespread financial panic.

As increasingly sophisticated ransomware and other cyberattacks continue to threaten customers in every industry, financial services are a huge target. The industry’s dependence on key systems to maintain normal operations clearly illustrates the importance of implementing proven and modern strategies and solutions to protect the most critical data.

The Dell EMC PowerProtect Cyber Recovery Solution for Sheltered Harbor helps participants achieve compliance with data vaulting standards and certification, plan for operational resilience and recovery, and protect critical data into the next Data Decade. Consider it your shelter from the potential storm of cyberattack.