Public clouds entered the mainstream because businesses wanted a way to get up and running quickly to develop new applications while avoiding the costs and management overhead of large CapEx IT investments. The industry has since learned that not all applications are ideal candidates for the public cloud. Some users found out the hard way that performance, availability, scale, security and cost predictability could be difficult to achieve. As a result, the cloud operating model is quickly becoming pervasive on-premises.

As cloud deployment options have evolved, leading-edge organizations have aspired to make strategic use of multiple clouds, both public and private. With data increasingly dispersed, this calls for a distributed deployment model. The goal is to achieve multicloud by design rather than multicloud by default, in which disparate silos are stood up across clouds.

However, the distributed deployment model is not easy to deliver, since standing up datacenters is complex and requires significant financial commitments. How can organizations embrace a modernized datacenter model with private cloud? One way is to leverage as-a-service IT resources in colocation (colo) facilities wherever they need to deploy applications. In a recent IDC survey of U.S.-based IT decision makers, 51% indicated they were using hosting and colocation services for datacenter operations. In addition, 95% of respondents indicated they plan to use such services by 2024.

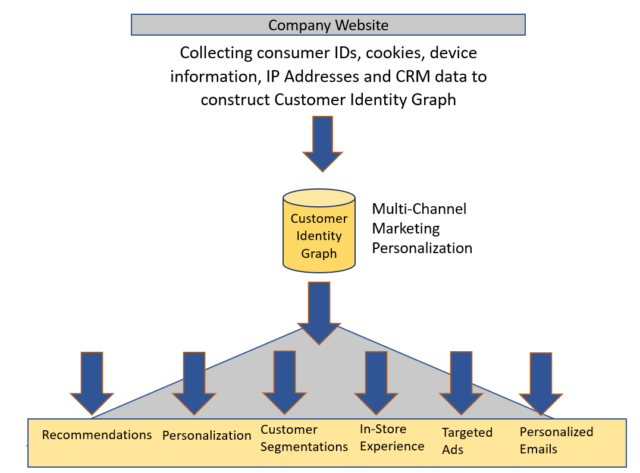

Enter Dell APEX and Equinix. Through a partnership between Dell and Equinix, customers can subscribe to interconnected Dell APEX Data Storage Services in a secure, Dell-managed colocation facility. This solution enables quick and easy deployment of scalable, elastic storage resources in various locations across the globe with high-speed access to public clouds, IT services and business collaborators, all delivered as-a-service.

To dig deeper into the trends toward as-a-service and colocation to address multicloud challenges, Dell commissioned IDC to develop a Spotlight Paper that features the Dell APEX and Equinix offer.

Here is a snapshot of some of the key benefits:

Dell APEX Delivers:

Simplicity. Gain a unified acquisition, billing and support experience from Dell in the APEX Console with simplified operations and a reduced burden of datacenter management.

Agility. Expand quickly to new business regions and service providers. Build a cloud-like as-a-service experience that offers fast time-to-value and multicloud access with no vendor lock-in.

Control. Leverage leading technologies and IT expertise on a global scale. Deliver a secure, dedicated infrastructure deployment with the flexibility to connect to public clouds while maintaining data integrity, security, resiliency and performance.

Equinix Services Provide:

Cloud adjacency. Dedicated IT solutions (cloud, compute, storage, protection) sit in close physical proximity to, and connect directly with, public cloud providers, software-as-a-service providers, industry-aligned partners and suppliers.

Interconnected Enterprise. Digital leaders leverage Equinix to align Dell’s IT solutions with organizational and user demands across metro areas, countries and continents.

Intelligent Edge. Enterprises aggregate and control data from multiple sources – at the edge and across the organization – and then provide access to artificial intelligence, machine learning and deep analytic engines without moving the data. This deployment future-proofs access to data, radically reducing data egress costs while connecting to the engines of innovation now and in the future.

The combination of Dell APEX and Equinix interconnected colocation offers real differentiation and is key for organizations seeking to achieve their digital transformation goals in a multicloud world.

Source: dell.com