Saturday 27 April 2024

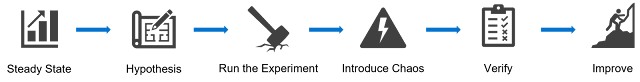

Embracing the Chaos: Database Resiliency Engineering

Thursday 25 April 2024

Build the Future of AI with Meta Llama 3

Use Cases

Dell PowerEdge and Meta Llama models: A Powerhouse Solution for Generative AI

Get Started with the Dell Accelerator Workshop for Generative AI

Tuesday 23 April 2024

AI Literacy – A Foundational Building Block to Digital Equity?

Equitable Access to Devices and Internet Connectivity is Still a Baseline Need

Digital Skills Training Programs Are Essential for People to Safely and Confidently Use AI

Communities Need Trusted Experts to Help People Access Digital Services

AI Literacy to Empower All

Saturday 20 April 2024

Scale Up or Out with AMD-Powered Servers

Better Scalability, Sustainability and Performance

- 2X higher performance. The new AMD EPYC processors deliver up to 107% higher processing performance and up to 33% more storage capacity than their predecessor. That leads to faster business insights and improved application performance across the board.

- Denser and more efficient. The fourth-generation AMD EPYC processors deliver 50% more core density than previous generations, resulting in 47% higher performance per watt for better energy efficiency. In addition, Dell’s Smart Cooling technology reduces the server’s energy consumption through improved airflow and optimized cooling features.

- Easier to manage. Dell’s management tools, such as OpenManage Enterprise Power Manager and integrated Dell Remote Access Controller (iDRAC), make it easier than ever before to manage and automate bare metal servers, from correcting configuration drifts to optimizing energy usage.

- More secure. PowerEdge servers feature a silicon-based root of trust that protects against external attacks. AMD EPYC processors also feature Infinity Guard, which helps reduce the threat surface of the server during operation.
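
The percentage claims in the list above are easier to compare once converted to multipliers over the baseline. A minimal sketch of that arithmetic follows; the function name `uplift_to_multiplier` is illustrative and not part of any Dell or AMD tool.

```python
def uplift_to_multiplier(percent_higher: float) -> float:
    """Convert an 'X% higher' claim into a multiplier over the baseline."""
    return 1.0 + percent_higher / 100.0


# Figures quoted in the list above.
performance = uplift_to_multiplier(107)   # "up to 107% higher processing performance"
perf_per_watt = uplift_to_multiplier(47)  # "47% higher performance per watt"

print(f"Performance: {performance:.2f}x the predecessor")
print(f"Performance per watt: {perf_per_watt:.2f}x the previous generation")
```

Read this way, "107% higher" is slightly more than double the predecessor, which is where the "2X" headline comes from.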

Flexible Configurations for a Broad Range of Applications

Thursday 18 April 2024

Experience Choice in AI with Dell PowerEdge and Intel Gaudi3

Harnessing Silicon Diversity for Customized Solutions

Technical Specifications Driving Customer Success

Strategic Advancements for AI Insights

Technological Openness Fostering Innovation

Unique Networking and Video Decoding Capabilities

Dell PowerEdge XE9680 with the Gaudi3: A Visionary Step Forward in AI Development

Saturday 13 April 2024

Connected PCs: Expanding Dell’s Relationship with AT&T

Addressing Modern Connectivity Demands

Security Concerns in the Age of Hybrid Work

Enhanced Security with Connected PCs

Seamless Integration and Enhanced Performance

The Carrier Referral Program: Simplifying Connectivity

Empowering Connectivity for the Future

Tuesday 9 April 2024

Plan Inferencing Locations to Accelerate Your GenAI Strategies

Leveraging RAG to Optimize LLMs

Considerations for Where to Run Inferencing

Inferencing On-premises Can Save Costs and Accelerate Innovation

Leverage a Broad Ecosystem to Accelerate Your GenAI Journey

Saturday 6 April 2024

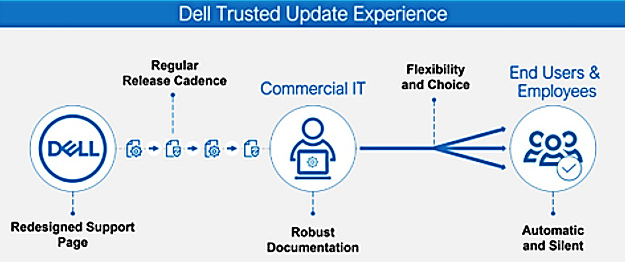

The Most Manageable Commercial PCs – From Dell

- Publishes a release schedule for device drivers and downloads, so IT admins can deploy fleet-wide device updates on a predictable timeline.

- Performs integrated validation of all driver and BIOS modules in an update.

IT admins can:

- Securely configure BIOS settings on a fleet of Dell commercial PCs, natively in Microsoft Intune.

- Configure unique per-device BIOS passwords on a fleet of Dell commercial PCs, natively in Microsoft Intune.

Thursday 4 April 2024

Dell Technologies Powering Creativity from Studio to Screen

Tuesday 2 April 2024

Reduce the Attack Surface

- Apply Zero Trust principles. Zero Trust is a security concept centered on the belief that organizations should not automatically trust anything inside or outside their perimeters and instead must verify everything trying to connect to their systems before granting access. Organizations can achieve a Zero Trust model by incorporating solutions like micro-segmentation, identity and access management (IAM), multi-factor authentication (MFA) and security analytics, to name a few.

- Patch and update regularly. Keeping operating systems, software and applications up to date with the latest security patches helps address known vulnerabilities and minimize the risk of exploitation.

- Ensure secure configuration. Systems, networks and devices need to be correctly configured with security best practices, such as disabling unnecessary services, using strong passwords and enforcing access controls, to reduce the potential attack surface.

- Apply the principle of least privilege. Limit user and system accounts to only have the minimum access rights necessary to perform their tasks. This approach restricts the potential impact of an attacker gaining unauthorized access.

- Use network segmentation. Dividing a network into segments or zones with different security levels helps contain an attack and prevents lateral movement of a cyber threat by isolating critical assets and limiting access between different parts of the network.

- Ensure application security. Implementing secure coding practices, conducting regular security testing and code reviews and using web application firewalls (WAFs) help protect against common application-level attacks and reduce the attack surface of web applications.

- Utilize AI/ML. Leverage these capabilities to help proactively identify and patch vulnerabilities, significantly shrinking the attack surface. AI/ML tools can help organizations scale security capabilities.

- Work with suppliers who maintain a secure supply chain. Ensure a trusted foundation with devices and infrastructure that are designed, manufactured and delivered with security in mind. Suppliers that provide a secure supply chain, secure development lifecycle and rigorous threat modeling keep you a step ahead of threat actors.

- Educate users and promote awareness. Training employees and users to recognize and report potential security threats, phishing attempts and social engineering tactics helps minimize the risk of successful attacks that exploit human vulnerabilities.

- Use experienced professional services and partnerships. Collaborating with knowledgeable and experienced cybersecurity service providers and forming partnerships with business and technology partners can bring in expertise and solutions that might not be available in-house. This can enhance the overall security posture of an organization.
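
As one illustration of the list above, the principle of least privilege can be audited by comparing what an account has been granted with what its role actually needs. The sketch below is a simplified assumption of how such a check might look; the role names, permission strings and the `excess_permissions` helper are hypothetical and not taken from any specific IAM product.

```python
from __future__ import annotations

# Hypothetical role definitions: the minimum permissions each role needs.
REQUIRED = {
    "backup-operator": {"read:volumes", "write:backups"},
    "help-desk": {"read:tickets", "reset:passwords"},
}


def excess_permissions(role: str, granted: set[str]) -> set[str]:
    """Return permissions granted beyond what the role requires.

    Unknown roles require nothing, so everything granted to them is
    reported as excess.
    """
    return granted - REQUIRED.get(role, set())


granted = {"read:volumes", "write:backups", "delete:volumes"}
for permission in sorted(excess_permissions("backup-operator", granted)):
    print(f"Revoke candidate: {permission}")
```

Treating unknown roles as requiring nothing is a deliberate choice here: it makes the audit fail closed rather than silently accept accounts that were never modeled.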

Saturday 30 March 2024

Harnessing NVIDIA Tools for Developers with Precision AI-Ready Workstations

Key Challenges

- Hardware setup. Configuring hardware for deep learning and GenAI tasks is typically intricate and technical, involving multiple steps that consume developers’ time and resources, diverting their focus from actual model development.

- Portability freedom. Achieving the flexibility to migrate developments and workloads to different locations requires substantial effort and technical proficiency. Challenges encountered on the source machine may resurface on the target, particularly if the environment is different. Dependencies and variables play a crucial role in determining how projects perform across diverse environments.

- Workflow management. Identifying, installing and managing elements of AI workflows requires many cycles. Developers are faced with manually tracking project elements, and the lack of automation and user-friendly interfaces impacts productivity.

NVIDIA Tools for Developers

Powering AI Development

- Simplified. Precision workstations simplify the AI journey by enabling software developers and data scientists to prototype, develop and fine-tune GenAI models locally on the device.

- Local environment and control. Within a sandbox environment, developers have full control over the configuration and resources, allowing for easy customization and upgrades. This provides for predictability, which is crucial for informed decision-making and builds confidence in the AI models.

- Tailored and scalable. Depending on the requirements for GenAI workloads, workstations can be designed and configured to support up to four NVIDIA RTX Ada Generation GPUs in a single unit, such as the Precision 7960 Tower. This provides users with substantial performance across their AI projects and streamlines both training and development phases.

- Trusted. Running AI workloads at the deskside allows for data to stay on-premises, providing greater control over data access and minimizing the potential exposure of proprietary information.

Partnering for Success

Thursday 28 March 2024

The Telecom Testing Dilemma: Dine Out or Take Home?

We’re Your Resident Experts

End-to-end Services . . . and Much More

We’ll Even Take the Next Steps

Tuesday 26 March 2024

Detect and Respond to Cyber Threats

- Monitoring. Scanning network and system activities using security tools and technologies like intrusion detection systems (IDS), intrusion prevention systems (IPS), log analysis and threat intelligence feeds.

- Threat detection. Analyzing collected data to identify patterns, anomalies, and indicators of compromise (IoCs) that may indicate a potential cyber threat. This includes recognizing known attack signatures as well as identifying anomalous behavior or deviations from the norm.

- Alerting and notification. Generating alerts and notifications to security personnel or a security operations center (SOC) when potential threats or incidents are detected. These alerts provide early warning to prompt investigation and response.

- Incident response. Initiating a response plan to investigate and mitigate confirmed security incidents. This involves containing the impact, identifying the root cause and using managed detection and response (MDR) tools to take the actions needed to restore systems and prevent further damage.

- Utilization of AI / ML. Detecting cyber threats through real-time analysis of unusual data patterns or behaviors. These technologies also facilitate rapid response by assessing threat severity, predicting impacts, automating certain defensive actions and scaling security practices, thus minimizing potential damage.

- Forensic analysis. Conducting detailed analysis of the incident to understand the attack methodology, determine the extent of the breach, identify affected systems or data and gather evidence for potential legal or disciplinary actions.

- Remediation and recovery. Taking steps to remediate vulnerabilities, patch systems, remove malware and implement enhanced security measures to prevent similar incidents in the future. Restoring affected systems and data to their normal state is also part of the recovery process.
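
To illustrate the threat detection and alerting steps above, the sketch below flags values that deviate sharply from a known-good baseline. It uses a simple z-score test, which is only one of many possible techniques and far simpler than what production IDS or AI/ML tooling does; the sample counts, the threshold and the `find_anomalies` helper are assumptions made for the example.

```python
from statistics import mean, stdev


def find_anomalies(baseline, observed, threshold=3.0):
    """Return (index, value) pairs from `observed` that lie more than
    `threshold` standard deviations from the mean of `baseline`."""
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A perfectly flat baseline: any different value is anomalous.
        return [(i, v) for i, v in enumerate(observed) if v != mu]
    return [
        (i, v) for i, v in enumerate(observed)
        if abs(v - mu) / sigma > threshold
    ]


# Hypothetical hourly counts of failed logins.
baseline_failed_logins = [4, 6, 5, 7, 5, 6, 4, 5]
observed_failed_logins = [5, 6, 48, 4]

for hour, count in find_anomalies(baseline_failed_logins, observed_failed_logins):
    print(f"ALERT: hour {hour} had {count} failed logins")
```

In practice the alert would be routed to security personnel or a SOC rather than printed, and the baseline would be recomputed as normal behavior shifts.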

Saturday 23 March 2024

Dell and AMD: Redefining Cool in the Data Center

You might be surprised to learn that one of the main challenges facing data centers today isn’t scalability or security. It’s energy. As servers become exponentially more powerful, they require even more power to run. This comes at a time when energy costs are fluctuating and most enterprises are committed to reducing their energy usage through sustainable business practices. Fortunately, Dell Technologies has developed some really cool innovations around energy efficiency that make our PowerEdge servers featuring AMD’s fourth-generation EPYC processors both more scalable and more sustainable than ever before.

Keeping Up with Rising Demands…without Catching Heat

There’s a lot more to Dell’s PowerEdge servers than just processors and storage drives. For example: air. And while you may not think about airflow when you think of a server chassis, at Dell we spend a lot of time thinking about how to optimize and improve airflow to keep energy costs low and processing performance at peak levels. Our unique Smart Cooling technology uses computational fluid dynamics to discover the optimal airflow configurations for our PowerEdge servers. Dell’s Smart Flow design, for example, enables PowerEdge servers to run at higher temperatures by increasing airflow in the server chassis, even as it reduces fan energy consumption by as much as 52%.

Improved airflow is only one aspect of our Smart Cooling initiative. We’ve also forged innovations in direct liquid and immersion cooling. And we continue to work with our partners to improve the sustainability and energy efficiency of our products through joint development initiatives, like the PowerEdge C6615 with AMD Siena, which maximizes density and air-cooling efficiency for data centers where footprint expansion is not an option.

Dell Delivers Energy Savings You Can See

Now, you may be thinking, “Those innovations sound impressive, but how do I know they’re actually saving me money and reducing energy consumption?” That’s where Dell’s OpenManage Power Manager comes into play. OpenManage Power Manager allows organizations to view and manage their energy consumption, calculate energy cost savings and track other performance metrics from their PowerEdge servers through an easy-to-navigate graphical user interface (GUI). Power Manager monitors server utilization, greenhouse gas (GHG) emissions, energy savings (based on local energy rates) and more to ensure that you’re getting the most from your PowerEdge servers while using the least amount of energy, which in turn helps improve data center power usage effectiveness (PUE).
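
To make these metrics concrete, here is a minimal sketch of the arithmetic behind an energy cost saving and behind PUE, which is conventionally defined as total facility energy divided by IT equipment energy. The function names and sample figures are illustrative assumptions; they are not Power Manager’s API or output.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power usage effectiveness: total facility energy / IT equipment energy.

    A value closer to 1.0 means less energy is spent on overhead such as cooling.
    """
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return total_facility_kwh / it_equipment_kwh


def energy_cost_savings(baseline_kwh: float, current_kwh: float,
                        rate_per_kwh: float) -> float:
    """Cost saved over a period, priced at the local energy rate."""
    return (baseline_kwh - current_kwh) * rate_per_kwh


# Hypothetical monthly figures for a small server room.
print(f"PUE: {pue(total_facility_kwh=1500, it_equipment_kwh=1000):.2f}")
print(f"Savings: {energy_cost_savings(12000, 10500, rate_per_kwh=0.12):.2f}")
```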

Another tool for energy management is the integrated Dell Remote Access Controller (iDRAC). iDRAC works in conjunction with Dell Lifecycle Controller to help manage the lifecycle of Dell PowerEdge servers from deployment to retirement. It also provides telemetry data generated by PowerEdge sensors and controls, including:

- Real-time airflow consumption (in cubic feet per minute or CFM) with tools to remotely control airflow balancing at the rack and data center levels.

- Air temperature control from the inlet to exhaust.

- PCIe card inlet temperature and airflow.

- Exhaust temperature controls based on hot/cold aisle configurations or other considerations.
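
Telemetry like the items above is most useful once it is compared across servers. The sketch below assumes the readings have already been collected into simple records; the `ThermalSample` fields, server names and the 55 °C limit are hypothetical and do not reflect iDRAC’s actual data model or recommended thresholds.

```python
from dataclasses import dataclass


@dataclass
class ThermalSample:
    """One hypothetical thermal reading for a server."""
    server: str
    inlet_c: float      # inlet air temperature, degrees Celsius
    exhaust_c: float    # exhaust air temperature, degrees Celsius
    airflow_cfm: float  # airflow in cubic feet per minute


def delta_t(sample: ThermalSample) -> float:
    """Temperature rise from inlet to exhaust."""
    return sample.exhaust_c - sample.inlet_c


def servers_over_limit(samples, max_exhaust_c: float):
    """Names of servers whose exhaust temperature exceeds the limit."""
    return [s.server for s in samples if s.exhaust_c > max_exhaust_c]


samples = [
    ThermalSample("rack1-node1", inlet_c=24.0, exhaust_c=41.0, airflow_cfm=55.0),
    ThermalSample("rack1-node2", inlet_c=25.0, exhaust_c=58.0, airflow_cfm=38.0),
]

for s in samples:
    print(f"{s.server}: rise of {delta_t(s):.1f} C at {s.airflow_cfm:.0f} CFM")
print("Over limit:", servers_over_limit(samples, max_exhaust_c=55.0))
```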

Achieving Sustainability at Scale

You can choose Dell PowerEdge servers for their legendary reliability and exceptional scalability. Many hyperscalers and enterprises do just that. But it’s nice to know that you don’t have to choose between doing what’s best for your data center and doing what’s best for the environment. With Dell PowerEdge servers featuring AMD EPYC processors, companies can increase their server performance per watt for scale workloads, reduce the amount of energy they use in their data centers, measure that energy usage in real time, automate energy-tracking policies such as power caps and proactively address energy issues before they present a problem. The complete PowerEdge portfolio for scale is available in multiple configurations and chassis heights with air-cooling and DLC options that enable significant energy savings and greater computational efficiency compared with previous server generations.

As more businesses look to balance scalability with sustainability, there’s never been a better time to consider Dell PowerEdge servers powered by AMD for your data center. PowerEdge servers with AMD EPYC processors deliver the consolidation opportunities and scalability you need to move forward, without getting burned by high energy costs. Now, how cool is that?

Source: dell.com