Saturday, 29 October 2022

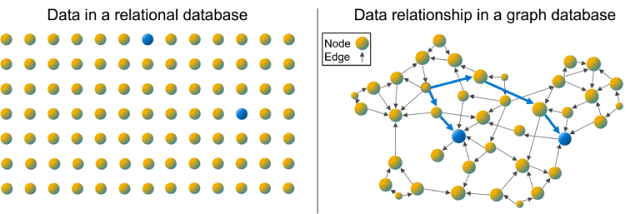

Analyze Data Relationships at Speed and Scale

Thursday, 27 October 2022

Delivering IT as Easy as Ordering Takeout

Use a Self-service Marketplace to Handle Day-to-day Requests

Let Automation Do the Heavy Lifting

Personalize the IT Experience for Enhanced Utilization

Optimize IT Operations and Experiences with Strategic Integrations

Empower Your Workforce with Consumer-grade Experiences

Sunday, 23 October 2022

Are You Familiar with the Monster in the Cloud?

While most people get to put away their scary stories and sleepless nights come November, cybersecurity teams wrestle with the fear of a security breach year-round.

Over the last 10 years, a few major shifts have happened. Businesses embraced digital transformation, gradually adopting cloud-based applications, software as a service (SaaS) and infrastructure as a service (IaaS). Then the COVID-19 pandemic pushed organizations to remote work and dramatically changed the network landscape, including where data and apps were managed. Today, our hybrid, hyper-distributed world brings new challenges to security teams as more and more corporate data is distributed, shared and stored outside on-prem data centers, in the cloud. Despite its many benefits, work-from-anywhere exposes organizations to new vulnerabilities (new monsters) that must be slain. The old "castle and moat" security model, which focused on protecting the data center via a corporate network, is essentially obsolete.

Enter a new security model called a Secure Access Service Edge (SASE) architecture. SASE brings together next-generation network and security solutions for better oversight and control of the IT environment in our cloud-based world. How do you enable a SASE architecture? With Security Service Edge (SSE) solutions. SSE solutions enable secure access to web, SaaS, IaaS and cloud apps for a company’s users, wherever they are. The core products within SSE are:

◉ Secure Web Gateway (SWG) for secure web & SaaS access

◉ Cloud Access Security Broker (CASB) for secure cloud app access

◉ Zero Trust Network Access (ZTNA) for secure private app access (versus network access)

Saturday, 22 October 2022

Adapting to Climate Change

Friday, 21 October 2022

Is Data Scientist Certification Worth It? Spoiler Alert: Yes

Many people want to know how a Data Scientist certification can help them advance and improve their careers. If you are one of them, it is worth considering earning one. Because the IT industry is already intensely competitive, you need to use your expertise to distinguish yourself for a particular position.

Many people work toward a Data Scientist certification because it can significantly improve their professional lives. Which certification you decide to pursue and focus on is entirely up to you.

Many organizations offer certifications, but you should choose only those with recognized market value, such as the Data Scientist certification. Certified professionals demonstrate these skills and abilities in their work, beyond their everyday tasks.

These skills have practical uses in many situations, from day-to-day life to making a positive social impact and earning more money. Many businesses today seek to hire certified data scientists to improve their organizations' performance.

Who Can Become a Data Scientist? Is It Suitable for You?

Data science certifications provide proof of the qualities and knowledge you have. If you have excelled in the field and earned top certifications, then trust me, you will be a strong candidate for a data scientist role.

The widespread use of data and its relevance in today's digital world have created a surging need for experts and professionals in data science. Data science has become an indispensable tool for businesses in every industry, helping them gain valuable insights to improve their operations, stand out in their field, and outperform their competitors.

The demand for data scientists has also paved the way for graphical user interface tools that do not require expert coding knowledge: with a solid understanding of algorithms, you can quickly build data processing models. As a result, even if you do not have strong coding skills or a degree in data science, you can still become a data scientist, provided you have good learning capabilities.

New technologies allow organizations to easily collect large amounts of data, but they often do not know what to do with this information. Data scientists use advanced methods to extract value from data. They collect, organize, visualize, and analyze data to find patterns, make decisions, and solve problems.

Data scientists need strong programming, visualization, communication, and mathematics skills. Typical job responsibilities include gathering data, creating algorithms, cleaning and validating data, and drafting reports. Nearly any organization can benefit from the contributions of a trained data scientist. Potential work sectors include healthcare, logistics, banking, and finance.

How Can Experts Help You Learn Data Science?

Many data science positions require a thorough knowledge of NoSQL, Hadoop, and Python, because the work relies on data systems such as Hadoop plus a whole toolchain built around them. The industry's growing demand for faster analysis means that more people need to understand data science, and professionals can get on board and begin learning immediately.

At the same time, technical knowledge makes it possible to rely on automated analysis rather than manual processes. Python and R have moved from being primarily research tools to being the preferred choice, because they make it possible to automate most of this work.

An expert subscription lets candidates keep learning new skills, resources, and technologies while staying up to date on the projects and teams they work on and are interested in.

Data science significantly influences many applications, most notably in healthcare and the sciences. As a result, big data analytics has become a strategic focus for many companies.

End Notes

Data science credentials are not useless. Many platforms offer certificates for data science courses because they are a good way for students to prove that they are actively acquiring new expertise. Recruiters appreciate candidates who keep working to develop themselves, so credentials can help your job application.

However, that impact will probably be minor, if there is any at all. What matters most is whether you can do the job, and recruiters will judge that primarily by assessing your project portfolio.