When IT launched an Enterprise Data Science platform in 2020 to help data scientists build artificial intelligence (AI)- and machine learning (ML)-driven processes at Dell, a key challenge was whether the 1,800 team members already engaged in data science across the company would adopt it.

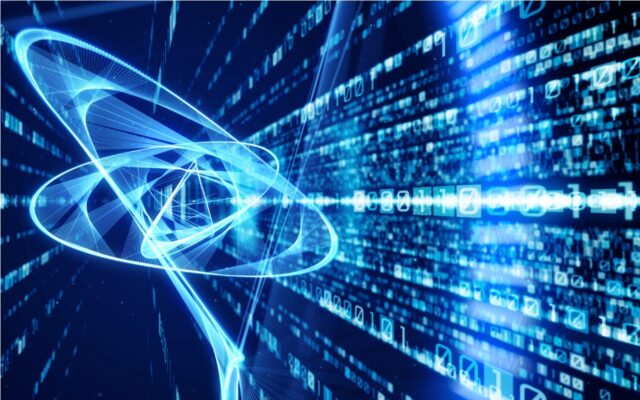

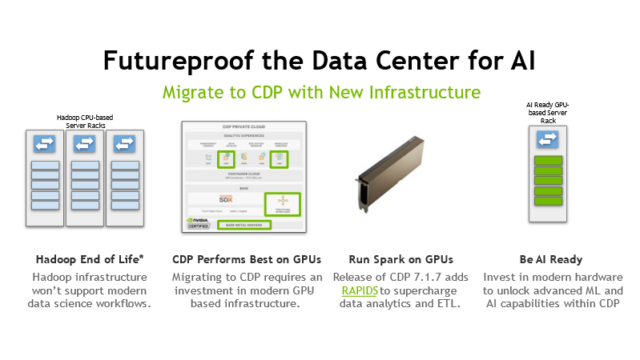

With platform use up 50 percent over the past year and still growing, along with expanding demand for more data science capabilities, Dell IT is continuing to invest in AI and ML. We are now looking toward a much broader challenge on the horizon: making every Dell application AI- and ML-enabled.

Central to realizing that vision is making the data science process better and faster by improving data scientists’ end-to-end experience.

What we’ve learned

In an earlier blog, “Democratizing Data Science, A Federated Approach to Supporting AI and ML,” I described the creation of the Enterprise Data Science platform. That platform now supports more than 650 users and is on course to reach 1,000 users by the end of this year. As usage has grown, the Enterprise Data Science team has been looking more holistically at the data science experience, and we’ve discovered several key insights that are helping to focus our ongoing investments.

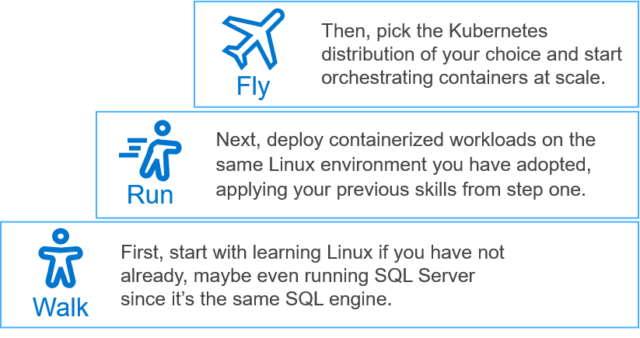

First, we found that with data science, one size doesn’t fit all. There are three types of data science users: teams that are just forming, nascent teams that have delivered their first wins, and mature teams seeking advanced capabilities. While the first type wants something simple and ready to go, the third wants extensive customization that helps them access large compute pools or deploy and integrate models.

We are working to meet these diverse needs with standardization, blueprints and automation. And we are meeting them in another critical way as well, by providing an IT team that data scientists can turn to directly for advice and help.

Although there are different data science personas, all share a few common needs. They all start with data: the need to discover it, acquire it, process it securely and analyze it for patterns and insights. They typically work iteratively, i.e., they get data, analyze it, interpret it and validate the results, then return to the first step to get more data. It is a “rinse and repeat” cycle to find what supports their hypothesis, which generally stems from a business opportunity that a subject matter expert identifies and hands off to engineers and data scientists. The faster this cycle runs to validate hypotheses, the better the results the data science team can deliver.
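
To make the cycle concrete, here is a minimal Python sketch of the “rinse and repeat” loop. It assumes a hypothetical `get_batch` data source and a deliberately simple hypothesis (the mean of some business metric exceeds a threshold). The stopping rule, which ends the loop once new data stops moving the estimate, is likewise an illustrative assumption rather than a description of how Dell’s teams decide.

```python
import random
import statistics
from typing import Callable, Dict, List


def rinse_and_repeat(
    get_batch: Callable[[int], List[float]],  # hypothetical data source
    threshold: float,
    batch_size: int = 50,
    max_rounds: int = 20,
    tolerance: float = 0.05,
) -> Dict[str, object]:
    """Get data, analyze it, validate, and repeat until the estimate settles."""
    if max_rounds < 1:
        raise ValueError("max_rounds must be at least 1")
    data: List[float] = []
    previous = None
    for round_no in range(1, max_rounds + 1):
        data.extend(get_batch(batch_size))  # 1. get (more) data
        estimate = statistics.fmean(data)  # 2. analyze it
        # 3. validate: stop once new data no longer moves the estimate much
        if previous is not None and abs(estimate - previous) < tolerance:
            break
        previous = estimate
    # 4. interpret: does the accumulated evidence support the hypothesis?
    return {"rounds": round_no, "estimate": estimate, "supported": estimate > threshold}


if __name__ == "__main__":
    rng = random.Random(7)
    # Simulated metric centered on 1.2; the hypothesis is "mean > 1.0".
    result = rinse_and_repeat(lambda n: [rng.gauss(1.2, 0.5) for _ in range(n)], threshold=1.0)
    print(result)
```

The faster each pass through `get_batch` completes, the sooner the loop reaches a decision, which is why the data access step is the natural target for automation.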

The Enterprise Data Science platform team is working on tools to speed up these repetitive processes, in particular by automating data access and processing, which is where data science practitioners spend the most time.

Currently, data scientists must figure out on their own where to find the data they need across Dell’s various databases and how to access it. They might search through tables or ask around to find what they are looking for. Imagine hundreds of people doing that repeatedly and in silos. In doing this work, they effectively create value from information by determining which data is most useful for optimizing a process.

Our goal is to help data scientists move faster and to capture the valuable data they create. To achieve this, we are collaborating with our data scientists to identify the top sources they get data from and to build a solution that standardizes how data is discovered, acquired and processed from those locations. The idea is to let them access data instantly and securely from our Dell Data Lake and the other main data repositories across the company.

Once data scientists obtain the data, we help them version, document, test and catalog the new data features they create, making those features available to other data science teams.
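
The version, document, test and catalog step can be pictured as a small registry. The Python sketch below is hypothetical: the `FeatureCatalog` class, its methods and the example feature are illustrative names, not Dell’s internal tooling. Each feature is published only after its test cases pass, every re-registration under the same name bumps the version, and other teams can search the catalog by description.

```python
import math
from dataclasses import dataclass
from typing import Callable, Dict, List, Mapping, Sequence, Tuple

Row = Mapping[str, float]


@dataclass(frozen=True)
class FeatureVersion:
    name: str
    version: int
    description: str  # documentation travels with the feature
    owner: str
    compute: Callable[[Row], float]


class FeatureCatalog:
    """Hypothetical in-memory catalog of versioned, tested data features."""

    def __init__(self) -> None:
        self._features: Dict[str, List[FeatureVersion]] = {}

    def register(
        self,
        name: str,
        description: str,
        owner: str,
        compute: Callable[[Row], float],
        tests: Sequence[Tuple[Row, float]],
    ) -> FeatureVersion:
        # Test: refuse to publish a feature whose examples do not reproduce.
        for row, expected in tests:
            actual = compute(row)
            if not math.isclose(actual, expected, rel_tol=1e-9, abs_tol=1e-12):
                raise ValueError(f"{name}: expected {expected} for {dict(row)}, got {actual}")
        # Version: each new registration of the same name gets the next number.
        versions = self._features.setdefault(name, [])
        feature = FeatureVersion(name, len(versions) + 1, description, owner, compute)
        versions.append(feature)
        return feature

    def latest(self, name: str) -> FeatureVersion:
        return self._features[name][-1]

    def search(self, keyword: str) -> List[str]:
        # Catalog: let other teams discover features through their documentation.
        needle = keyword.lower()
        return sorted(
            name for name, versions in self._features.items()
            if needle in versions[-1].description.lower()
        )


if __name__ == "__main__":
    catalog = FeatureCatalog()
    catalog.register(
        "avg_item_price",
        "Average price per item in an order",
        "pricing-team",
        lambda r: r["order_total"] / r["item_count"],
        tests=[({"order_total": 30.0, "item_count": 3.0}, 10.0)],
    )
    print(catalog.search("price"), catalog.latest("avg_item_price").version)
```

A production catalog would persist features and lineage in shared storage; the in-memory dictionary here only illustrates the workflow.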

All our capabilities are exposed through application programming interfaces (APIs), which we bundle into internally developed software development kit (SDK) packages for data scientists. This lets them engage with our technology simply and efficiently through the language of their choice (e.g., Python).
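
As a hypothetical sketch of what such an SDK wrapper can look like in Python: the `DataScienceClient` class, its endpoints (`/datasets` and `/datasets/{id}/rows`) and the base URL are assumptions for illustration only. The point is the pattern, in which a thin client turns HTTP API calls into ordinary Python methods. The transport is injected, so the client can be exercised with a fake in tests and backed by a real HTTP call in practice.

```python
import json
import urllib.request
from typing import Any, Callable, Dict, List
from urllib.parse import quote, urlencode

# A transport takes (method, url) and returns the response body as text.
Transport = Callable[[str, str], str]


def http_transport(method: str, url: str) -> str:
    """Real transport using only the standard library (authentication omitted)."""
    request = urllib.request.Request(url, method=method)
    with urllib.request.urlopen(request, timeout=30) as response:
        return response.read().decode("utf-8")


class DataScienceClient:
    """Hypothetical SDK wrapper over an internal data-access REST API."""

    def __init__(self, base_url: str, transport: Transport = http_transport) -> None:
        self.base_url = base_url.rstrip("/")
        self._transport = transport

    def _get(self, path: str, **params: Any) -> Any:
        query = f"?{urlencode(params)}" if params else ""
        body = self._transport("GET", f"{self.base_url}{path}{query}")
        return json.loads(body)

    def find_datasets(self, keyword: str) -> List[Dict[str, Any]]:
        """Discover: search the hypothetical catalog of registered data sources."""
        return self._get("/datasets", q=keyword)

    def read_rows(self, dataset_id: str, limit: int = 100) -> List[Dict[str, Any]]:
        """Acquire: fetch rows from one dataset, URL-escaping its identifier."""
        return self._get(f"/datasets/{quote(dataset_id, safe='')}/rows", limit=limit)


if __name__ == "__main__":
    def fake_transport(method: str, url: str) -> str:
        print(method, url)
        return json.dumps([{"id": "sales/orders", "owner": "data-lake"}])

    client = DataScienceClient("https://datascience.example.com/api", fake_transport)
    print(client.find_datasets("orders"))
```

Keeping the HTTP details behind one `_get` method means authentication, retries or a different API gateway could be added in one place without changing the methods data scientists call.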

Getting to AI models faster

Beyond the data side of their work, data scientists share common needs across the other steps of their development process, including applying algorithms to the problem they are tackling and then building and training the model that delivers the desired result.

As we’ve scaled our support of data scientists, the team has found that most data scientists set up very similar algorithms for specific functions in their models, and that they invariably begin with small amounts of data and then focus on making their models scale over time. Yet each of our data science team members starts from a blank slate on every project.

The Enterprise Data Science team has a sub-team that specializes in DevOps for AI and ML. It provides templates and infrastructure setups that help data scientists get their models up and running faster and scale them more efficiently. Our aim is to move projects from ideation to production faster. To achieve this, our software engineering team is working closely with data scientists to first understand several use cases and drive them to success, and then identify where the process is repetitive and create solutions.

Our initial work has led us to begin creating baseline algorithms, so data scientists won’t have to start from scratch each time they create a model. Similarly, data scientists can tap into blueprints that help them easily parallelize workloads, use specialized compute instances (such as GPUs), and train and retrain algorithms at scale. Our first templates are included in every workspace on our AI/ML platform; users just open them and make their modifications to get going. Our initial data indicates that users can go from idea to production six to 10 times faster with these tools.
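
As a hypothetical illustration of a baseline algorithm combined with a parallelization blueprint, the Python sketch below fits an ordinary least-squares line (standing in for a baseline model) to each data partition concurrently. The partition names and the choice of least squares are assumptions, not the contents of Dell’s templates. The executor is a parameter, so the same template can run on a thread pool locally and be switched to a `ProcessPoolExecutor` or another `concurrent.futures.Executor` as the workload grows.

```python
from concurrent.futures import Executor, ThreadPoolExecutor
from typing import Dict, List, Tuple

Point = Tuple[float, float]
Model = Tuple[float, float]  # (slope, intercept)


def fit_baseline(points: List[Point]) -> Model:
    """Baseline algorithm: ordinary least-squares fit of y = slope * x + intercept."""
    n = len(points)
    if n < 2:
        raise ValueError("need at least two points")
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def train_partitions(partitions: Dict[str, List[Point]], executor: Executor) -> Dict[str, Model]:
    """Blueprint: submit one baseline fit per partition, then collect the results."""
    futures = {key: executor.submit(fit_baseline, pts) for key, pts in partitions.items()}
    return {key: future.result() for key, future in futures.items()}


if __name__ == "__main__":
    data = {
        "region_a": [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0)],
        "region_b": [(0.0, 0.0), (1.0, -1.0), (2.0, -2.0)],
    }
    with ThreadPoolExecutor(max_workers=4) as pool:
        print(train_partitions(data, pool))
```

Because `fit_baseline` is a module-level function, it can also be pickled and shipped to worker processes, which is what a process pool needs for CPU-bound training.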

Smoothing out the last mile

From a customer and business perspective, the most important step in data science is the “last mile” of the process, when AI and ML models are implemented in Dell applications to capture the value of the new insights and innovations they bring. Here too, the Enterprise Data Science platform team is working to add speed and efficiency by providing templates, training and support.

To accelerate these tasks, the team focuses heavily on skills transfer and training. On the one hand, we have to train data scientists to build more deployment-ready models using standardized technology that our engineers can understand and quickly integrate into apps. On the other hand, we have to help engineering teams become more familiar with data science technologies so that deployment at production scale goes smoothly.
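
One way to picture “standardized technology” at the hand-off is a shared model contract. The sketch below is an assumption, not Dell’s actual interface: data scientists implement a hypothetical `DeployableModel` base class, and engineers only ever call `predict` and load a JSON artifact, regardless of how the model was built. A simple linear scorer stands in for a real model.

```python
import json
from abc import ABC, abstractmethod
from typing import Any, Dict, List

Record = Dict[str, float]


class DeployableModel(ABC):
    """Hypothetical contract shared by data scientists and application engineers."""

    @abstractmethod
    def predict(self, records: List[Record]) -> List[float]:
        """Score a batch of input records."""

    @abstractmethod
    def to_artifact(self) -> str:
        """Serialize everything needed to rebuild the model in production."""


class LinearScorer(DeployableModel):
    def __init__(self, weights: Dict[str, float], bias: float, version: str = "1.0.0") -> None:
        self.weights = weights
        self.bias = bias
        self.version = version

    def predict(self, records: List[Record]) -> List[float]:
        # Indexing with record[feature] fails loudly (KeyError) on a missing
        # input instead of silently scoring it as zero.
        return [
            self.bias + sum(w * record[feature] for feature, w in self.weights.items())
            for record in records
        ]

    def to_artifact(self) -> str:
        return json.dumps(
            {"kind": "linear", "version": self.version, "weights": self.weights, "bias": self.bias},
            sort_keys=True,
        )

    @classmethod
    def from_artifact(cls, artifact: str) -> "LinearScorer":
        payload: Dict[str, Any] = json.loads(artifact)
        if payload.get("kind") != "linear":
            raise ValueError("artifact is not a linear model")
        return cls(payload["weights"], payload["bias"], payload["version"])


if __name__ == "__main__":
    model = LinearScorer({"visits": 0.5, "tenure_years": 2.0}, bias=1.0)
    restored = LinearScorer.from_artifact(model.to_artifact())
    print(restored.predict([{"visits": 4.0, "tenure_years": 1.5}]))
```

The design choice is that the application side depends only on the abstract contract, so a data scientist can swap in a different model class without the engineers rewriting their integration code.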

The team is currently focused on seven production engagement cases for pushing new data science models into IT apps. These will help our engineers define patterns for standardization and a common architecture. We hope to reduce such implementations, which previously could take several months, to six to eight weeks by the second half of 2021.

Data science, AI and ML are among the fastest-changing areas of technology, and at the same time they represent a big opportunity for us to improve our customer experience and business outcomes. We have made great strides in improving the data science process that is fueling innovation across Dell’s business units, and we will continue to develop standardized, automated capabilities to add efficiency.

But perhaps our biggest success is supporting data science through much more direct, non-automated interaction. When data scientists and engineers have questions, they can reach out, and someone in IT will pick up the phone. We learn from each of those interactions. That’s what our Enterprise Data Science team is all about.

Source: delltechnologies.com