Saturday 30 December 2023

Artificial Intelligence is Accelerating the Need for Liquid Cooling

Thursday 28 December 2023

This Ax Stinks – Accelerating AI Adoption in Life Sciences R&D

In the world of life sciences research and development, the potential benefits of artificial intelligence (AI) are tantalizingly close. Yet, many research organizations have been slow to embrace the technological revolution in its entirety. Specifically, while they might leverage AI for part of their process (typically “in model” or predictive AI), they hesitate to apply improved techniques to review existing data or generate potential new avenues of research. The best way to understand the problem is through an analogy: an ax versus a chainsaw.

The “Ax vs. Chainsaw” Analogy

Imagine a team of researchers tasked with chopping down trees, each equipped with an ax. Over time, they develop their own skills and processes to efficiently tackle the job. Now, envision someone introducing a chainsaw to the team. To effectively use the chainsaw, the researchers must pause their tree-chopping efforts and invest time in learning this new tool.

What typically happens is that one group of researchers, especially those who have found success with the old methods, will resist, stating they are too busy chopping down trees to learn how to use a chainsaw. A smaller subset will try to use the new tool incorrectly and therefore find little success. They will state, “this ax stinks.” The early adopters and those most interested in finding new ways of doing things, by contrast, will become the most productive on the team. They will stop, learn how to use the new tool and rapidly surpass those who did not take the time to do so.

In our analogy, it is important to acknowledge the chainsaw, representing AI tools, is not yet perfectly designed for the research community’s needs. Just like any emerging technology, AI has room for improvement, particularly in terms of user-friendliness and accessibility—and especially so for researchers without a background in IT. Dell Technologies is working with multiple organizations to improve tools and increase their usability. However, the current state of affairs should not deter us from embracing AI’s immense potential. Instead, it underscores the need for ongoing refinement and development, ensuring AI becomes an even more powerful and accessible tool in the arsenal of life sciences research and development.

The Potential of AI in Life Sciences R&D as a Catalyst, Not a Replacement

By moving beyond conventional predictive AI models and applying extractive and generative AI (GenAI), researchers can discover correlations hidden in overlooked and underutilized existing data. These insights go beyond what an ordinary review process would find and can open new avenues of exploration.

It is crucial to understand that AI is not here to replace researchers but to empower them. AI is excellent at finding correlation and completely inadequate at establishing causation, so researchers are still needed to judge which correlations matter. The technology adoption curve still applies, and we are in the early adopter phase. Early adopters in the pharmaceutical space can gain a significant advantage in terms of accelerated time to market.
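The gap between correlation and causation can be illustrated with a small simulation. This is a minimal sketch and not part of the original article: the variables `confounder`, `biomarker` and `outcome`, the noise levels and the sample size are all hypothetical. A hidden factor drives both the biomarker and the outcome, so the two correlate strongly even though neither influences the other. Once the hidden factor is adjusted for, the correlation largely disappears.

```python
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def residuals(ys, xs):
    """Residuals of ys after removing its least-squares linear fit on xs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sum(
        (x - mean_x) ** 2 for x in xs
    )
    return [(y - mean_y) - slope * (x - mean_x) for x, y in zip(xs, ys)]


def simulate(n=5000, seed=0):
    """Hypothetical data: a hidden confounder drives both measurements."""
    rng = random.Random(seed)
    confounder = [rng.gauss(0, 1) for _ in range(n)]
    # Neither variable depends on the other; both depend on the confounder.
    biomarker = [c + rng.gauss(0, 0.5) for c in confounder]
    outcome = [c + rng.gauss(0, 0.5) for c in confounder]
    return confounder, biomarker, outcome


if __name__ == "__main__":
    confounder, biomarker, outcome = simulate()
    raw = pearson(biomarker, outcome)
    adjusted = pearson(
        residuals(biomarker, confounder), residuals(outcome, confounder)
    )
    print(f"raw correlation:      {raw:.2f}")
    print(f"adjusted correlation: {adjusted:.2f}")
```

A pattern-finding tool would flag the raw correlation as a strong signal. Recognizing that a third factor explains it takes domain knowledge and experimental design, which is where the researcher comes in.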

Create an AI Adoption Plan, Select the Right Partners and Measure Your Success

To kickstart AI adoption, research organizations across all of life sciences need a structured plan. My recommendation is to start by focusing AI adoption on specific targets with clear, well-defined and addressable pain points. In particular, focus on areas where you can measure success. In addition, be constantly vigilant about data security. Organizationally, this goes beyond compliance: consider protecting data (and the data about your data) at all times.

In addition, select the right partners to help you accelerate adoption. At Dell, we have been working overtime to make sure our solutions and our AI and GenAI services align with your needs. We’ve made sure they focus on a multicloud, customizable infrastructure that lets your organization maintain data sovereignty.

Lastly, measure your success and expand. It is important that leadership is involved, that your KPIs meet requirements and that everyone in the organization is comfortable with embracing failure and adapting to find success.

For life sciences research organizations, the key decision is to accelerate adoption of AI across their organization. Embracing AI tools and methodologies is not just an option; it is a necessity to stay competitive in the ever-evolving landscape of scientific discovery and therapeutic development. Dell Technologies is here to help with that process and stands ready to work with your organization wherever you are on your journey. Remember, those who master the chainsaw of AI will become the trailblazers in the field, ushering in a new era of accelerated progress.

Source: dell.com

Tuesday 26 December 2023

Unleash Multicloud Innovations with Dell APEX Platforms and PowerSwitch

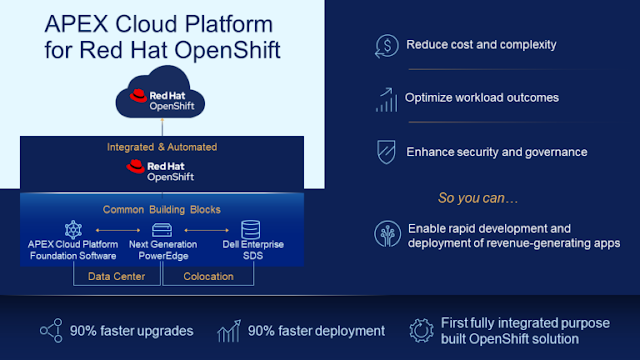

Dell Networking for Dell APEX Cloud Platform for Red Hat OpenShift

Put Dell APEX Cloud Platform for Red Hat OpenShift to Work for You

- Seamless integration of Dell networking with Dell APEX Cloud Platform for Red Hat OpenShift, which simplifies deployment, management and maintenance, reducing the risk of interoperability issues.

- Better overall system performance when Dell APEX Cloud Platform for Red Hat OpenShift is deployed with Dell networking.

- A single point of support across the overall deployment, which provides a consistent service experience.

- The Dell APEX Cloud Platform for Red Hat OpenShift solution with Dell networking offers competitive pricing compared to standalone components from various vendors.

- Reduced complexity and efficient management translate into lower operational expenses (OpEx).

- Regular and seamless system updates across the ACP for OpenShift ecosystem.

Saturday 23 December 2023

Dell and Druva Power Innovation Together

Momentum, Growth and Market Trust

Strengthening Security and Ransomware Resilience

- Nuvance Health achieved 70% faster backup times while scaling protection effortlessly and cutting costs for safeguarding Microsoft 365 data, SQL databases and 1,600 VMware virtual machines.

- The Illinois State Treasury Department reduced platform management time by 80%, securing over $50 billion in assets and sensitive data and bolstering its security posture.

- TMS Entertainment, Ltd. in Japan eliminated its data center footprint and resolved backup errors instantly, enhancing operational efficiency.

Friday 22 December 2023

Boost Efficiency and Update Your VxRail for Modern Demands

Unmatched Performance for Complex Workload Management

Easily Embrace AI with VxRail

Resource Optimization and Cost-Effective Operations

More Operational Benefits in CloudIQ for VxRail

No Better Time to Refresh

Thursday 21 December 2023

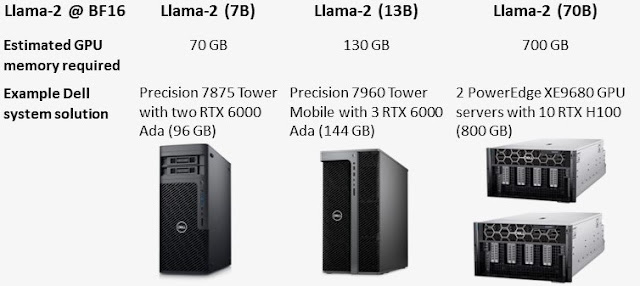

How to Run Quantized AI Models on Precision Workstations

Wednesday 20 December 2023

Become the Enabler of Next Generation Data Monetization

Data as an Economic Asset

Why SD-WAN SASE?

Simplify the Edge with the Power of Three

Tuesday 19 December 2023

Secure Data, Wherever it Resides, with Proactive Strategies

Multi-layered Defense for a Resilient Cloud Strategy

Strengthening Network Segmentation

Evolving Toward Continuous Vulnerability Management

Remain Vigilant and Have a Plan in Place

Saturday 16 December 2023

Empowering Generative AI, Enterprise and Telco Networks with SONiC

Generative AI Advancements

Enterprise and Service Provider Use Case Enhancements

Security Advancements

Enablement and Validation with Dell Hyperconverged Infrastructure and Storage Solutions

Unified Fabric Health Monitoring

Thursday 14 December 2023

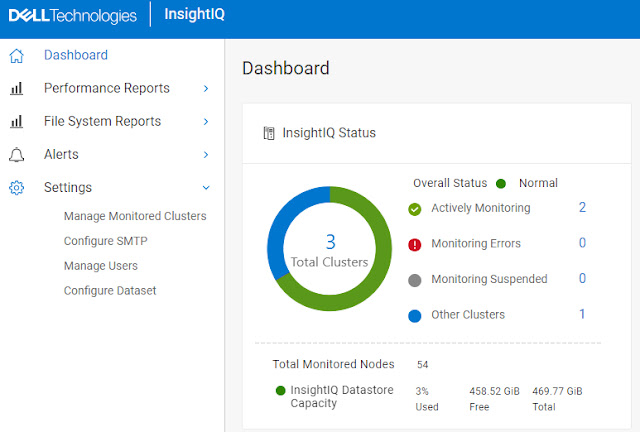

InsightIQ 5.0: Driving Efficiency for Demanding AI Workloads

Simplified Management

Expanded Scale and Security

Automated Operations

Tuesday 12 December 2023

Precision Medicine, AI and the New Frontier

- The challenges and opportunities of applying AI to precision medicine, which aims to provide personalized and effective healthcare based on data and evidence.

- The need for data quality, clinical governance and ethical oversight to ensure AI solutions are reliable, safe and beneficial for patients and providers.

- A proposed five-step process for healthcare providers to explore and implement AI solutions in their organizations, involving data analysis, clinical identification, ROI estimation, MVP modelling and pilot testing.

1. Review the historical data set and define what intelligence you can obtain by leveraging this existing data. Most organizations do not have this expertise in-house and would need to liaise with a specialist team of clinical informaticians and data experts to understand the current environment.

2. Work with the clinical team to identify key client groups or system workflows where care (defined in terms of the IHI quadruple aim) can be improved by leveraging data more effectively.

3. Research the potential return on investment by combining the data analysis in step one with the clinical target identified in step two.

4. Model the solution (MVP) and conduct a pilot with a view to a fast deployment into the live clinical environment.

5. Continue back-testing and working with the clinical teams to ensure relevance and impact.

Saturday 9 December 2023

Generative AI Readiness: What Does Good Look Like?

To Achieve High GenAI Readiness, You Need a Framework

- Strategy and Governance

- Data Management

- AI Models

- Platform Technology and Operations

- People, Skills and Organization

- Adoption and Adaptation

Drive GenAI Strategy with Business Requirements, Use Cases and Clear Governance

Get Your Data House in Order

Match the Model to the Use Case and Continuously Monitor Performance

Build a Solid Technology and Operational Foundation

Level Up Skills and Organization

Manage Adoption and Adaptation

Embark on Short-term GenAI Opportunities and Advance GenAI Readiness

Tuesday 5 December 2023

Simplifying Artificial Intelligence Solutions Together with EY

The level of market excitement around generative AI (GenAI) is hard to exaggerate, and most agree this fast-developing technology represents a fundamental change in how companies will be doing business going forward. Areas such as customer operations, content creation, software development and sales will see substantive changes in the next few years. However, this leap in technology does not come without challenges: how to deploy and maintain the required infrastructure, as well as ethical, regulatory and security issues.

To help guide companies in their journey toward transformation, EY and Dell Technologies are collaborating to develop joint solutions on EY.ai. The EY.ai platform brings together human capabilities and AI to help organizations transform their businesses through confident and responsible adoption of AI. With a portfolio of GenAI solutions and tools for bespoke EY services, customers can confidently pursue their transformational AI opportunities.

Take the financial services industry as an example. A GenAI deployment could quickly help firms with intricate data analytics: taking complex data sets, running queries and identifying previously hidden trends through much more comprehensive analyses.

Using Proven Dell Technology

Underpinning solutions in EY’s new AI-driven solutions portfolio is industry-leading Dell infrastructure, such as the recently announced Dell Validated Design for Generative AI. With a proven and tested approach to adopting and deploying full-stack GenAI solutions, customers can now prototype and deploy workloads on purpose-built hardware and software with embedded security optimized for generative AI use case requirements. In addition, EY and Dell’s joint Digital Data Fabric methodology and Dell’s multicloud Alpine platform mean customers can be ready to optimize their data across public cloud, on-prem and edge AI applications.

Deliver on Your Priorities Faster and at Scale

One of the largest hurdles for organizations’ AI visions is aligning business demand for innovation and value creation with IT deployment capabilities. GenAI deployments will frequently be a mix of edge, dedicated cloud (aka on-prem) and public cloud layers. With growing supply chain lead times, competition for shared GenAI-specific infrastructure, expanding security concerns and other headwinds, organizations need to unlock the benefits of a multi-tiered infrastructure model to drive sustainable value creation. Dell Technologies and EY’s joint solutions on EY.ai, using Dell validated designs, simplify the AI pathways to business impact. These solutions accelerate an organization’s ability to focus scarce resources on GenAI business results and not on the constraints of IT deployment patterns.

The Smartest Way to Get Started

Accelerate speed-to-value by starting with a focused, yet high-impact, proof-of-concept to demonstrate benefits quickly, while in parallel setting a strategy and establishing a trusted GenAI foundation to rapidly meet expanding needs of the business.

Source: dell.com