Imagine an orchestra without a maestro: each musician plays their own tune, and chaos reigns supreme. Now imagine building a modern telecom cloud network without an engineered solution that meets today’s open standards and sets the stage for tomorrow’s technological advancements. You’d be dealing with the equivalent of a symphonic mess.

Just as an orchestra encounters hurdles while striving to create the perfect performance, communication service providers (CSPs) face challenges when building and deploying a modern cloud network. CSPs must contend with interoperability issues as they move toward a horizontal cloud stack. They face resource management challenges across infrastructure lifecycles and inefficiencies in day zero through day two operations. Then there is the pressure of rapid technological change and the need to quickly adopt the latest technologies into their infrastructure, including 5G, cloud-native technologies and open-source software that disaggregate network functions from the underlying hardware.

Orchestrating a telco cloud network efficiently across densely populated urban areas and out to far-flung rural landscapes, while keeping costs in check, is no small feat. That’s precisely where Dell Technologies and Dell Telecom Infrastructure Blocks step in to take center stage.

The Composers: Dell’s Engineering Team and Cloud Platform Partners

In this symphony of telecom innovation, the Dell Technologies engineering team takes on the role of the composer. They work in harmony with leading telecom cloud platform vendors, like Red Hat and Wind River, to craft the perfect score for the Telecom Infrastructure Blocks. Just as a composer creates a musical masterpiece, Dell’s engineers integrate, test and validate the Telecom Infrastructure Blocks to address specific 5G Core and vRAN/Open RAN (ORAN) workload requirements.

The Maestro and the Wand: Dell Telecom Infrastructure Blocks

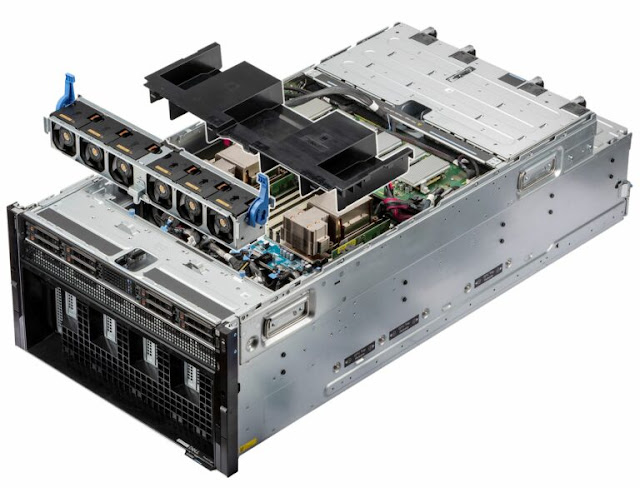

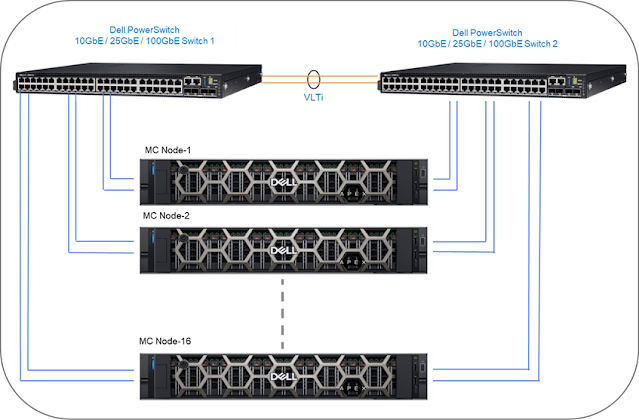

This is where the magic happens. Telecom Infrastructure Blocks are pre-validated, integrated and engineered telco multicloud foundation building blocks. They consist of Dell PowerEdge servers along with software licenses for Dell Bare Metal Orchestrator, Bare Metal Orchestrator Modules and our cloud platform partner’s software.

This maestro is the heart and soul of the operation, directing every move of each telecom infrastructure component. Trying to deploy a modern telco cloud without it is like an orchestra without a conductor: a disorganized mess. To keep everything operating in harmony, Bare Metal Orchestrator Modules integrate with the cloud software to deliver a seamless deployment and lifecycle management experience for the entire cloud stack. With the declarative automation capabilities of Bare Metal Orchestrator, CSPs simply define the desired outcome for their infrastructure environment, and Bare Metal Orchestrator translates that outcome into the steps needed to compose and deploy all necessary elements of the stack. Picture the conductor waving a wand and the servers’ memory, networking, storage and processors working together like well-trained musicians to meet the unique requirements of telecom workloads.
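Declarative automation is easier to picture with a small example. The sketch below is a generic illustration of the desired-state idea, not Bare Metal Orchestrator’s actual API or data model: the `plan_steps` function, the `Step` type, and the server names and settings are all hypothetical. It compares a declared target state with the observed state and derives the imperative steps needed to close the gap.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Step:
    """One imperative action derived from a declarative spec (hypothetical model)."""
    action: str
    server: str
    detail: str = ""


def plan_steps(desired: dict[str, dict[str, str]],
               actual: dict[str, dict[str, str]]) -> list[Step]:
    """Compare desired vs. observed state and return the steps needed to converge.

    Both arguments map a (hypothetical) server name to its settings,
    e.g. {"edge-01": {"firmware": "2.1", "role": "vran"}}.
    """
    steps: list[Step] = []
    for server in sorted(desired):
        want = desired[server]
        have = actual.get(server)
        if have is None:
            # Declared but not yet provisioned: deploy it with the full spec.
            spec = ", ".join(f"{k}={v}" for k, v in sorted(want.items()))
            steps.append(Step("provision", server, spec))
            continue
        for key in sorted(want):
            if have.get(key) != want[key]:
                # Setting drifted or was never applied: change only this attribute.
                steps.append(Step("set", server, f"{key}={want[key]}"))
    for server in sorted(set(actual) - set(desired)):
        # Present in the environment but no longer declared: retire it.
        steps.append(Step("decommission", server))
    return steps


# Usage with illustrative, made-up inventory data.
desired = {
    "core-01": {"firmware": "2.1", "role": "5g-core"},
    "edge-01": {"firmware": "2.1", "role": "vran"},
}
actual = {
    "core-01": {"firmware": "2.0", "role": "5g-core"},
    "old-07": {"firmware": "1.9", "role": "vran"},
}
for step in plan_steps(desired, actual):
    print(step)
```

Because the plan is computed from the difference between the two states, applying it again once the environment matches the declaration yields no steps, which is what allows a declarative system to be re-run safely.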

The Performance Stage: Dell Factory Integration

This is where factory integration of the Dell PowerEdge servers and cloud platform software takes place. This reduces the time and cost of infrastructure deployment while helping to accelerate the onboarding of new technology. Integration in the Dell factory also minimizes the potential for configuration errors. Think of it as the moment before the curtain rises, where the orchestra fine-tunes their instruments, ensuring everything is in perfect harmony.

The Orchestra: Dell PowerEdge Servers Taking the Stage

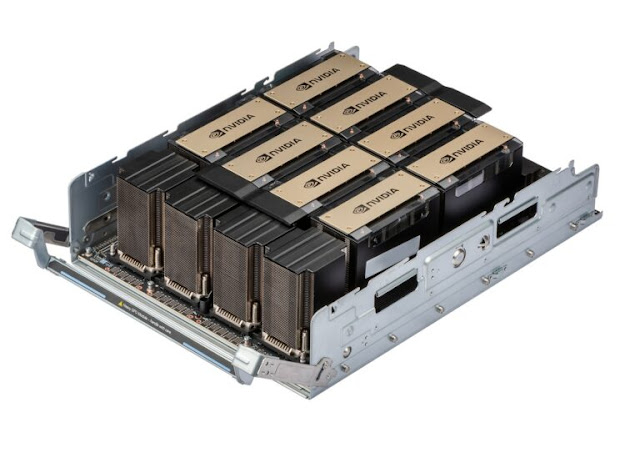

Now, let’s talk about the orchestra: the compute servers, each of which plays a vital role in creating the symphony of telecom services. Like the different sections of an orchestra, the servers’ memory, networking, storage and processors with acceleration capabilities each contribute their own part.

◉ Memory. The strings section, providing a foundational base for the network’s performance.

◉ Networking. Think of it as the woodwinds section, facilitating the smooth flow of data and communication.

◉ Storage. The brass section, offering robust support and structure.

◉ 4th Generation Intel® Xeon® Processors. The percussionists, adding power and speed to the overall performance.

The Backstage Crew: Dell Services and Support Teams

In the world of telecom, Dell Services and Support Teams are the backstage crew of our orchestra, keeping the performance running smoothly and providing assistance when needed. Imagine the panic if a musician’s instrument breaks during a concert. Just as an instrument repair technician is on hand to support the orchestra, Dell’s support team stands ready to help in case of issues, ensuring the performance meets the highest telco standards, including rapid response times and service restoration.

The Concert: Bringing It All Together

Just as an orchestra relies on the conductor and sheet music to create beautiful music, CSPs can depend on Telecom Infrastructure Blocks for a well-orchestrated foundation. These building blocks streamline the design, configuration, lifecycle management and automation of the telecom cloud infrastructure, ensuring the right notes are played at the right time—consistently and efficiently.

◉ Simplify operations. Telecom Infrastructure Blocks are pre-integrated and validated systems that simplify operations for CSPs, minimizing integration requirements and automating manual tasks. They break down infrastructure silos and allow for the deployment of a flexible, cloud-native network without constraints.

◉ Reduce risks. Telecom Infrastructure Blocks ensure a consistent telco-grade deployment, or upgrade, of the cloud platform, reducing operational risks. Dell’s unified support model meets telecom industry standards, providing peace of mind.

◉ Increase agility. Telecom Infrastructure Blocks accelerate the introduction of new technology with continuous integration testing and validation. This can help CSPs reduce costs, improve customer experiences and meet business objectives.

Dell Telecom Infrastructure Blocks serve as the maestro, orchestrating the telecom network’s success. They ensure all the hardware and cloud software components play in perfect harmony, so CSPs can achieve optimal operational cost structures, meet stringent SLAs and quickly adopt and deploy new technologies. It’s the symphony of modernization, played with simplicity, efficiency, speed and confidence. Don’t leave your network without a conductor—choose Dell Telecom Infrastructure Blocks for a symphonic performance in the telecom world.

Source: dell.com