How do you turn your idea into a market-ready product or service? How do you move a prototype into production? How do you scale to mass production while maintaining high levels of customer support? If you’re an existing company, how do you digitize your assets and develop a different customer model that provides new services and drives additional revenues? These are the burning questions that keep innovators and business leaders awake at night.

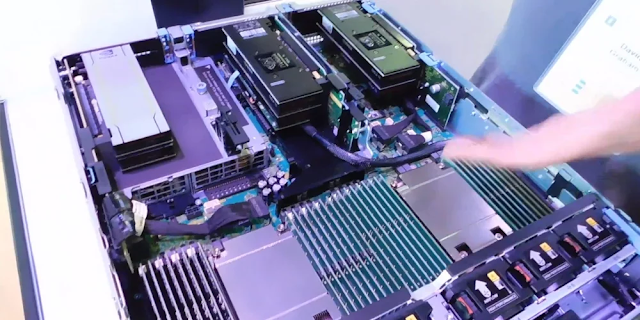

Focus on what you do best

As the VP of the Dell Technologies OEM | Embedded & Edge Solutions Product Group, I believe success comes down to the right partnership and division of labor. No one person can be an expert at everything. My advice is to focus on what you do best – generating great ideas or running your core business – and recruit the right partners to help do the rest.

The Weir Group’s digital transformation is a classic case in point. An engineering company founded in the 1870s, Weir is now increasingly seen as an expert in both mechanical technology and analytics.

Weir’s new value proposition

Of course, providing the best mining equipment continues to be at the heart of Weir’s offering, but it is no longer the only differentiator. Weir’s enhanced value proposition focuses on process optimization and predictive maintenance: the company can remotely monitor the performance of customer equipment and use this data to schedule preventative maintenance.

Avoid downtime & gain new insights

This approach also means that customers avoid costly, unscheduled downtime and gain better insight into the likely timing of equipment replacements. You can read more about Weir’s digital transformation story here. In this blog, I want to share some personal insights into what we did from an engineering perspective to help bring Weir’s vision to life, along with my top tips for successful digital transformation.

Together is better

For starters, I personally loved the fact that every partner involved in the project contributed to the ultimate solution. What we designed together reflects not only Weir’s IP, but also our expertise and the expertise of our partners and suppliers. The solution really was bigger than the sum of its parts. Together, we developed something that none of us could have done alone.

The customer is all that matters

That brings me to another important point. While Dell Technologies might be one of the biggest technology companies in the world, we know that we don’t always have all the answers. We’re happy to bring in other expertise as required, work with other partners and generally just do what’s right by the customer.

Open minds

We all came to this project with open minds, no preconceptions and a willingness to listen and learn. We had to understand Weir’s business and what it was trying to achieve.

While Weir had developed an in-house prototype, we started by sitting down, discussing what was needed and working together on an architectural design. Apart from engineering services from my group, we also involved colleagues from Supply Chain, Operations, Materials, Planning, Customization and Dell Financial Services. We were there for the long haul, providing value at every step along the way. It was a real partnership from the outset.

Innovation takes time

It’s important to say that the entire process – from initial idea and proof of concept to the first production unit leaving the factory – took two years. That wasn’t because any partner was being slow or unresponsive; the reality is that figuring out how to make a production-level, industrial-grade, top-quality solution takes time.

A shared understanding

In many ways, we understood first-hand what Weir was trying to achieve. Many people still perceive Dell Technologies as a commercial, off-the-shelf box provider. And it’s true – we do that very well, but we have also evolved into a full services and solutions provider. So, we know all about setting a vision and working to transform what we do and how others perceive us.

Transforming business models

For Weir, the good news is that production rollout is underway. This is one of our most complex IoT projects to date, and I believe the new technology solution has the potential to transform Weir into a digital engineering solutions company, revolutionizing how the company serves its mining, oil and gas, and infrastructure customers and opening up possibilities for new products and services.