As organizations work to meet the performance demands of new data-intensive workloads, accelerated computing is gaining momentum in mainstream data centers.

As data center operators struggle to stay ahead of a growing deluge of data while supporting new data-intensive applications, demand is rising for systems that incorporate graphics processing units (GPUs). These accelerators complement the CPUs at the heart of the system, using parallel processing to churn through large volumes of data at blazingly fast speeds.

For years, organizations have used accelerators to rev up graphically intensive applications, such as those for visualization, modeling and simulation. Today, though, accelerator use cases are expanding well beyond these traditional targets into more mainstream applications. In particular, accelerators have become one of the keys to speeding up artificial intelligence (AI), machine learning and deep learning, for both training and inference, along with predictive analytics, real-time analysis of data streaming in from the Internet of Things (IoT), and more. In fact, NVIDIA® GPUs can accelerate roughly 600 applications.

So what’s the big advantage of an accelerator? GPUs work alongside the CPU in a divide-and-conquer approach to get results faster. A GPU typically has thousands of cores designed to execute mathematical functions efficiently and in parallel. Portions of a workload are offloaded from the CPU to the GPU, while the rest of the code keeps running on the CPU, improving overall application performance.
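
To see why offloading only part of a workload still pays off, and where the gains level off, here’s a back-of-the-envelope sketch based on Amdahl’s law (my addition, not something from the Dell EMC or NVIDIA material). It assumes a fraction of the original runtime can be offloaded and that this fraction runs a fixed number of times faster on the GPU, and it ignores the cost of moving data between CPU and GPU. The numbers are illustrative, not benchmarks.

```python
def overall_speedup(offload_fraction: float, gpu_speedup: float) -> float:
    """Amdahl's law estimate of whole-application speedup.

    offload_fraction: share of the original runtime moved to the GPU (0..1).
    gpu_speedup: how many times faster that share runs on the GPU.
    Assumes zero CPU-GPU data-transfer overhead (a simplification).
    """
    if not 0.0 <= offload_fraction <= 1.0:
        raise ValueError("offload_fraction must be between 0 and 1")
    if gpu_speedup <= 0:
        raise ValueError("gpu_speedup must be positive")
    # Runtime that stays on the CPU plus the shrunken offloaded runtime,
    # both relative to an original runtime of 1.0.
    new_runtime = (1.0 - offload_fraction) + offload_fraction / gpu_speedup
    return 1.0 / new_runtime


if __name__ == "__main__":
    # Illustrative values only: 90% of runtime offloaded, 10x faster on the GPU.
    print(round(overall_speedup(0.9, 10.0), 2))  # → 5.26
```

Even with an infinitely fast GPU, that same workload tops out at 1 / (1 - 0.9) = 10x overall, which is why the code that stays on the CPU still matters.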

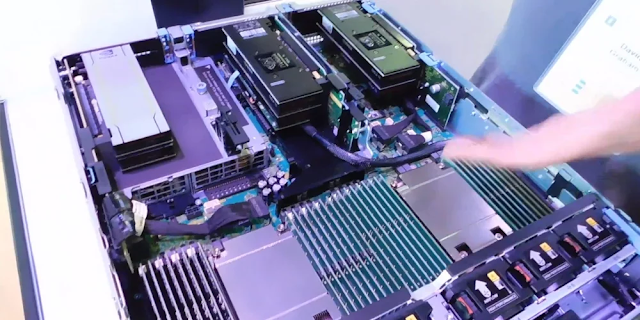

Accelerated servers from Dell EMC

If you’re just getting started with AI, machine learning or deep learning, or if you just want a screaming-fast 2-CPU tower server, check out the PowerEdge T640. You can put up to 4 GPUs inside this powerhouse, and it fits right under your desk! It has plenty of internal storage capacity with up to 32x 2.5” hard drives, and you can connect all your tech with those 8 PCIe slots. (I’m thinking that something like this could improve team online gaming performance.)

Beyond my personal wish list, the PowerEdge T640 is also rackable, and you can virtualize and share its GPUs with VMware vSphere or Bitfusion software to provide accelerated virtual desktop infrastructure (VDI), artificial intelligence, and other test and development workspaces.

For those serious about databases and data analytics, check out the PowerEdge R940xa. The PowerEdge R940xa server combines 4 CPUs with 4 GPUs in a 1:1 ratio to drive database acceleration. With up to 6TB of memory, this server is a beast that can grow with your data.

If you’re not sure what to pick, you can’t go wrong with the popular PowerEdge R740 (Intel) or PowerEdge R7425 (AMD), each with 2 CPUs and up to 3 heavyweight NVIDIA® V100 GPUs or 6 lightweight NVIDIA® T4 GPUs. In the PowerEdge R7425, you can make great use of all those x16 PCIe lanes. Why look at lanes? The vast majority of GPUs plug into a PCIe slot inside a server, and PCIe traffic is carried over the lanes in packets. More lanes mean more packets can travel at the same time, which can mean faster data transfer between the CPU and GPU.
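
To put rough numbers on “more lanes,” here’s a sketch that estimates theoretical one-direction PCIe bandwidth from the lane count. It assumes PCIe 3.0 signaling (8 gigatransfers per second per lane with 128b/130b encoding), which is my assumption rather than a spec quoted for these servers, and it ignores packet headers and other protocol overhead, so real-world throughput will be lower.

```python
# Assumed PCIe 3.0 parameters: 8 gigatransfers/s per lane, 128b/130b line encoding.
TRANSFERS_PER_SECOND = 8e9
ENCODING_EFFICIENCY = 128 / 130


def pcie3_bandwidth_gb_per_s(lanes: int) -> float:
    """Theoretical one-direction bandwidth in GB/s (10^9 bytes) for a PCIe 3.0 link.

    Ignores packet headers and other protocol overhead, so it is an upper bound.
    """
    if lanes < 1:
        raise ValueError("a PCIe link needs at least one lane")
    # Each transfer carries one bit per lane; encoding eats 2 of every 130 bits.
    bits_per_second = lanes * TRANSFERS_PER_SECOND * ENCODING_EFFICIENCY
    return bits_per_second / 8 / 1e9


if __name__ == "__main__":
    for width in (4, 8, 16):
        print(f"x{width}: {pcie3_bandwidth_gb_per_s(width):.2f} GB/s")
```

Under these assumptions, an x16 link works out to roughly 15.75 GB/s each way versus about 3.94 GB/s for x4, which is why a GPU sitting in a full x16 slot can move data to and from the CPU faster.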

Dive into the good-better-best options by workload in this new Dell EMC and NVIDIA ebook.

In addition to PowerEdge servers, Dell EMC has a growing portfolio of accelerated solutions. At a glance, Dell EMC Ready Solutions for HPC and AI make it faster and simpler for your organization to adopt high-performance computing. They offer a choice of flexible, scalable high-performance computing solutions, with servers, networking, storage and services optimized together for use cases across a variety of industries.

The big point here is that your organization now has ready access to the acceleration-optimized, pre-integrated solutions you need to power your most data-intensive and performance-hungry workloads. So let’s get started!
