Over the past several years, there has been a growing interest in the use of accelerators on standard servers to improve workload performance. It started with GPUs to accelerate AI/ML and now includes FPGAs, SMART-NICs on servers and other low-power embedded accelerators in end-devices for data analytics, inferencing and machine learning. In this blog, we share our perspective on these new classes of emerging accelerators and the role they will play in the growing adoption of IoT and 5G as workloads get distributed from edge to data center to cloud.

The Data Decade will see transformation of the compute landscape and proliferation of acceleration technologies

With the exponential growth of data, an increasing number of IoT devices at the edge, and every industry going through digital transformation, the future of computing is driven by the need to process data cost-effectively, maximize business value and deliver a return on investment. The Data Decade is leading to architectures that process data close to where it is generated and send over long-haul networks only the information that requires storage or higher-level analysis. Emerging use cases around autonomous vehicles (self-driving cars, drones), smart-city projects, and smart factories (robots, mission-critical equipment control) require data processing and decision making closer to the point of data generation because these deployments have mission-critical, low-latency and near-real-time requirements.
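As a rough illustration of processing data near its source, the sketch below has an edge node reduce a batch of sensor readings to a small summary and forward only the out-of-range readings in raw form. The function name `summarize_at_edge`, the threshold and the synthetic readings are all assumptions made for this example, not part of any particular product.

```python
from statistics import mean

def summarize_at_edge(readings, threshold):
    """Reduce a batch of readings to a compact summary plus raw anomalies.

    Only the summary and the anomalies would be sent upstream; the bulk of
    the raw readings stays at the edge. (Hypothetical helper for illustration.)
    """
    anomalies = [r for r in readings if r > threshold]
    summary = {
        "count": len(readings),
        "mean": mean(readings),
        "max": max(readings),
    }
    return summary, anomalies

# Synthetic data: 1000 ordinary readings with two injected spikes.
readings = [20.0 + (i % 5) * 0.5 for i in range(1000)]
readings[100] = 95.0
readings[700] = 88.5

summary, anomalies = summarize_at_edge(readings, threshold=80.0)
print(len(readings), "readings in,", len(anomalies), "forwarded raw")
```

In this toy case a thousand readings collapse into one summary record and two raw values, which is the kind of reduction that makes long-haul links and central storage cheaper to operate.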

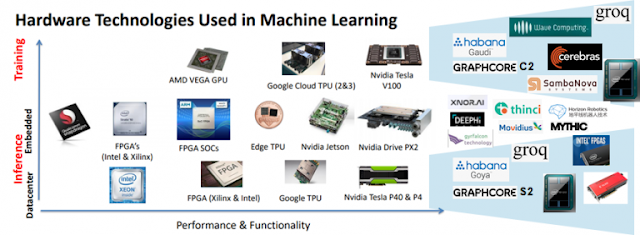

Edge computing architectures are emerging with compute infrastructure and applications distributed from edge to cloud. This trend will lead to a range of compute architectures optimized along different vectors, from massively parallel floating-point compute in the data center for training complex neural network models (where power is less of a constraint) to highly power-efficient devices that run inference on the deployed models at the edge. The result is a Cambrian explosion of devices that will be used as part of this cloud-to-edge continuum.

On the processor front, over the last ten to fifteen years traditional CPU architectures have evolved toward higher core counts and larger memory capacity, but I/O and memory bandwidth haven’t kept pace. Scaling memory and I/O bandwidth is critical for processing massive datasets in the data center and real-time streaming at the edge. These factors are driving hardware acceleration in both networking and storage devices to optimize dataflow across the CPU, memory and I/O subsystems at the overall system level. The growth in data processing has also led to the use of dedicated accelerators for Artificial Intelligence (AI), Machine Learning (ML) and Deep Learning (DL) workloads. These accelerators perform computation in parallel and execute AI jobs faster than traditional CPU architectures. They provide dedicated support for efficient execution of the matrix math that dominates ML/DL workloads. They also offer numerical precision modes beyond those traditionally available in CPUs (such as BFLOAT16 and mixed-precision floating point), which can greatly speed up a broad spectrum of AI applications.
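To make the precision trade-off concrete, here is a minimal software sketch of BFLOAT16, which keeps the sign and 8-bit exponent of a 32-bit float but only the top 7 mantissa bits. It uses only the Python standard library; the helper names are made up for this example, NaN inputs are not handled specially, and real accelerators do this conversion in hardware rather than in code like this.

```python
import struct

def float32_to_bfloat16_bits(x: float) -> int:
    """Return the 16-bit bfloat16 pattern for x (round to nearest, ties to even).

    Sketch only: NaN inputs are not handled specially.
    """
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    # Bias the low 16 bits so that truncation rounds to nearest-even.
    rounding_bias = 0x7FFF + ((bits >> 16) & 1)
    return ((bits + rounding_bias) >> 16) & 0xFFFF

def bfloat16_bits_to_float(b: int) -> float:
    """Expand a bfloat16 bit pattern back to a Python float."""
    return struct.unpack("<f", struct.pack("<I", (b & 0xFFFF) << 16))[0]

def to_bfloat16(x: float) -> float:
    """Round x to the nearest value representable in bfloat16."""
    return bfloat16_bits_to_float(float32_to_bfloat16_bits(x))

def mixed_precision_dot(a, b):
    """Round the inputs to bfloat16, then accumulate in a wider Python float."""
    return sum(to_bfloat16(x) * to_bfloat16(y) for x, y in zip(a, b))

print(hex(float32_to_bfloat16_bits(1.0)))  # 0x3f80
print(to_bfloat16(3.14159265))             # 3.140625
print(mixed_precision_dot([1.0, 2.0], [3.0, 4.0]))
```

The second print shows the cost: pi keeps only about three significant decimal digits. The benefit is that each value needs half the storage and bandwidth of a 32-bit float, while accumulating in a wider type (the idea behind mixed precision) limits how much of that rounding error builds up in long sums.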

Below, we look at five key areas where accelerators will play a pivotal role. Accelerator technologies are also central to the rollout of 5G and its associated services (including AI, AR/VR and content sharing, among others) at every stage of the compute spectrum.

1. AI Workloads / Solutions: In addition to the traditional players in machine learning ASICs (namely Nvidia, Intel and Google), startups are emerging with a focus on higher performance, lower power and specific application areas. Some examples are Graphcore, Groq, Hailo Technologies, Wave Computing and Quadric. They are driving optimizations for specific areas such as natural language processing, AR/VR, speech recognition and computer vision. Some of these AI ASICs focus on inferencing and are being integrated into end-devices such as autonomous vehicles, cameras, robots and drones to drive real-time data processing and decision making.

2. 5G Access Edge: The evolution of cellular networks to 5G is resulting in the virtualization and disaggregation of the Telco Radio Access Network (RAN) architecture. High-frequency 5G wireless spectrum, distance limitations and increasing cell densities require a centralized RAN architecture where radio signals from multiple cell stations are processed at a centralized location. The 3GPP and O-RAN industry standards groups are defining the architecture and specifications to ensure interoperability across RAN vendors. The centralized RAN processing unit uses standard off-the-shelf servers and virtualization techniques along with accelerators to decouple control plane and data plane processing. The control plane runs on a virtualized server called the CU (Central Unit), and data plane processing is done on a standard server called the DU (Distributed Unit), with radio packet processing offloaded to an accelerator card. These accelerator cards are typically an FPGA or a custom ASIC with Time-Sensitive Networking (TSN) and other packet processing capabilities. Software companies like Radisys and Altiostar are delivering RAN control plane software that offloads radio frame processing to accelerator cards.

3. 5G Network Edge: The high speed, low latency and increased number of device connections supported by 5G networks are leading to a new set of use cases around AR/VR, gaming, content delivery and content sharing. This requires moving third-party applications to the network edge, along with network slicing capabilities to distinguish between different types of traffic and their associated Service Level Agreements (SLAs). This transforms the telco network edge into a distributed cloud running third-party developer and service provider applications. Moving workloads to the network edge also requires the underlying network and storage infrastructure services to provide secure networking and data storage. Telco VNFs (e.g. EPC, BNG) and network services (e.g. firewalls, load balancers, IPsec) are moving to the network edge to enable hosting of new workloads. To free up server CPU cores for third-party apps and deliver advanced network security functions (e.g. deep packet inspection, encryption, network slicing, analytics), the underlying network infrastructure services are being offloaded to accelerator cards called SMART-NICs. These SMART-NICs combine standard NIC functionality with low-power CPU cores and hardware acceleration blocks for network processing. They also provide a programmable data plane interface that lets Telco VNFs offload network data-plane processing to hardware. The 3GPP industry standards group is defining the architecture and standards for CUPS (Control and User Plane Separation). Many network adapter companies, including Intel, Mellanox, Broadcom and Netronome, as well as emerging startups, are focusing on these network acceleration adapters. Custom accelerator cards are also emerging that focus specifically on content caching and video analytics (gaming, AR/VR).

4. IoT / Edge Computing: IoT is driving server-class computing to move closer to end-devices. Companies of all sizes are undergoing digital transformation to automate their operations and better serve their customers. This is resulting in hybrid/multi-cloud deployments closer to their end-users (customers, employees or devices), either on-prem or at a co-location facility. As at the network edge, accelerators (FPGAs, SMART-NICs, GPUs) play a key role here, both in accelerating infrastructure services and in accelerating data processing for AI/ML.

5. Centralized Data Center / Cloud: Most edge deployments consist of a hybrid / multi-cloud environment where processing is done as a combination of near-real-time processing at the edge and backend processing in a centralized data center or public cloud. A centralized data center (or public cloud) hosts the infrastructure for deep learning, storage and data processing. The deep learning infrastructure is making increasing use of higher-end GPUs and emerging high-performance deep learning ASICs. With increasing network speeds (50G/100G and beyond), persistent memory, high-performance NVMe drives and increasing security requirements (encryption, compression, deep packet inspection, network analytics), network services are using accelerators to offload network data-plane functions to hardware. This allows all of these functions to run at wire speed and enables service-chaining of network services. Software-defined storage stacks are also evolving to use accelerators for advanced functions like dedup, erasure coding, compression and encryption, and to scale to the higher performance made possible by persistent memory, NVMe drives and high-performance networks.

In addition to the above opportunities around accelerators, an increasing amount of data is being stored on storage systems. To provide intelligent access to that data, future storage devices are evolving to be programmable, with FPGAs and other hardware acceleration techniques embedded in the drive subsystem to analyze the data in place and return only the result to the application. These drives will be able to run third-party code and are commonly referred to as computational storage. This approach reduces data transfer over the network, because large videos and images can be analyzed, and database queries can be performed, right where the data is stored. Large storage systems are also embedding accelerators, virtualization and cloud-native frameworks in the storage system to process data and host third-party analytics applications.
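The data-movement argument for computational storage can be sketched with a toy model. The code below compares a conventional query, where every record crosses the interconnect before the host filters it, with an in-place query, where the filter runs next to the data and only matches are transferred. The `Record` type, the function names and the record sizes are assumptions made for this illustration and do not reflect any specific drive interface.

```python
from dataclasses import dataclass

@dataclass
class Record:
    sensor_id: int
    payload: bytes

def host_side_query(drive, predicate):
    """Conventional path: all records are transferred, then the host filters."""
    transferred = sum(len(r.payload) for r in drive)
    matches = [r for r in drive if predicate(r)]
    return matches, transferred

def in_place_query(drive, predicate):
    """Computational-storage path: filter at the drive, transfer only matches."""
    matches = [r for r in drive if predicate(r)]
    transferred = sum(len(r.payload) for r in matches)
    return matches, transferred

def wanted(record):
    """Example predicate: keep records from one sensor."""
    return record.sensor_id == 3

# Model a drive holding 1000 records of 1 KiB each, from 10 sensors.
drive = [Record(i % 10, bytes(1024)) for i in range(1000)]

host_matches, host_bytes = host_side_query(drive, wanted)
dev_matches, dev_bytes = in_place_query(drive, wanted)
print(host_bytes, dev_bytes)  # 1024000 102400
```

Both paths return the same records, but the in-place path moves one tenth of the bytes in this example. The actual saving depends on how selective the query is and on how much compute the drive can spare.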

This use of hardware acceleration for machine learning, network services and storage services is just the beginning of a change in system-level architecture. The next evolution will drive more optimized data flows across the various accelerators, so that data can move directly between network, storage and GPUs without involving the host x86 CPUs and host memory. This will become increasingly important in future disaggregated and composable server architectures, in which a logical server is composed from independent pools of CPUs, memory, network adapters, disk drives and GPUs connected by a high-performance fabric.

Research is underway to enhance machine learning accelerators with capabilities like reinforcement learning and explainable AI. Future ML accelerators will support localized training at the edge to further improve decision making for localized data sets. Accelerators at the edge also need to account for environmental conditions at the deployment location. Many edge deployments need ruggedized infrastructure because it is deployed either outdoors (street side, in a parking lot or outside a building) or in a warehouse-type environment (e.g. retail, factory). Power, thermal and form factor requirements all need to be considered when building ruggedized infrastructure containing accelerators for edge deployments.

The companies that innovate in this system-level architecture for next-generation workloads will lead in driving customers towards digital transformation, edge and 5G. At Dell EMC, we are heavily focused on this evolving use of accelerators, ruggedized IT infrastructure and system-level optimizations.
