Increasingly, engineers and infrastructure administrators are looking for workload agility. But what exactly is workload agility? First, let’s define workload. Workloads are the computing tasks that employees initiate on systems to complete their companies’ missions. Precision Medicine, Semiconductor Design, Digital Pathology, Connected Mobility, Quantitative Research, and Autonomous Driving are all examples of compute-intensive workloads that are typically deployed on computing infrastructure. “Workload agility,” then, refers to the ability to seamlessly spin up resources to complete any of your company’s required compute-intensive tasks.

In an ideal world, dedicated and siloed infrastructure is replaced with agile, on-demand infrastructure that automatically reconfigures itself to meet diverse, constantly changing workload requirements. This delivers increased hardware utilization, lower cost, and most importantly, shorter time to market. Since dedicated infrastructure can be cost prohibitive, establishing workload agility by eliminating silos within the data center and dynamically provisioning compute resources for the pending workload helps maximize ROI and hardware utilization and, ultimately, fulfill the organization’s mission.

Workloads in most enterprises are asymmetric, requiring GPUs, FPGAs, and/or CPUs in various compute states. Some workloads rely on extensive troves of data. Other workloads, including Autonomous Driving, run through phases where compute requirements change drastically. The ideal solution for users enables them to schedule tasks seamlessly — without disrupting their co-workers’ jobs — all the while changing compute requirements as needed. The ideal solution for IT administrators delivers complete flexibility, with infrastructure 100 percent utilized to maximize ROI securely and with full traceability.
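To make the utilization argument concrete, here is a minimal sketch comparing siloed and pooled sizing for workloads whose demand changes by phase. The demand figures and function names are hypothetical, chosen only for illustration; they are not measurements from any real deployment.

```python
# Hypothetical GPU demand per phase for two teams (illustrative numbers only).
demand = {
    "autonomous_driving": [8, 2, 6, 1],
    "precision_medicine": [1, 7, 2, 6],
}


def combined_demand(demand):
    """Total GPUs needed in each phase across all workloads."""
    return [sum(phase) for phase in zip(*demand.values())]


def siloed_capacity(demand):
    """Each workload gets dedicated hardware sized for its own peak."""
    return sum(max(phases) for phases in demand.values())


def pooled_capacity(demand):
    """One shared pool sized for the peak of the combined demand."""
    return max(combined_demand(demand))


def utilization(demand, capacity):
    """Average fraction of capacity in use across all phases."""
    combined = combined_demand(demand)
    return sum(combined) / (capacity * len(combined))


silo = siloed_capacity(demand)
pool = pooled_capacity(demand)
print(f"siloed: {silo} GPUs, utilization {utilization(demand, silo):.0%}")
print(f"pooled: {pool} GPUs, utilization {utilization(demand, pool):.0%}")
```

With these made-up numbers the peaks of the two workloads fall in different phases, so a shared pool needs fewer GPUs than two dedicated silos and keeps them busier. Real savings depend entirely on how much the demand peaks actually overlap.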

Time for a Revolution?

The 4th Industrial Revolution, as described by the World Economic Forum, is the blurring of lines between physical, digital, and biological systems. Integral to blurring the lines is the increasingly pervasive “As-A-Service” model. The desired outcome of this model is to bring agility (and performance) to workloads independent of the underlying hardware. This delivers a user experience where no understanding of the formal “plumbing” of the infrastructure is required. Whether performing hardware-in-the-loop testing for Autonomous Driving or analyzing pharmacogenomics in Precision Medicine, the underlying hardware simply works with high performance. The abstraction of the hardware and infrastructure means that the user interface is all the user needs to understand.

Data Center Clouds?

Key to the blurring of lines is allowing a wide variety of jobs to be performed on demand, seamlessly and with high performance, regardless of where the actual infrastructure and data are located. To achieve this, on-premises clouds are composed of a handful of components, including:

◉ A User Interface: The user interface makes the digital become real. Bright Computing, for example, provides a user interface that is in use today by many Dell customers and is a core component of Dell EMC Ready Solutions.

◉ A Scheduler: The job scheduler is responsible for queuing jobs on the infrastructure and must work in harmony with provisioning and the user interface to ensure the right hardware is available at the right time for each job.

◉ Stateless to stateful storage management: A secure way to navigate stateless containers in a stateful storage world is required.

◉ Storage provisioner: Lastly, storage must actually be provisioned and de-provisioned on demand, which is done via the Container Storage Interface (CSI).

The Cloud Window to the World

Comprehensive automation and standardization are key to taking the complexity out of provisioning, monitoring, and managing clustered IT infrastructure. As an example, Bright Computing focuses on automation that builds on-premises and hybrid cloud computing infrastructure that simultaneously hosts different types of workloads while dynamically sharing system resources between them. With Bright, your computing infrastructure is provisioned from bare metal: networking, security, DNS, and user directories are set up; workload schedulers for HPC and Kubernetes (K8s) are installed and configured; and a single interface for monitoring overall system resource utilization and health is provided, all automatically. An intuitive HTML5 GUI provides a comprehensive management console, complete with usage and system reports, alongside a powerful command line interface for batch programming, bringing simplicity to flexible infrastructure management. Your environment can also be extended automatically to AWS, Azure, and hybrid clouds, all for the ultimate in workload agility: a dynamically managed, seamless hybrid cloud.

Scheduled for Success

One of the most significant challenges of a truly dynamic and flexible on-demand computing environment is sharing resources between jobs that are under the control of different workload managers. For example, if you want to run HPC jobs under Slurm or PBS Pro and containers on the same shared infrastructure under K8s, you have a problem: Slurm and PBS Pro do not coordinate resource utilization with each other, much less with K8s. As a result, resources must be split manually, which creates silos that need to be managed separately. Application resource limitations become common and add to the management headache.

Bright software addresses this issue by monitoring the demand and capacity of resources assigned across workload managers and dynamically reassigning resources between them based on policy. Likewise, it adds cloud resources to on-premises infrastructure as they become available for some or all workload managers and their jobs.
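As a rough illustration of what policy-based reassignment between workload managers could look like, the sketch below moves spare nodes from managers with no queue to managers with pending work. This is not Bright's actual algorithm: the `Manager` fields, the `min_nodes` policy floor, and the `rebalance` function are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Manager:
    name: str       # workload manager, e.g. "slurm" or "k8s"
    nodes: int      # nodes currently assigned to this manager
    busy: int       # nodes running jobs right now
    pending: int    # extra nodes its queued jobs are waiting for
    min_nodes: int  # hypothetical policy floor: never shrink below this


def spare_nodes(m):
    """Nodes a manager can give up without touching running jobs or its floor."""
    return max(0, m.nodes - max(m.busy, m.min_nodes))


def rebalance(managers):
    """Move spare nodes to managers with queued work, neediest first.

    Updates the managers in place and returns a list of
    (donor, receiver, node_count) moves.
    """
    moves = []
    receivers = sorted((m for m in managers if m.pending > 0),
                       key=lambda m: m.pending, reverse=True)
    donors = [m for m in managers if m.pending == 0]
    for receiver in receivers:
        for donor in donors:
            count = min(spare_nodes(donor), receiver.pending)
            if count == 0:
                continue
            donor.nodes -= count
            receiver.nodes += count
            receiver.pending -= count
            moves.append((donor.name, receiver.name, count))
    return moves


# Hypothetical cluster state: Slurm has idle nodes, K8s and PBS Pro have queues.
slurm = Manager("slurm", nodes=10, busy=4, pending=0, min_nodes=2)
k8s = Manager("k8s", nodes=6, busy=6, pending=4, min_nodes=2)
pbs = Manager("pbs", nodes=4, busy=4, pending=3, min_nodes=1)

moves = rebalance([slurm, k8s, pbs])
for donor, receiver, count in moves:
    print(f"move {count} node(s) from {donor} to {receiver}")
```

A real policy engine would also weigh priorities, drain times, and cloud bursting costs; the point here is only that one component sees demand and capacity across all managers, which none of the schedulers can do on its own.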

How to Schedule?

Schedulers are a critical element of an agile infrastructure. Schedulers tend to be vertical-specific: some industries love K8s, while others are very Slurm- or PBS Pro-centric. Given the recent rise of K8s for workload movement, workload agility, and virtualized computing, we will focus on this scheduler. It is important, however, that your solution supports multiple schedulers. Bright Computing, for example, works with all the major schedulers mentioned above and can handle them all in tandem.

K8s focuses on bringing order to the container universe. K8s supports containers of many different flavors, Docker being the most common. Containers constrain the host resources each workload can consume, making the sharing of resources possible. K8s orchestrates the containers among the hosts to share the resources seamlessly. The advent of NVIDIA’s GPU Cloud catalog of AI containers, DockerHub, and other sharing vehicles for workload containers has certainly accelerated container usage for quite a few different workloads. The ability to centralize images, manage resources, and increase repeatability of infrastructure is the story of the rise of containers and the rise of K8s. As such, the in-cloud and on-premises capability of scheduling jobs with K8s is one way we are achieving workload agility.
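To show what scheduling a containerized job with K8s can look like in practice, here is a minimal sketch that builds a Job manifest requesting GPUs. The job name, image, and command are hypothetical placeholders, and the `nvidia.com/gpu` resource assumes the NVIDIA device plugin is installed on the cluster.

```python
import json


def gpu_job_manifest(name, image, command, gpus):
    """Build a Kubernetes batch/v1 Job manifest that requests `gpus` GPUs."""
    return {
        "apiVersion": "batch/v1",
        "kind": "Job",
        "metadata": {"name": name},
        "spec": {
            "backoffLimit": 0,  # do not retry a failed run
            "template": {
                "spec": {
                    "restartPolicy": "Never",
                    "containers": [{
                        "name": name,
                        "image": image,
                        "command": command,
                        # K8s only places the pod on a node that can
                        # supply this many GPUs.
                        "resources": {"limits": {"nvidia.com/gpu": gpus}},
                    }],
                }
            },
        },
    }


manifest = gpu_job_manifest(
    name="train-demo",
    image="registry.example.com/ai/train:latest",  # hypothetical image
    command=["python", "train.py"],                # hypothetical entry point
    gpus=2,
)
manifest_json = json.dumps(manifest, indent=2)
print(manifest_json)
```

Saved to a file, the JSON output can be submitted with `kubectl apply -f`, since kubectl accepts JSON as well as YAML. The user states what the job needs, and K8s decides which host runs it.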

A Clever Containment Strategy

Helping K8s bring order to storage infrastructure is the job of the Container Storage Interface (CSI). The CSI is a standard interface through which storage plugins (drivers) expose storage systems to K8s, and it helps bring authentication, transparency, and auditing to stateful storage as it’s used by stateless containers. The beauty of the CSI and K8s when combined is that they provide a mechanism to leverage existing infrastructure in an agile way. Dell Technologies recently released an Isilon CSI driver for K8s.
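From the user's side, CSI-backed storage is typically requested through a PersistentVolumeClaim that names a StorageClass served by the driver. The sketch below builds such a claim and mounts it into a pod spec. The StorageClass name `isilon`, the claim name, the size, the image, and the `ReadWriteMany` access mode are assumptions for illustration; the names and modes available on a real cluster depend on how the driver was installed.

```python
import copy
import json


def pvc_manifest(name, storage_class, size):
    """Build a PersistentVolumeClaim that a CSI driver can satisfy on demand."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteMany"],   # assumed: shared file storage
            "storageClassName": storage_class,  # selects the CSI driver
            "resources": {"requests": {"storage": size}},
        },
    }


def mount_claim(pod_spec, claim_name, mount_path):
    """Return a copy of pod_spec with the claim mounted in every container."""
    spec = copy.deepcopy(pod_spec)
    spec.setdefault("volumes", []).append(
        {"name": claim_name,
         "persistentVolumeClaim": {"claimName": claim_name}})
    for container in spec["containers"]:
        container.setdefault("volumeMounts", []).append(
            {"name": claim_name, "mountPath": mount_path})
    return spec


# Hypothetical names throughout.
pvc = pvc_manifest("shared-data", storage_class="isilon", size="100Gi")
pod_spec = {"containers": [
    {"name": "worker", "image": "registry.example.com/ai/train:latest"},
]}
mounted = mount_claim(pod_spec, "shared-data", "/data")

print(json.dumps(pvc, indent=2))
print(json.dumps(mounted, indent=2))
```

The container itself stays stateless: it only sees a directory at `/data`, while the claim and the driver behind it handle provisioning and, when the claim is deleted, de-provisioning.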

The Sky’s the Limit

K8s is becoming commonplace, and Bright Computing makes deploying and managing K8s easier. Workloads can leverage any combination of available FPGA, GPU, or CPU compute resources, whether on public or on-prem clouds; applications are made portable and secured within containers, with K8s managing workload movement; and centralized and secured storage is available and accessed via application-native protocols, including SMB and NFS. Bright’s single user interface makes deployment and overall management a snap.

All Together

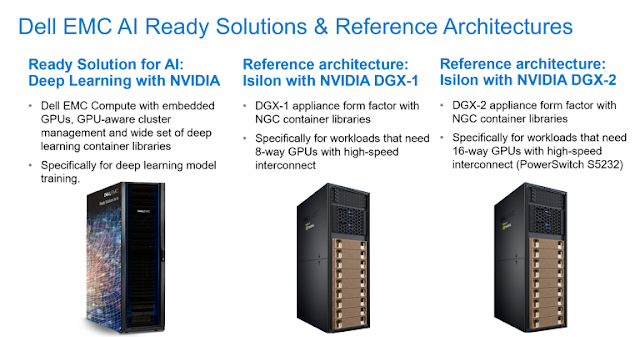

Today the partnership of Dell Technologies and Bright Computing offers a seamless way to effectively schedule jobs, helping eliminate data center silos and leading to workload agility. We have a number of offerings on the market today, ranging from reference architectures to Ready Solutions such as HPC Ready Solution and AI Ready Solution. These solutions are also assembled regularly for customer workloads such as Hardware-in-the-Loop (HIL), Software-in-the-Loop (SIL), simulation, and re-simulation for automotive Autonomous Driving.
