There are now 14 reasons why single socket servers could rule the future. Last April I published a paper on The Next Platform entitled “Why Single Socket Servers Could Rule the Future,” and I thought I’d provide an updated view now that new products have come to market and we have heard from many customers on this journey.

The original top 10 list is shown below:

1. More than enough cores per socket and trending higher

2. Replacement of underutilized 2S servers

3. Easier to hit binary channels of memory, and thus binary memory boundaries (128, 256, 512…)

4. Lower cost for resiliency clustering (fewer CPUs, less memory…)

5. Better software licensing cost for some models

6. Avoid NUMA performance hit – IO and Memory

7. Power density smearing in data center to avoid hot spots

8. Repurpose NUMA pins for more channels: DDRx or PCIe or future buses (CXL, Gen-Z)

9. Enables better NVMe direct drive connect without PCIe switches (OK, I’m cheating to get to 10, as this is a result of #8)

10. Gartner agrees and published a paper.
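To make item 3 concrete, here is a minimal sketch of the capacity arithmetic, assuming every channel is populated with one identical DIMM. The 8-channel socket matches AMD’s second-generation EPYC; the 16 GB DIMM size and the 6-channel, 2-socket comparison are hypothetical choices for illustration.

```python
def memory_capacity_gb(sockets: int, channels_per_socket: int, dimm_gb: int,
                       dimms_per_channel: int = 1) -> int:
    """Total installed memory when every channel is populated evenly."""
    return sockets * channels_per_socket * dimms_per_channel * dimm_gb


def is_binary_boundary(capacity_gb: int) -> bool:
    """True if the capacity is a power of two (128, 256, 512, ...)."""
    return capacity_gb > 0 and (capacity_gb & (capacity_gb - 1)) == 0


# Hypothetical configurations: 1 DIMM per channel, 16 GB DIMMs.
configs = {
    "1S, 8 channels": (1, 8),
    "2S, 6 channels each": (2, 6),
}

for name, (sockets, channels) in configs.items():
    cap = memory_capacity_gb(sockets, channels, dimm_gb=16)
    print(f"{name}: {cap} GB, binary boundary: {is_binary_boundary(cap)}")
```

Under these assumptions the 8-channel socket lands on 128 GB, while twelve DIMMs across two 6-channel sockets land on 192 GB, which is not a binary boundary.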

Since the original article, I’ve had many conversations with customers and gained some additional insights. Plus, we now have a rich single socket processor that can enable these tenets: AMD’s second-generation EPYC processor, codenamed ROME.

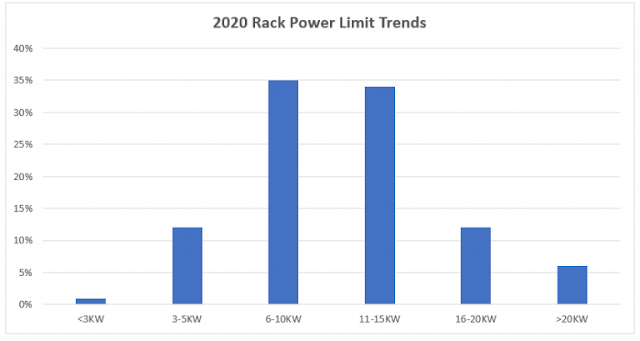

So what else have we learned? First, from a customer perspective, rack power limits today are fundamentally not changing – or at least not changing very fast. From a worldwide perspective surveying customers, rack power trends are shown below:


These numbers are alarming when you consider that CPUs and GPUs are heading toward 300 watts and beyond. While not everyone adopts the highest-end CPUs and GPUs, when these devices shift toward higher power they pull the mainstream sweet spot up with them, because the whole distribution of parts shifts upward. Then factor in the move to DDR5 and more DDR channels, PCIe Gen4/5 and more lanes, 100G+ Ethernet, and increasing NVMe adoption, and the rack power problem is back with gusto. Customers are facing some critical decisions: (1) accept the rise in server power and cut the number of servers per rack, (2) shift to lower-power servers to keep the node count, (3) increase data center rack power and the accompanying cooling, or (4) move to a colo or the public cloud, which alone won’t solve the problem because those providers also have to deal with growing rack power. With computational demand rising, driven by data and enabled by AI/ML/DL, this situation is not going to get better. Adopting 1U and 2U single socket servers can greatly reduce per-node power and thus help relieve the rack power problem.
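The rack power squeeze comes down to simple division. The sketch below uses a hypothetical 10 kW rack budget and hypothetical per-node power figures (not the survey data) to count how many nodes fit under both the power budget and the rack’s physical space.

```python
import math


def servers_per_rack(rack_budget_w: float, node_power_w: float,
                     rack_units: int = 42, node_height_u: int = 1) -> int:
    """Nodes that fit within both the rack power budget and the rack's space."""
    by_power = math.floor(rack_budget_w / node_power_w)  # power-limited count
    by_space = rack_units // node_height_u               # space-limited count
    return min(by_power, by_space)


# Hypothetical figures for illustration only (not survey data).
RACK_BUDGET_W = 10_000
for label, node_w in [("2S node", 700), ("1S node", 450)]:
    print(label, servers_per_rack(RACK_BUDGET_W, node_w))
```

With these assumed numbers, power rather than space is the binding limit: 14 of the 700 W nodes fit, versus 22 of the 450 W nodes.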

Power problems don’t just affect the data center; they are present at the edge too. As the ever-increasing number of IoT and IIoT devices creates more data at the edge, we will need capable computing there to analyze and filter that data before results are sent to the data center. For all the reasons in the paragraphs above and below, edge computers will benefit from rich single socket servers. These servers will need to be highly power efficient, provide the performance required to handle data in real time, and, in some cases, support Domain Specific Architectures (DSAs) such as GPUs, FPGAs, and AI accelerators to handle IoT/IIoT workloads. These workloads include data collection, sorting, filtering, and analytics, control systems for manufacturing, and AI/ML/DL. The most popular edge servers will differ from their data center counterparts by being smaller. In many situations, edge servers also need to be ruggedized to operate at extended temperatures and in harsh environmental conditions. Data center servers typically support a maximum temperature of 25-35°C, while edge servers need to be designed for warehouse and factory environments (25-55°C maximum) and harsh environments (55-70°C maximum). When you reduce the compute complex from 2 processors and 24-32 DIMMs to 1 processor with 12-16 DIMMs, you can reinvent what a server looks like and meet the needs of the edge.

Another interesting observation and concern raised by customers is overall platform cost. Over the last few years, CPU and DRAM prices have risen. Many customers want cost parity from one generation to the next while still getting Y% higher performance: Moore’s Law at work. But as CPUs grew in capability (cores and cost), they added more DDR channels, which were needed to feed the additional cores. For the best performance you must populate 1 DIMM per channel, which forced customers to install more memory. Rising CPU prices, combined with the additional DRAM required, broke generation-to-generation cost parity. In comes the rich 1-socket server: at the system level you can now buy fewer DIMMs and CPUs, saving cost and power at the node level without trading off performance.
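A rough cost model shows why dropping a socket helps even when the single CPU is pricier. This is a sketch only: the `Platform` class and every price in it are hypothetical, and it counts just CPUs and DIMMs at 1 DIMM per channel, ignoring chassis, storage, and networking.

```python
from dataclasses import dataclass


@dataclass
class Platform:
    sockets: int
    channels_per_socket: int
    cpu_price: float   # hypothetical per-CPU price
    dimm_price: float  # hypothetical per-DIMM price

    def dimm_count(self, dimms_per_channel: int = 1) -> int:
        # Populate every channel so each core gets full memory bandwidth.
        return self.sockets * self.channels_per_socket * dimms_per_channel

    def compute_cost(self) -> float:
        # CPU plus memory cost only; everything else is ignored.
        return self.sockets * self.cpu_price + self.dimm_count() * self.dimm_price


two_socket = Platform(sockets=2, channels_per_socket=8, cpu_price=2000, dimm_price=300)
one_socket = Platform(sockets=1, channels_per_socket=8, cpu_price=3000, dimm_price=300)
print(two_socket.compute_cost(), one_socket.compute_cost())
```

With these made-up prices, the 2-socket build needs 16 DIMMs and comes to 8,800 versus 5,400 for the 8-DIMM single socket build, even though its one CPU costs 50% more.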

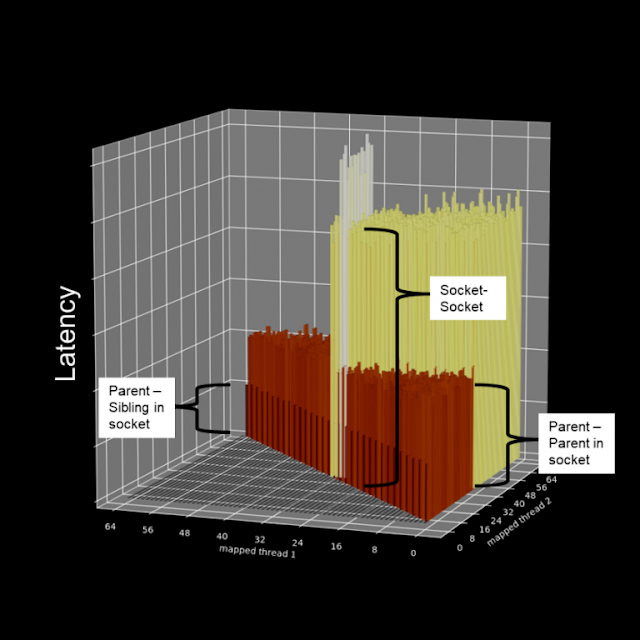

The last point customers have shared with me is about complexity reduction. Many said they had spent weeks chasing what they believed was a networking issue, only to find it was the 2-socket IO NUMA challenge I highlighted in the last paper. Those customers are coming back and letting us know. By adopting 1-socket servers, buyers can reduce application and workload complexity, because IT admins and application developers no longer have to become experts on IO and memory NUMA. In the last paper, I showed the impact of IO NUMA on bandwidth and latency (up to 35% bandwidth degradation and a 75% latency increase).

Below is a view of memory NUMA on a standard 2-socket server. We start with core0 and sweep across all cores, measuring data-sharing latency. We then go to core1 and again sweep across all cores, and so on until all pairs of cores have been measured. The lowest bars are L1/L2 sharing between a core and its sibling hyperthread (HT); the next level up is cores within a socket sharing L3; the next level up is sharing across sockets. To be honest, we haven’t yet determined what causes the few highest bars, but I think you get the point: it’s complicated and can cause variability.
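The sweep described above visits every ordered pair of cores and groups the results by where the data is shared. The sketch below reproduces only the pair enumeration and the three-tier classification, on a hypothetical topology of 2 sockets, 4 cores per socket, and 2 threads per core. It does not measure latency; a real benchmark would pin two threads to each pair and bounce a shared cache line between them.

```python
from collections import defaultdict
from itertools import permutations


def build_topology(sockets=2, cores_per_socket=4, threads_per_core=2):
    """Map logical CPU -> (socket, physical core).

    Hypothetical numbering: sibling threads are N and N + sockets*cores_per_socket,
    a common but not universal Linux scheme.
    """
    total_cores = sockets * cores_per_socket
    topo = {}
    for t in range(threads_per_core):
        for core in range(total_cores):
            topo[t * total_cores + core] = (core // cores_per_socket, core)
    return topo


def sharing_level(a, b, topo):
    sock_a, core_a = topo[a]
    sock_b, core_b = topo[b]
    if core_a == core_b:
        return "SMT sibling (L1/L2)"
    if sock_a == sock_b:
        return "same socket (L3)"
    return "cross socket"


def sweep(topo):
    """Visit every ordered pair: core0 against all others, then core1, and so on."""
    groups = defaultdict(list)
    for a, b in permutations(sorted(topo), 2):
        groups[sharing_level(a, b, topo)].append((a, b))
    return groups


for level, pairs in sweep(build_topology()).items():
    print(f"{level}: {len(pairs)} pairs")
```

Even this toy 16-thread machine yields 240 ordered pairs across three latency tiers, which is why the real chart has so many distinct bands.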

By moving to a single socket, IT admins and developers no longer need to become experts on affinity mapping, pinning applications to hot cores, NUMA control, and so on, which reduces complexity across the board. At the end of the day, this helps enable application determinism, which is becoming critical in the software-defined data center for things like SDS, SDN, edge computing, CDN, NFV, and so on.
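For a sense of the tuning work a 2-socket server demands, here is a minimal Linux-only sketch of one such chore: pinning a process to the CPUs on the same NUMA node as its NIC. It assumes the standard sysfs files `/sys/class/net/<iface>/device/numa_node` and `/sys/devices/system/node/node<N>/cpulist`; the helper names are hypothetical. On a single socket server configured as one NUMA node, this step largely disappears.

```python
import os
from pathlib import Path


def parse_cpulist(text: str) -> set[int]:
    """Parse a Linux cpulist string such as '0-15,32-47' into CPU ids."""
    cpus = set()
    for part in text.strip().split(","):
        if not part:
            continue  # empty list, e.g. a memory-only node
        if "-" in part:
            lo, hi = part.split("-")
            cpus.update(range(int(lo), int(hi) + 1))
        else:
            cpus.add(int(part))
    return cpus


def nic_local_cpus(iface: str) -> set[int]:
    """CPUs on the NIC's NUMA node; the current allowed set if none is reported."""
    node = int(Path(f"/sys/class/net/{iface}/device/numa_node").read_text())
    if node < 0:  # -1 means the kernel reports no NUMA locality for this device
        return os.sched_getaffinity(0)
    cpulist = Path(f"/sys/devices/system/node/node{node}/cpulist").read_text()
    return parse_cpulist(cpulist)


def pin_to_nic(iface: str) -> None:
    """Restrict the current process to CPUs local to the given NIC."""
    os.sched_setaffinity(0, nic_local_cpus(iface))
```

Usage would be something like `pin_to_nic("eth0")`, where `eth0` is a hypothetical interface name; the same idea must then be repeated for memory placement, interrupts, and storage.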

So, what new advantages of 1-socket servers have we uncovered?

1. Avoid (or delay) the rack power challenges that are looming, which could reduce the number of servers per rack.

2. Prepare for your Edge Computing needs.

3. Better server cost structure to enable parity generation to generation.

4. Complexity reduction by not making IT admins and application developers experts on IO and memory NUMA, while saving the networking admin from chasing ghosts.

To better support you on your digital transformation journey, we updated our PowerEdge portfolio of 1-socket optimized servers using the latest and greatest features in the AMD ROME CPU: the PowerEdge R6515 Rack Server and the PowerEdge R7515 Rack Server, as shown below.
