Ensuring the stability and reliability of servers is critical in today’s digital-focused business landscape. However, the complexity of modern infrastructures and the unpredictability of real-world scenarios pose a significant challenge to engineers. Imagine a critical sales system crashing unexpectedly due to a surge in user traffic, leaving customers stranded and business teams reeling from the aftermath.

Traditional server performance testing often falls short of uncovering vulnerabilities lurking deep within complex infrastructures. While server tasks may perform well under controlled testing conditions, they may fail when faced with the unpredictability of everyday operations. Sudden spikes in user activity, network failures or software glitches can trigger system outages, resulting in downtime, revenue loss and damage to brand reputation.

Embracing the Problem as a Solution

This is where the Database Resiliency Engineering product emerges as an unconventional solution, offering a proactive approach to identifying weaknesses and mitigating vulnerabilities for Dell’s production and non-production servers via chaos experiments tailored for database systems. Much like stress-testing a bridge to ensure it can withstand the weight of heavy traffic, the experiment deliberately exposes servers to controlled instances of chaos, simulating abnormal conditions and outage scenarios to understand their strengths and vulnerabilities.

Imagine a scenario where a bridge is constructed without undergoing stress-testing. Everything seems fine during normal use, until one day an unusually heavy load, such as a convoy of trucks or a sudden natural disaster, puts immense pressure on the bridge and its hidden structural weaknesses become apparent. Luckily for us, most infrastructure undergoes rigorous testing before being opened to the public. Similarly, Dell’s Chaos Experiment tool lets us test the boundaries of our servers so we can identify potential weaknesses and reinforce critical areas proactively.

Calculated Approach to Unleashing Chaos

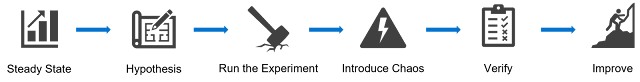

Performing a chaos experiment is not as simple as unleashing mayhem on our systems and watching it unfold. The goal is to fortify our systems through iterative improvements, following a multi-step approach: a defined hypothesis, carefully executed chaos scenarios and a comprehensive improvement plan based on server responses.

Each test begins with understanding the server’s steady state, its baseline performance under optimal conditions. This is our starting point, providing a reference against which we measure the impacts of the experiments. Our database engineers will then develop hypotheses about potential weak spots, which serve as the guide to the various attacks they will perform on the server. With a click of a button, the tool introduces the selected disruptions on the server as our monitors carefully track its response every step of the way.

Chaos takes many forms, from resource consumption to network disruptions. The tool allows us to manipulate these variables, simulating real-world chaos scenarios. While the experiment is running, we watch system behavior by tracking the monitors in place, reviewing the incoming logs and noting any deviations from expected behavior. With these insights, we can form improvement plans for enhancing system resilience, optimizing server resource allocation and fortifying against potential vulnerabilities.
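The workflow described above (steady state, hypothesis, disruption, comparison) can be sketched in a few lines of Python. This is only an illustration of the general pattern, not the tool's actual implementation: the names `Hypothesis`, `Result`, `run_experiment`, `inject`, `rollback` and `measure` are all hypothetical, and a relative-deviation threshold is just one assumed way to express a hypothesis.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Callable, Sequence


@dataclass
class Hypothesis:
    """Hypothetical claim: a metric stays within `tolerance` of its baseline."""
    metric: str
    tolerance: float  # allowed relative deviation, e.g. 0.20 means 20%


@dataclass
class Result:
    baseline: float
    observed: float
    deviation: float
    hypothesis_held: bool


def run_experiment(
    baseline_samples: Sequence[float],
    inject: Callable[[], None],
    rollback: Callable[[], None],
    measure: Callable[[], float],
    hypothesis: Hypothesis,
    samples: int = 5,
) -> Result:
    # 1. Steady state: average the metric under normal conditions.
    #    (Assumes a non-zero baseline so the relative deviation is defined.)
    baseline = mean(baseline_samples)
    # 2. Introduce the disruption, then sample the metric while it is active.
    inject()
    try:
        observed = mean(measure() for _ in range(samples))
    finally:
        # 3. Always undo the disruption, even if measurement fails.
        rollback()
    # 4. Compare against the baseline and evaluate the hypothesis.
    deviation = abs(observed - baseline) / baseline
    return Result(baseline, observed, deviation, deviation <= hypothesis.tolerance)
```

A failed hypothesis is the useful outcome here: it points to the weak spot that the improvement plan should address before the next iteration.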

Understanding Chaos’s Many Faces

The tool enables us to perform three different types of experiments, each of which allows us to alter different variables or situations:

◉ Resource consumption. The amount of resources an operation consumes affects a server’s performance. By intentionally increasing resource consumption, such as memory or CPU utilization, we can test the performance and responsiveness of critical processes. Ramping up CPU utilization may lead to increased processing times for requests, while elevated memory usage could result in slower data retrieval or system crashes.

◉ System states. Just as the weather outside can change in an instant, our servers can experience sudden changes in the system environment causing unexpected behaviors. A Time Travel test alters the clock time on servers, disrupting scheduled tasks or triggering unwanted processes. A Process Killer experiment overloads targeted processes with repeated signals, simulating scenarios where certain processes become unresponsive or fail under stress.

◉ Network conditions. Stable communication between components is vital for server operations to perform optimally. Altering network conditions allows us to learn how the system responds to different communication challenges. A Blackhole test deliberately shuts off communications between components, simulating network failures or isolation scenarios. A Latency test introduces delays between components, mimicking high network congestion or degraded connectivity.
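To make the network experiments more concrete, the sketch below simulates the two network faults at the application level by wrapping a function call. It is an assumption-laden illustration only: the real tool presumably acts at the host or network layer, and `with_latency`, `with_blackhole` and `BlackholeError` are hypothetical names introduced here.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class BlackholeError(ConnectionError):
    """Hypothetical error raised when simulated traffic is dropped."""


def with_latency(
    call: Callable[..., T],
    delay_seconds: float,
    sleep: Callable[[float], None] = time.sleep,
) -> Callable[..., T]:
    """Return a wrapper that delays every call, mimicking a Latency test."""
    def wrapped(*args, **kwargs):
        sleep(delay_seconds)  # injected delay before the real call runs
        return call(*args, **kwargs)
    return wrapped


def with_blackhole(call: Callable[..., T]) -> Callable[..., T]:
    """Return a wrapper that never reaches `call`, mimicking a Blackhole test."""
    def wrapped(*args, **kwargs):
        # The wrapped dependency is intentionally unreachable.
        raise BlackholeError("simulated blackhole: traffic dropped")
    return wrapped
```

Wrapping a database client call with `with_latency(query, 0.5)`, for example, would show whether callers time out gracefully or pile up. The `sleep` parameter exists only so the delay can be replaced in tests.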

Building Resilience in a World of Uncertainties

The continuous cycle of testing, discovering and enhancing enables us to iteratively improve our capacity to withstand potential disruptions with each experiment. By addressing infrastructure vulnerabilities before they escalate into costly incidents, we prevent millions in potential revenue loss and allow our team members to focus more time on modernization activities instead of incident resolution tasks. Moreover, it gives our teams confidence that their infrastructure has been tried and tested against the challenges that may arise.

Embracing chaos as a solution underscores our understanding that chaos is not the end, but a means to achieving a stronger, more resilient infrastructure environment. Instead of reacting to the world’s unpredictability, we are bolstering our ability to adapt and thrive in an ever-evolving digital landscape.

Source: dell.com