Wherever you go, wherever you look, business is expanding to the edge. Retail, healthcare, agriculture, media and entertainment, manufacturing—every vertical is responding to business needs to lower costs and enable personalized experiences and products, fast. On the manufacturing floor, for instance, edge computing is now essential for process flow control, computer vision safety monitoring, supply management, quality assurance, predictive maintenance and more.

Industry analysts are taking note of the massive numbers of devices and associated volumes of data coming into play, suggesting that the majority of enterprise-generated data in the coming years will be created and processed outside data center or public cloud environments.

None of this is to say that traditional data centers are becoming irrelevant. Those will remain an essential strategic centerpiece of the end-to-end enterprise IT environment. What’s different is that many targeted data processing and analytics functions will move from the data center to the edge, where data that has immediate value is generated. This allows for near real-time analysis and response, without the latency and data transfer costs of the legacy centralized approach.

Having all these environments in play can raise a lot of questions for IT. How do you decide where to run different workloads? Determining the best execution venue (BEV) for a workload depends on several considerations. In the view of the analysts at 451 Research, part of S&P Global Market Intelligence, the most influential factors that organizations consider when determining BEV include performance requirements, network latency, data volumes, broadband access, security issues, regulatory compliance issues and the relative costs of edge vs. cloud deployments.

“Some enterprise workloads, such as customer relationship management and enterprise resource planning systems, may be best run in a centralized data center, where they share data with each other,” notes Christian Renaud, Research Director and author of a special report commissioned by Dell Technologies and Intel. “Other workloads, such as predictive maintenance, patient monitoring and physical security, may be best placed at the edge to enable automation and real-time data-driven decisions.”

Sometimes the decision on workload placement is a simple one. 451 Research notes that today’s enterprises have many traditional business applications that are relatively latency-insensitive or that the vendor requires to be hosted on its own infrastructure, such as SaaS applications. Such workloads will run in the core data center or on a public cloud. At the same time, enterprises have workloads that may be mission-critical and latency-sensitive, such as factory machine control or substation communications in an electric grid, which must be co-located near the device generating the data. These workloads will run at the edge. The same holds true for applications at remote locations that have limited, possibly unreliable, connectivity, such as oil rigs in the middle of the ocean. In those cases, computing at the edge becomes the default choice.

“These factors all come into play on a workload-by-workload basis in venue selection,” 451 Research says. “Security and cost, however, remain the most influential factors in venue selection by enterprises — even more so when the data in question is sensitive operational data.”
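The simple placement cases described above can be sketched as a small rule-based function. This is only an illustrative sketch, not a method from 451 Research or Dell Technologies: the `Workload` fields, the `Venue` names and the rule order are all assumptions, as is the choice to send vendor-hosted applications to the public cloud and everything else to the core. Security and cost, which the analysts call the most influential factors, are deliberately left out and would need their own weighting in any real assessment.

```python
from dataclasses import dataclass
from enum import Enum


class Venue(Enum):
    EDGE = "edge"
    CORE = "core data center"
    PUBLIC_CLOUD = "public cloud"


@dataclass
class Workload:
    # All fields are hypothetical simplifications of the factors in the text.
    name: str
    latency_sensitive: bool = False     # needs near real-time response
    reliable_connectivity: bool = True  # site has a dependable network link
    vendor_hosted: bool = False         # e.g. SaaS that must run on the vendor's infrastructure


def suggest_venue(w: Workload) -> Venue:
    """Return a first-pass venue suggestion for the simple cases only."""
    # Vendor-hosted applications run where the vendor hosts them
    # (assumed here to be a public cloud).
    if w.vendor_hosted:
        return Venue.PUBLIC_CLOUD
    # Latency-sensitive workloads sit near the device generating the data.
    if w.latency_sensitive:
        return Venue.EDGE
    # Remote sites with limited or unreliable connectivity default to the edge.
    if not w.reliable_connectivity:
        return Venue.EDGE
    # Latency-insensitive business applications stay central (assumed: the core).
    return Venue.CORE


if __name__ == "__main__":
    examples = [
        Workload("enterprise resource planning"),
        Workload("factory machine control", latency_sensitive=True),
        Workload("oil rig monitoring", reliable_connectivity=False),
        Workload("SaaS CRM", vendor_hosted=True),
    ]
    for w in examples:
        print(f"{w.name} -> {suggest_venue(w).value}")
```

Rules like these only settle the clear-cut workloads. Anything that falls between them still needs the workload-by-workload evaluation the analysts describe.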

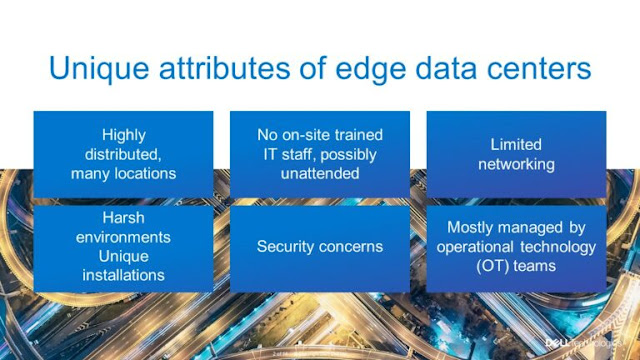

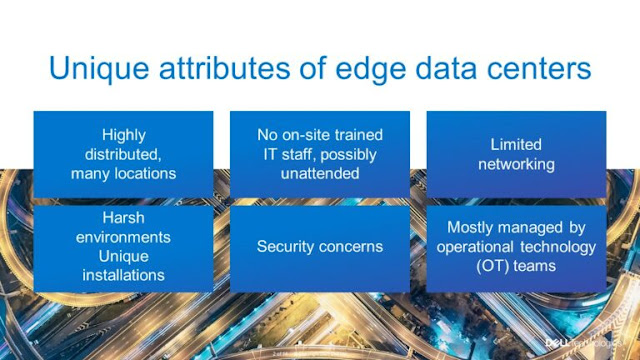

Sometimes, too, the decision revolves around the unique attributes of edge data centers, as summarized in this Dell Technologies slide.

As this slide suggests, the best execution venue might be driven by the limitations and special requirements of edge data centers.

This all suggests that an enterprise needs to build a strategy that guides best execution venue in this new world, where compute at the edge becomes a necessity.

Two methods have been suggested to address those challenges.

“Cloud Out” – In this approach, the edge is perceived as an extension of the cloud, sharing a similar operating environment, APIs and management. Typically promoted by hyperscale cloud providers, it treats the edge IT environment mainly as a way to deliver the collected data to a public cloud, with only essential processing performed at the edge.

“Edge Out” – In this approach, the center is the edge, and public clouds are targets where data with longer-term value can be stored, or where specific applications can be run. The determining software stack is an extension of the software stack designed for the edge OT environment.

Unfortunately, both these methods present challenges:

◉ The Cloud Out approach relies on a single public cloud, which limits data and workload mobility for the many organizations that leverage multiple public clouds.

◉ The Edge Out approach leverages an operating environment that was designed for OT and IoT environments, which cannot scale to larger environments, so enterprises that aspire to scale their edge-based solutions across processes and locations could find it limiting.

Moreover, both approaches ignore the core data center, which means that the edge environment run by the operational technology (OT) team is likely not aligned with existing IT architectures and processes. This exposes the organization to vulnerabilities and operational inefficiencies.

Fortunately, a third, balanced approach, Core to Edge, enables workload and data distribution between all three infrastructure environments: edge, core (including private cloud) and multiple public clouds. Most enterprises still view their main data center as the core location of their applications and data, and as the place where their IT processes and policies originated and were implemented, so it makes sense to align the edge infrastructure with the core data center environment. Doing so enables IT and OT together to build a scalable, agile, private cloud-based infrastructure with consistent management and operations. Such alignment makes workload and data migration seamless, so the organization can respond more readily to the needs of the business.

Ultimately, a balanced strategy takes a holistic approach to the edge-core-public cloud continuum and decisions related to best execution venue. In many cases, this balanced strategy must include integration with multiple clouds, because some workloads are better placed in one cloud than another.

At the same time, this new approach to placing workloads across a continuum will be unified by common management tools and policies that enable consistent orchestration, management and security, regardless of where a workload runs or data resides.

Key takeaways

When you’re determining the best execution venue for an enterprise workload, the CIO’s consideration isn’t a this-versus-that debate. It’s a this-plus-that exploration, one that considers both the specific characteristics of the workload and the unique attributes of computing at the edge, the core and the public cloud.

As 451 Research notes, “The range of applications and workloads across industries—each with unique cost, connectivity and performance characteristics—will mandate a continuum of compute and analysis at every step of the topology, from the edge to the cloud.”

Source: delltechnologies.com
