The world of analytics has been on a collision course with object storage for the last few years. In a recent conversation with a large financial company, we discussed the need to move to an object-based data lake to support its analytics stack.

Two major factors were being considered. First, the company is embracing cloud-native practices built on S3 storage. Second, many of the analytics applications in the company's stack now support object protocols for storage. As the conversation continued, it became clear that in 2021 the analytics community is fully embracing object data lakes.

When I started in the analytics community, the Hadoop Distributed File System (HDFS) was king of data lakes. Object storage was growing, but it was not yet widespread. Fast-forward just a couple of years, and the line between object- and file-based storage has blurred. Let's walk through the three top trends accelerating the adoption of object-based data lakes in the enterprise.

Analytics and microservices

One of the top trends advancing object storage in analytics is the emergence of microservices for hybrid cloud. The hybrid cloud approach to software engineering is fundamentally changing the way applications are both built and run. Analytics applications are not exempt from this change and may benefit more than other applications. Flexibility in where analytics applications can be deployed lets data teams bring the services to the data. The days of shifting petabytes of data around for analysis, with the high cost and longer development times that entails, are long gone.

Separation of compute and storage

The second trend in object storage for analytics is the need to decouple compute from storage. Data architects have made it clear that applications must offer the flexibility to separate compute from storage. The Hadoop community is adopting this shift with support for object storage through its S3 connectors, most notably S3A. The Splunk community joined the movement to decouple compute and storage with the 2018 announcement of Splunk SmartStore. SmartStore enables Splunk to keep recently accessed, hot data close to the compute while tiering warm and cold data to an S3 object store. Separating compute and storage drives down the cost of analytics applications and helps build multi-use data pipelines.
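The hot/warm split that SmartStore relies on can be illustrated with a small cache sitting in front of an object store. This is not Splunk's implementation; it is a minimal, hypothetical model that assumes a local cache sized by object count, least-recently-used eviction, and write-through uploads so the object store always holds the authoritative copy. `TieredStore` and its methods are illustrative names, and a plain dict stands in for an S3 bucket.

```python
from collections import OrderedDict


class TieredStore:
    """Hypothetical two-tier store: a bounded local cache in front of an object store.

    The object store (modeled here as a plain dict, not a real S3 client) is the
    system of record; the local cache holds only recently accessed objects.
    """

    def __init__(self, cache_capacity, object_store=None):
        self.cache_capacity = cache_capacity
        self.object_store = object_store if object_store is not None else {}
        self.cache = OrderedDict()  # key -> bytes, least to most recently used
        self.remote_fetches = 0     # reads that had to go to the object store

    def put(self, key, data):
        # Write through: the object store always receives a durable copy,
        # so evicting an object from the local cache never loses data.
        self.object_store[key] = data
        self._cache_insert(key, data)

    def get(self, key):
        if key in self.cache:
            # Hot path: serve from local storage and mark as most recently used.
            self.cache.move_to_end(key)
            return self.cache[key]
        # Cold path: fetch from the object store, then cache it near compute.
        data = self.object_store[key]  # raises KeyError if the object is missing
        self.remote_fetches += 1
        self._cache_insert(key, data)
        return data

    def _cache_insert(self, key, data):
        self.cache[key] = data
        self.cache.move_to_end(key)
        while len(self.cache) > self.cache_capacity:
            # Evict the least recently used object; it remains in the object store.
            self.cache.popitem(last=False)


# Usage: a cache that holds two objects locally.
store = TieredStore(cache_capacity=2)
store.put("bucket-1", b"...")
store.put("bucket-2", b"...")
store.put("bucket-3", b"...")  # evicts bucket-1 from the local cache
print(list(store.cache))       # ['bucket-2', 'bucket-3']
store.get("bucket-1")          # served from the object store, then cached again
print(store.remote_fetches)    # 1
```

In this model, every write lands in the object store first, so compute nodes can be resized or replaced without migrating data; only the local cache has to warm up again. That is the cost lever decoupling is meant to provide.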

Analytics anywhere and everywhere

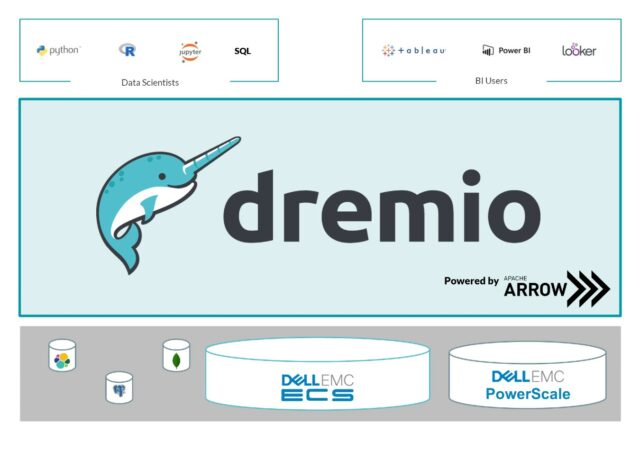

The final driver for object storage in analytics is the need for data teams to analyze data anywhere and everywhere. Complex file systems or applications should no longer be required for querying data. In a post-schema-on-read world, Dremio, the cloud data lake query engine, delivers ultra-fast queries and a self-service semantic layer that operate directly against data lake storage. Dremio eliminates the need to copy and move data into proprietary data warehouses or to create cubes, aggregation tables, or business intelligence extracts, providing flexibility and control for data architects and self-service for data consumers.
