One of the greatest challenges for innovative organizations wanting to stay on the leading edge is, frankly put, to get out of their own way.

Consider the Wellcome Sanger Institute, a British genomics and genetics research organization. The Sanger Institute assists companies leading scientific discovery and innovation around the world by providing reliable and performant access to biomedical research data.

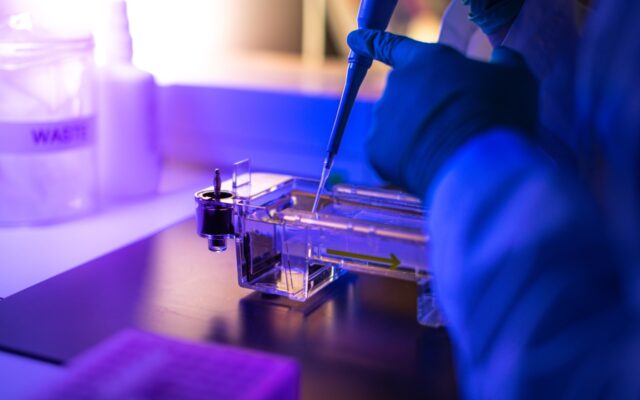

Biomedical research data is some of the most complex data on the planet. For example, sequencing human DNA requires tracking more than 6.4 billion base pairs. The human genome was first sequenced in 2003. Sequencing the genome was just the tip of the iceberg, as numerous discoveries made possible by genomics research have fundamentally changed the quality of life across the planet. A sequencing method known as next-generation sequencing (NGS) enables research and applications that were never before possible. Researchers are able to accelerate drug discovery, as we have recently seen with COVID-19 vaccine research. In the long term, clinical genomics will become more commonplace, enabling the practice of precision medicine.

Gene sequencing, like other biomedical research, is a large-scale processing challenge. The problem is that many organizations continue to implement the technology and architectures they are familiar with. Staying at the leading edge, however, requires letting go of “the way things are done.” By definition, innovation means thinking in new ways that supersede existing models and paradigms. In short, next-generation applications need next-generation technology.

And that’s what Sanger delivers to its partners.

The Problem of Large-Scale, Unstructured Data

Gene sequencing is similar to other applications that need to process large-scale, unstructured data. With sequencing, a common workflow is the analysis and identification of variants associated with a specific trait or population. Captured data is encoded into a FASTQ file. The FASTQ format was originally developed at the Sanger Institute and has since become the de facto standard for storing the output of high-throughput sequencing systems such as the Illumina Genome Analyzer.
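To make the format concrete, here is a minimal sketch of reading FASTQ records in Python. It assumes the common four-line layout (an `@` header, the sequence, a `+` separator, and a quality string) and the Sanger-style quality encoding, in which each quality character is its Phred score plus 33. The names `FastqRecord` and `parse_fastq` are illustrative and do not come from any Sanger or Dell tooling; production pipelines would typically rely on an established parser instead.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator, List


@dataclass
class FastqRecord:
    """One read: identifier, base sequence, and per-base quality string."""
    identifier: str
    sequence: str
    quality: str

    def phred_scores(self, offset: int = 33) -> List[int]:
        # Sanger-style encoding: quality character = Phred score + 33.
        return [ord(ch) - offset for ch in self.quality]


def parse_fastq(lines: Iterable[str]) -> Iterator[FastqRecord]:
    """Yield records from four-line FASTQ text (assumed layout, see above)."""
    # Drop blank lines and line endings, then walk the input four lines at a time.
    rows = [line.rstrip("\r\n") for line in lines if line.strip()]
    if len(rows) % 4 != 0:
        raise ValueError("expected a multiple of four non-blank lines")
    for i in range(0, len(rows), 4):
        header, sequence, separator, quality = rows[i:i + 4]
        if not header.startswith("@") or not separator.startswith("+"):
            raise ValueError(f"malformed record starting at line {i + 1}")
        if len(sequence) != len(quality):
            raise ValueError(f"sequence/quality length mismatch in {header}")
        yield FastqRecord(header[1:], sequence, quality)


# Hypothetical sample read, used only to exercise the parser.
SAMPLE = """@read1
GATTACA
+
IIIIII#
"""

for record in parse_fastq(SAMPLE.splitlines()):
    print(record.identifier, record.sequence, record.phred_scores())
```

Grouping by position rather than scanning for `@` is deliberate: `@` is also a valid quality character, so a quality line can begin with it.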

A large-scale, unstructured data application like gene sequencing analyzes data in several stages. At each stage, data needs to be mapped and aligned so the varying workflows can access the data quickly and efficiently.

Secondary analysis is then performed for mapping, alignment, and variant calling. In the tertiary analysis, researchers then directly apply biological, clinical, and lab data to stored genome sequencing data.

The overall challenge researchers face when working with large-scale, unstructured data is enabling rapid analysis at all stages of the process. Three common ways to improve analysis speed are to add more processing cores, rewrite analysis software to be more efficient, and utilize hardware acceleration. The Sanger Institute implemented cloud and on-premises GPU-based hardware accelerators for higher-performance computing during initial analysis of genome data, while high-performance computing clusters sped up the entire analysis chain.

Core to being able to deliver rapid analysis is the fast and efficient management of petabytes of data in a cost-effective manner. The data life cycle for sequencing is to generate the data, analyze it, and then archive it. For the highest efficiency, managing the data life cycle needs to be as seamless and hands-off as possible.

Unstructured Data Solutions Powered by High-performance, Scale-Out Storage

To address varying storage performance requirements, Dell Technologies offers All-Flash arrays like the Dell EMC PowerScale F600 and F200 All-Flash systems for fast access and high reliability, while hybrid arrays like Dell EMC Isilon H4500/H500/H5600/H600 and A200/A2000 are typically used for cost-effective storage and archiving. These devices offer performance, scalability, and high throughput, so they are never a bottleneck in a sequencing environment. PowerScale also features innovative OneFS technology, a software-defined architecture that enhances development agility, provides use case flexibility, and accelerates innovation.

With PowerScale, the Wellcome Sanger Institute has been able to scale and upgrade its storage without sacrificing simplicity. The migration-free design of PowerScale, with its auto-discover capabilities, means new nodes can be added in about a minute while legacy nodes can be decommissioned with no downtime. Auto balancing ensures that as storage scales out, there are no “hot spots” that can constrict processing. In this way, the Sanger Institute is able to introduce next-generation storage technology as it becomes available. In turn, its partners can immediately leverage higher levels of efficiency in their research.

PowerScale’s native support of the S3 protocol greatly simplifies management of petabytes of data. Sanger can access cluster file-based data as objects so that traditional NAS and emerging object storage can be used together transparently. The result is a cost-effective unstructured data storage solution with enhanced data-lake capabilities that enable file and object access on the same platform. Features include bucket and object operations, security implementations, and a single integrated management interface. In this way, all data can be simultaneously read and written through any protocol. Efficiency is improved through inline data reduction capabilities, as well as by eliminating the need to migrate and copy data to/from a secondary source.
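One way to picture file and object access on the same data is as a naming correspondence between the two views. The sketch below assumes that a bucket is backed by a directory on the cluster file system and that an object key is simply the file's path relative to that directory. The directory `/ifs/data/sequencing`, the file names, and both helper functions are hypothetical and shown only for illustration; this is not PowerScale's implementation, and the actual mapping rules should be taken from the OneFS S3 documentation.

```python
from pathlib import PurePosixPath


def file_path_to_object_key(bucket_root: str, file_path: str) -> str:
    """Return the object key for a file that lives under the bucket's directory."""
    # relative_to() raises ValueError if the file is not under bucket_root.
    return PurePosixPath(file_path).relative_to(PurePosixPath(bucket_root)).as_posix()


def object_key_to_file_path(bucket_root: str, key: str) -> str:
    """Return the file path that a given object key would correspond to."""
    key_path = PurePosixPath(key)
    # Reject keys that would escape the bucket's directory.
    if key_path.is_absolute() or ".." in key_path.parts:
        raise ValueError(f"key must be a relative path inside the bucket: {key!r}")
    return (PurePosixPath(bucket_root) / key_path).as_posix()


# Hypothetical bucket directory and file, for illustration only.
BUCKET_ROOT = "/ifs/data/sequencing"
nas_path = "/ifs/data/sequencing/run42/sample.fastq"

key = file_path_to_object_key(BUCKET_ROOT, nas_path)
print(key)
print(object_key_to_file_path(BUCKET_ROOT, key))
```

Under this assumption, a file written by an instrument over a NAS protocol and an object read by an analysis job over S3 are two names for the same data, which is why no copy or migration step is needed between them.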

There are many other benefits for the Wellcome Sanger Institute beyond higher performance and simplified data management. For example, flexible file and object access, combined with a software-defined architecture, means that data is available everywhere it needs to be in the analysis chain, extending from the edge to the cloud. Storage is also DevOps ready, meaning developers can utilize new Ansible and Kubernetes integrations as they become available. PowerScale is resilient as well: a system can sustain multiple node failures without disrupting operations, thus eliminating downtime.

Continuous Improvement

Especially important for driving efficiency forward is the ability of PowerScale to provide intelligent insights into data, infrastructure, and operations. Dell Technologies’ CloudIQ combines machine intelligence and human intelligence to give administrators the insights they need to efficiently manage their Dell EMC infrastructure. In addition, CloudIQ provides alerts about potential issues so IT can take quick action and minimize any disruption to service.

DataIQ is another important technology for maximizing efficiency when working with large-scale, unstructured data. Improving efficiency is an ongoing process. By providing a unified file system view of PowerScale, ECS, third-party platforms, and the cloud, DataIQ makes it possible to draw unique insights into how data is used and into overall storage system health. DataIQ also empowers users to identify, classify, and move data between heterogeneous storage systems and the cloud on demand.

The Wellcome Sanger Institute is helping to change the world. And they’ve achieved this by changing how they manage their data. PowerScale has enabled the Sanger Institute to unlock the potential within their partners’ research. True multiprotocol support allows them to access any data, anywhere from the edge to the cloud. Simple non-disruptive scaling allows them to optimize for ever-changing efficiency, bandwidth, and capacity needs. And intelligent insights into infrastructure and data enable them to continuously improve their operations.

Source: delltechnologies.com
