Dell EMC PowerEdge R7525 and AMD Instinct MI100

The drive to advance research in high performance computing (HPC) and artificial intelligence (AI) has accelerated the demand for computation at an exponential rate. The performance of HPC systems is more than doubling every two years, while the performance required to train AI models is doubling every 3-4 months.

In addition to advances in processor technology, architectural enhancements and compute accelerators are becoming the norm to meet the growing computational demands of HPC and AI. Specialized architectures and accelerators can help speed up application-specific core arithmetic operations, such as floating-point multiply-add and accumulate and vector and matrix operations, that take up much of the execution cycles for HPC and AI workloads.

Dell EMC PowerEdge R7525

Several industries need these advanced HPC capabilities. Data analytics workloads, such as the Reverse Time Migration used in the oil and gas industry, require accelerated computing to handle massive IOPS demands. Private and government research organizations require dense compute and GPU capabilities to run complex simulations that must resolve the complications of chaos theory. Academia leverages GPUs to accelerate virus simulations, genomics research, and quantum physics workloads.

Modern GPUs are extending their capabilities to run efficiently as compute co-processors for HPC and AI workloads, with hardware support for a variety of numerical precisions and increased memory bandwidth. The AMD Instinct MI100 is the latest entry into the compute accelerator arena, delivering compelling performance coupled with a flexible and open software ecosystem.

Dell has teamed with AMD to bring you the PowerEdge R7525 with AMD Instinct MI100. This combination enables faster scientific discoveries by speeding up simulations and shortening time to insight, using complex deep learning models for your most compute-intensive use cases. The AMD Instinct MI100 uses the new AMD CDNA (Compute DNA) architecture with all-new Matrix Core Technology to deliver a nearly 7x (FP16) performance boost for AI workloads compared with AMD's prior generation. Scientific applications will benefit from the MI100’s single-precision (FP32) Matrix Core performance, a nearly 3.5x boost for HPC and AI workloads compared with AMD's prior generation. Leading AI researchers can leverage the MI100’s support for newer machine learning number formats such as bfloat16 to help reduce training time from weeks and days to hours on the PowerEdge R7525.
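To make the bfloat16 format mentioned above concrete, here is a minimal sketch in plain Python of how a 32-bit float maps to bfloat16: the format keeps the sign bit and all 8 exponent bits of FP32 and shortens the mantissa to 7 bits. The function names are hypothetical and chosen for illustration, this says nothing about how the MI100 implements the format in hardware, and NaN inputs are not handled specially.

```python
import struct


def float32_to_bfloat16_bits(x: float) -> int:
    """Return the 16-bit bfloat16 pattern for x (round-to-nearest-even).

    Illustrative helper only; NaN inputs are not treated specially.
    """
    # Reinterpret the value as its 32-bit IEEE 754 single-precision pattern.
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    # Round to nearest even on the 16 low bits that are about to be dropped.
    rounding_bias = 0x7FFF + ((bits >> 16) & 1)
    return ((bits + rounding_bias) >> 16) & 0xFFFF


def bfloat16_bits_to_float(b: int) -> float:
    """Expand a bfloat16 bit pattern back to a Python float."""
    # bfloat16 is the upper half of an FP32 word, so pad with 16 zero bits.
    return struct.unpack("<f", struct.pack("<I", (b & 0xFFFF) << 16))[0]


def roundtrip(x: float) -> float:
    """Value of x after being stored as bfloat16."""
    return bfloat16_bits_to_float(float32_to_bfloat16_bits(x))


if __name__ == "__main__":
    for value in (1.0, 3.14159274, 1e30):
        print(value, "->", roundtrip(value))
```

Because the exponent width matches FP32, a value as large as 1e30 survives the conversion with only a loss of precision, whereas it would overflow in FP16. That trade of precision for range is the usual reason the format is attractive for training.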

AMD Instinct MI100

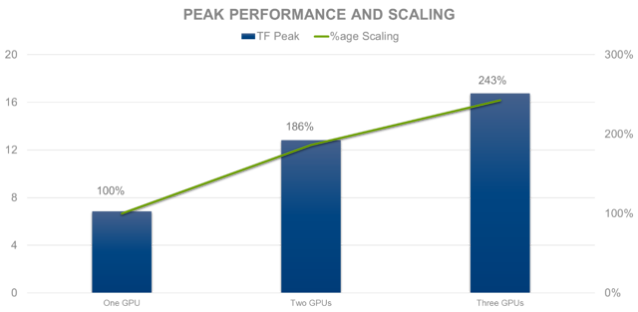

This collaboration brings amazing hardware together to increase the performance of your HPC workloads. The R7525 with MI100 leverages PCIe Gen4 capability, which is ideal for bandwidth-intensive HPC applications that move a lot of data over the PCIe bus. These performance boosts lead to faster solution times, more efficient resource utilization, and more seamless HPC scaling.

The MI100 brings more to the table than 2x the processing density of AMD's previous-generation products: it also complements the other components within the R7525. By itself, the MI100 delivers:

1. The world’s fastest HPC accelerator, with up to 11.5 TFLOPS peak double-precision (FP64) performance

2. Nearly 3.5x (FP32) matrix performance for HPC and a nearly 7x (FP16) performance boost for AI workloads compared with AMD's prior generation

The new AMD Infinity Architecture connects three MI100s inside the R7525 over PCIe® Gen4. The higher bandwidth and lower latency of PCIe Gen4 compared with Gen3 improve GPU utilization, making HPC workloads run more efficiently.
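As a rough illustration of the Gen4-over-Gen3 bandwidth difference, the sketch below computes the theoretical peak bandwidth per direction from the published PCIe signaling rates (8 GT/s per lane for Gen3, 16 GT/s for Gen4, both with 128b/130b encoding). The x16 link width and the 32 GB payload are assumptions made for the example, not figures from this article, and real transfers will be slower because of protocol overhead.

```python
# Per-lane signaling rate in gigatransfers per second for each generation.
GT_PER_S = {3: 8.0, 4: 16.0}
# Gen3 and Gen4 both use 128b/130b line encoding.
ENCODING_EFFICIENCY = 128 / 130


def pcie_bandwidth_gb_s(gen: int, lanes: int = 16) -> float:
    """Theoretical peak bandwidth in GB/s, one direction (x16 is an assumption)."""
    # One transfer carries one bit per lane; divide by 8 to convert bits to bytes.
    return GT_PER_S[gen] * ENCODING_EFFICIENCY * lanes / 8.0


def transfer_seconds(size_gb: float, gen: int, lanes: int = 16) -> float:
    """Best-case time to move size_gb gigabytes across the link."""
    return size_gb / pcie_bandwidth_gb_s(gen, lanes)


if __name__ == "__main__":
    payload_gb = 32.0  # hypothetical payload size, chosen only for illustration
    for gen in (3, 4):
        print(
            f"Gen{gen} x16: {pcie_bandwidth_gb_s(gen):.2f} GB/s peak, "
            f"{transfer_seconds(payload_gb, gen):.2f} s for {payload_gb:.0f} GB"
        )
```

Under these assumptions the Gen4 link moves the same payload in half the time, which is where the improved GPU utilization for data-heavy workloads comes from.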

Further, AMD’s ROCm open software platform lets you write code in different compute languages and move it across compute platforms. It is an open and portable ecosystem that supports multiple architectures, including GPUs from other vendors. AMD also provides a tool called HIPIFY, which enables code written in native CUDA to be easily converted to the AMD ROCm HIP programming model with minimal post-conversion tuning or optimization.
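To give a feel for the kind of source-to-source translation described above, here is a toy sketch in Python that renames a handful of CUDA runtime identifiers to their HIP counterparts. It is not AMD's tool and does not reproduce its behavior: `toy_hipify` and the small mapping table are hypothetical names for this example, and the table covers only a few well-known renames.

```python
import re

# A few CUDA-to-HIP renames; the real tool's mapping is far larger.
CUDA_TO_HIP = {
    "cuda_runtime.h": "hip/hip_runtime.h",
    "cudaMalloc": "hipMalloc",
    "cudaMemcpy": "hipMemcpy",
    "cudaMemcpyHostToDevice": "hipMemcpyHostToDevice",
    "cudaMemcpyDeviceToHost": "hipMemcpyDeviceToHost",
    "cudaFree": "hipFree",
    "cudaDeviceSynchronize": "hipDeviceSynchronize",
}

# Match whole identifiers only, trying longer names first.
_PATTERN = re.compile(
    r"\b(?:"
    + "|".join(re.escape(name) for name in sorted(CUDA_TO_HIP, key=len, reverse=True))
    + r")\b"
)


def toy_hipify(source: str) -> str:
    """Rename the known CUDA identifiers in source; leave everything else alone."""
    return _PATTERN.sub(lambda match: CUDA_TO_HIP[match.group(0)], source)


if __name__ == "__main__":
    cuda_snippet = (
        "#include <cuda_runtime.h>\n"
        "cudaMalloc(&d_buf, nbytes);\n"
        "cudaMemcpy(d_buf, h_buf, nbytes, cudaMemcpyHostToDevice);\n"
        "cudaFree(d_buf);\n"
    )
    print(toy_hipify(cuda_snippet))
```

The close one-to-one naming between the two APIs is what makes largely mechanical conversion feasible. Identifiers outside the table are left untouched here, so a real port would still need review after conversion.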

Source: delltechnologies.com
