Dell Technologies and NVIDIA have been helping our joint customers fast-track their Artificial Intelligence and Deep Learning initiatives. For those looking to leverage a pre-validated hardware and software stack for DL, we offer Dell EMC Ready Solutions for AI: Deep Learning with NVIDIA, which also feature Dell EMC Isilon All-Flash storage. For organizations that prefer to build their own solution, we offer the ultra-dense Dell EMC PowerEdge C-series, with NVIDIA V100 Tensor Core GPUs, which allows scale-out AI solutions from four up to hundreds of GPUs per cluster. We also offer the Dell EMC DSS 8440 server, which supports up to 10 NVIDIA V100 GPUs or 16 NVIDIA T4 Tensor Core GPUs. Our collaboration is built on the philosophy of offering flexibility and informed choice across a broad portfolio that combines the best GPU-accelerated compute, scale-out storage, and networking.

To give organizations even more flexibility in how they deploy AI from sandbox to production with breakthrough performance for large-scale AI, Dell Technologies and NVIDIA have recently collaborated on a new reference architecture for AI and DL workloads that combines the Dell EMC Isilon F800 all-flash scale-out NAS, Dell EMC PowerSwitch S5232F-ON switches, and NVIDIA DGX-2 systems.

Key components of the reference architecture include:

◉ Dell EMC Isilon all-flash scale-out NAS storage delivers the scale (up to 58 PB), performance (up to 945 GB/s), and concurrency (up to millions of connections) needed to eliminate the storage I/O bottleneck, keeping even the most data-hungry compute layers fed and accelerating AI workloads at scale. A single Isilon cluster may contain an all-flash tier for high performance and an HDD tier for lower cost, and files can be moved automatically across tiers to optimize performance and cost throughout the AI development life cycle.

◉ The PowerSwitch S5232F-ON is a 1 RU switch with 32 QSFP28 ports that can provide 40 GbE and 100 GbE connectivity. This series supports RDMA over Converged Ethernet (RoCE), which carries RDMA traffic over Ethernet. Combined with NVIDIA GPUDirect RDMA, this allows a GPU to exchange data with a NIC directly across the PCIe bus, without involving the CPU. Both RoCE v1 and v2 are supported.

◉ The NVIDIA DGX-2 system includes fully integrated hardware and software that is purpose-built for AI development and high-performance training at scale. Each DGX-2 system is powered by 16 NVIDIA V100 Tensor Core GPUs that are interconnected using NVIDIA NVSwitch technology, providing an ultra-high-bandwidth, low-latency fabric for inter-GPU communication.

Benchmark Methodology

To validate the new reference architecture, we ran industry-standard image classification benchmarks using a 22 TB dataset to simulate real-world training workloads. We used three DGX-2 systems (48 GPUs total) and eight Isilon F800 nodes connected through a pair of PowerSwitch S5232F-ON switches. Various benchmarks from the TensorFlow Benchmarks repository were executed. This suite of benchmarks performs training of an image classification convolutional neural network (CNN) on labeled images. Essentially, the system learns whether an image contains a cat, dog, car, train, etc. The well-known ILSVRC2012 image dataset (often referred to as ImageNet) was used. This dataset contains around 1.3 million training images in 148 GB. This dataset is commonly used by DL researchers for benchmarking and comparison studies. To approximate the performance of this reference architecture for datasets much larger than 148 GB, the dataset was duplicated 150 times, creating a 22 TB dataset.
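The dataset sizing above is simple arithmetic, sketched here as a quick check. The figures come from the text; the use of decimal units (1 TB = 1,000 GB) and the rounded image count of 1.3 million are assumptions, so the per-image size is only a rough average.

```python
# Figures quoted in the text. Decimal units (1 TB = 1000 GB) are an assumption.
BASE_DATASET_GB = 148
BASE_IMAGES = 1_300_000   # "around 1.3 million" training images
COPIES = 150


def scaled_dataset(base_gb, base_images, copies):
    """Return (total size in TB, total image count, mean image size in KB)."""
    total_tb = base_gb * copies / 1000
    total_images = base_images * copies
    # Mean share of the dataset per image; duplication does not change it.
    mean_image_kb = base_gb * 1_000_000 / base_images
    return total_tb, total_images, mean_image_kb


total_tb, total_images, mean_kb = scaled_dataset(BASE_DATASET_GB, BASE_IMAGES, COPIES)
print(f"{total_tb:.1f} TB, {total_images:,} images, ~{mean_kb:.0f} KB per image")
```

Duplicating the dataset 150 times gives roughly 22.2 TB, which the text rounds to 22 TB.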

To determine whether the network or storage limited performance, we ran identical benchmarks on the original 148 GB dataset. After the first epoch, the entire dataset was cached in memory on the DGX-2 system, and subsequent runs had zero storage I/O. These results are labeled Linux Cache in the next section.

Benchmark Results

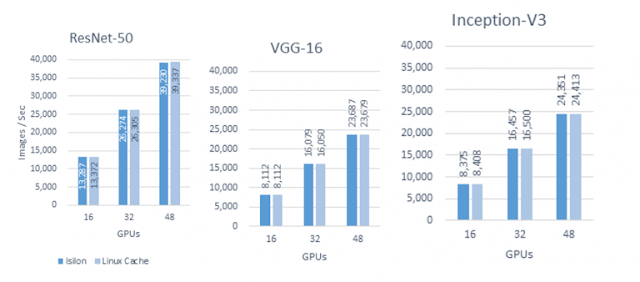

We can draw a few conclusions from the benchmark results shown in the figure above.

◉ Image throughput and therefore storage throughput scale linearly from 16 to 48 GPUs.

◉ There is no significant difference in image throughput when the data comes from Isilon instead of Linux cache.

In the following figure, system metrics captured during three runs of ResNet-50 training on 48 GPUs are shown. There are a few conclusions that we can make from the GPU and CPU metrics.

◉ Each GPU had 97% utilization or higher. This indicates that the GPUs were fully utilized.

◉ The maximum CPU core utilization on the DGX-2 system was 70%. This occurred with ResNet-50.

The next figure shows the network metrics during the same ResNet-50 training on 48 GPUs. The total storage throughput was 4,501 MB/sec.

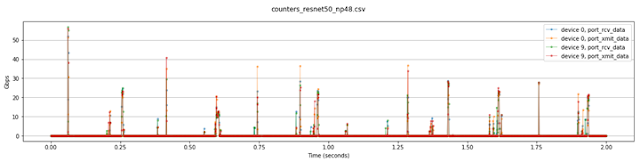

Based on the 15-second average network utilization for the RoCE network links, it appears that the links were using less than 80 MB/sec (640 Mbps) during ResNet-50. However, this average is extremely misleading. We measured the network utilization with millisecond precision and plotted it in the figure below. This shows periodic spikes of up to 60 Gbps per link per direction. For VGG-16, we measured peaks of 80 Gbps (not shown).
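The gap between a 15-second average and millisecond-level peaks can be reproduced with synthetic numbers. The sketch below is not the measured trace. The burst period (one burst per second) and burst length (10 ms) are hypothetical values chosen only to show how a link that peaks at 60 Gbps can still average under 80 MB/sec.

```python
def window_average_mb_per_s(samples_gbps, sample_ms):
    """Average per-interval rates (in Gbps) into MB/s over the whole window."""
    total_bits = sum(rate * 1e9 * (sample_ms / 1000) for rate in samples_gbps)
    window_s = len(samples_gbps) * sample_ms / 1000
    return total_bits / 8 / 1e6 / window_s


def synthetic_trace(window_s, period_ms, burst_ms, burst_gbps):
    """One sample per millisecond: burst_gbps for burst_ms of every period_ms, else idle."""
    n_samples = window_s * 1000
    return [burst_gbps if (t % period_ms) < burst_ms else 0.0 for t in range(n_samples)]


# Hypothetical burst pattern: 10 ms at 60 Gbps once per second, for 15 seconds.
trace = synthetic_trace(window_s=15, period_ms=1000, burst_ms=10, burst_gbps=60.0)
avg = window_average_mb_per_s(trace, sample_ms=1)
peak = max(trace)
print(f"15-second average: {avg:.0f} MB/s, millisecond peak: {peak:.0f} Gbps")
```

With a 1% duty cycle, the long-window average is about 75 MB/sec even though the link momentarily runs at 60 Gbps, which is why switch and NIC headroom should be sized against the peaks rather than the averages.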

TensorFlow Storage Benchmark

To understand the limits of Isilon when used with TensorFlow, we created a TensorFlow application (TensorFlow Storage Benchmark) that only reads the TFRecord files (the same ones that were used for training). Neither preprocessing nor GPU computation is performed. The only work performed is counting the number of bytes in each TFRecord. This application also has the option to synchronize all readers after each batch of records, forcing them to proceed at the same speed. This option was enabled to better simulate a DL or ML training workload. The result of this benchmark is shown below.
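The idea of a read-only, synchronized byte counter can be sketched with the Python standard library alone. This is not the TensorFlow Storage Benchmark itself, which is not shown in this article. It is a minimal illustration that assumes one reader thread per file and uses the documented TFRecord framing (an 8-byte little-endian length, a 4-byte CRC of the length, the payload, and a 4-byte CRC of the payload). The function names and the `batch_size` default are hypothetical, and CRCs are skipped rather than verified.

```python
import struct
import threading
import time


def _sync(barrier):
    """Wait for the other readers; carry on alone once any reader has finished."""
    try:
        barrier.wait()
    except threading.BrokenBarrierError:
        pass


def count_record_bytes(path, batch_size, barrier, totals, index):
    """Sum the payload bytes in one TFRecord file, syncing after each batch."""
    total = 0
    in_batch = 0
    try:
        with open(path, "rb") as f:
            while True:
                header = f.read(12)      # uint64 length + uint32 CRC of the length
                if len(header) < 12:
                    break                # end of file
                (length,) = struct.unpack("<Q", header[:8])
                payload = f.read(length)
                f.read(4)                # uint32 CRC of the payload (not verified)
                total += len(payload)
                in_batch += 1
                if in_batch == batch_size:
                    in_batch = 0
                    _sync(barrier)
    finally:
        totals[index] = total
        barrier.abort()                  # release readers still waiting on this one


def run_benchmark(paths, batch_size=64):
    """Read all files concurrently; return (total payload bytes, elapsed seconds)."""
    if not paths:
        return 0, 0.0
    barrier = threading.Barrier(len(paths))
    totals = [0] * len(paths)
    threads = [
        threading.Thread(target=count_record_bytes,
                         args=(p, batch_size, barrier, totals, i))
        for i, p in enumerate(paths)
    ]
    start = time.perf_counter()
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return sum(totals), time.perf_counter() - start
```

The barrier forces every reader to finish its current batch before any reader starts the next one, which mimics the lockstep behavior of synchronous training. Dividing the returned byte count by the elapsed time gives an aggregate read rate.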

With this storage-only workload, the maximum read rate obtained from the eight Isilon nodes was 24,772 MB/sec. As Isilon has been demonstrated to scale to 252 nodes, additional throughput can be obtained simply by adding Isilon nodes.
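To put these numbers in context, the short calculation below derives the per-node read rate, the headroom over the ResNet-50 training run, and a projection for larger clusters. The measured figures are taken from the text. The projection assumes perfectly linear scaling with node count, which is an illustrative assumption and not a measured result.

```python
# Figures quoted in the text.
STORAGE_ONLY_MB_S = 24_772   # maximum read rate from eight Isilon nodes
MEASURED_NODES = 8
TRAINING_MB_S = 4_501        # storage throughput during ResNet-50 on 48 GPUs
MAX_NODES = 252              # largest demonstrated Isilon cluster size

per_node_mb_s = STORAGE_ONLY_MB_S / MEASURED_NODES
headroom = STORAGE_ONLY_MB_S / TRAINING_MB_S


def projected_mb_s(nodes):
    """Projected read rate, ASSUMING throughput scales linearly with node count."""
    return per_node_mb_s * nodes


print(f"Per-node read rate: {per_node_mb_s:.1f} MB/s")
print(f"Headroom over ResNet-50 training: {headroom:.1f}x")
print(f"Linear projection at {MAX_NODES} nodes: {projected_mb_s(MAX_NODES):,.0f} MB/s")
```

Under these assumptions, the eight-node cluster delivered about 5.5 times the throughput that the 48-GPU ResNet-50 run consumed.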
