What is Deep Learning?

The early stages of World War II brought about many challenges. Aerial warfare left historically safe areas vulnerable to attack from the air, and strategies such as building a bigger wall or using the ocean as a barrier were quickly deemed useless. In Rise of the Machines: A Cybernetic History, Thomas Rid walks through how learning machines were born out of the need to build capable anti-aircraft systems. By combining man and machine, anti-aircraft guns became more dynamic in repelling aerial attacks, marking the dawn of the partnership between man and machine. Over the years those learning machines have advanced from simple pattern recognition to neural networks that allow automobiles, drones, and trains to operate on their own.

Many of the advances in learning machines have been made possible by Deep Learning. Think of Deep Learning as a subset of Machine Learning in which algorithms learn in a way loosely modeled on the human brain. In traditional Machine Learning, algorithms work from a defined set of features chosen by people. For example, identifying car types with Machine Learning requires predefined features such as size, spoiler, and wheelbase. Deep Learning, however, allows the neural network to learn the features itself from the data fed through the input layer.
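To make the difference concrete, here is a minimal sketch in Python. The post itself contains no code, so the toy XOR data, the network size, the learning rate, and the names `TinyNet`, `train`, and `XOR_DATA` are all illustrative assumptions. Nobody tells this network which features matter: the weights of its hidden layer are learned from the raw inputs through backpropagation, and those hidden activations play the role of the features a Machine Learning engineer would otherwise define by hand.

```python
import math
import random

# Hypothetical toy dataset: the XOR pattern. No single weighted sum of the
# two raw inputs separates the classes, so the network has to build
# intermediate features in its hidden layer.
XOR_DATA = [
    ([0.0, 0.0], 0.0),
    ([0.0, 1.0], 1.0),
    ([1.0, 0.0], 1.0),
    ([1.0, 1.0], 0.0),
]


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


class TinyNet:
    """Input layer -> hidden layer -> one output unit (illustrative only).
    The hidden units are never told what to detect; their weights are learned."""

    def __init__(self, n_inputs=2, n_hidden=4, seed=0):
        rng = random.Random(seed)
        self.w1 = [[rng.uniform(-1, 1) for _ in range(n_inputs)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [rng.uniform(-1, 1) for _ in range(n_hidden)]
        self.b2 = 0.0

    def forward(self, x):
        # Hidden activations are the features the network defines for itself.
        hidden = [
            sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
            for row, b in zip(self.w1, self.b1)
        ]
        output = sigmoid(sum(w * h for w, h in zip(self.w2, hidden)) + self.b2)
        return hidden, output

    def loss(self, data):
        """Mean squared error over the dataset."""
        return sum((self.forward(x)[1] - t) ** 2 for x, t in data) / len(data)

    def train_step(self, data, lr):
        """One full-batch gradient-descent step computed with backpropagation."""
        n = len(data)
        g_w1 = [[0.0] * len(row) for row in self.w1]
        g_b1 = [0.0] * len(self.b1)
        g_w2 = [0.0] * len(self.w2)
        g_b2 = 0.0
        for x, target in data:
            hidden, y = self.forward(x)
            # Gradient of the MSE loss w.r.t. the output unit's pre-activation.
            d_out = 2.0 * (y - target) * y * (1.0 - y) / n
            g_b2 += d_out
            for j, h in enumerate(hidden):
                g_w2[j] += d_out * h
                # Backpropagate through output weight j and hidden sigmoid j.
                d_hidden = d_out * self.w2[j] * h * (1.0 - h)
                g_b1[j] += d_hidden
                for i, xi in enumerate(x):
                    g_w1[j][i] += d_hidden * xi
        # Apply the accumulated gradients only after the whole batch is seen.
        for j in range(len(self.w2)):
            self.w2[j] -= lr * g_w2[j]
            self.b1[j] -= lr * g_b1[j]
            for i in range(len(self.w1[j])):
                self.w1[j][i] -= lr * g_w1[j][i]
        self.b2 -= lr * g_b2


def train(net, data, epochs=5000, lr=1.0):
    for _ in range(epochs):
        net.train_step(data, lr)
    return net


net = TinyNet(seed=0)
loss_before = net.loss(XOR_DATA)
train(net, XOR_DATA)
loss_after = net.loss(XOR_DATA)
print(f"loss before training: {loss_before:.4f}, after: {loss_after:.4f}")
for x, target in XOR_DATA:
    hidden, y = net.forward(x)
    print(x, "target:", target, "prediction:", round(y, 3))
```

With a fixed seed the run is repeatable, but how low the loss ends up depends on the seed and learning rate, and small networks like this one occasionally stall on XOR. Frameworks such as TensorFlow automate this same gradient calculation so that it scales to far larger networks and data sets.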

Why Deep Learning Now?

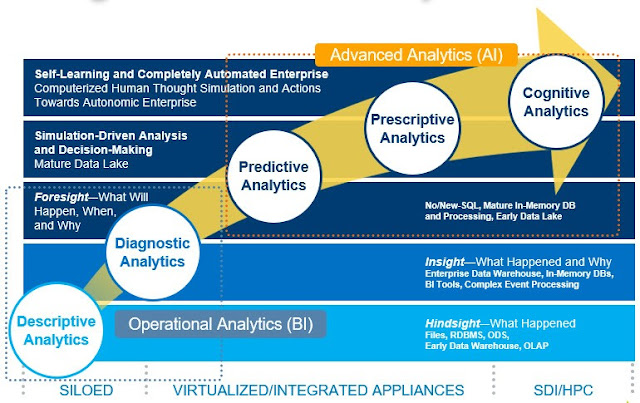

The mathematics and research behind Deep Learning have been around for decades, but only recently has its true potential begun to be realized. The key to the rise of Deep Learning is data. Andrew Ng, a Stanford professor and one of the foremost experts on Artificial Intelligence and Deep Learning, attributes the rise of deep learning to improved algorithms and the growth in available data. As Data Scientists and Machine Learning Engineers use better algorithms and larger data sets, the accuracy of their models improves. Insights gleaned from Deep Learning still fit within the Big Data Maturity Model, but at a higher level of maturity. Reporting of the kind seen in Descriptive Analytics doesn’t require complex deep learning algorithms or large, varied data sets. However, as businesses move up the maturity model toward use cases such as teaching an automobile to drive itself through the busy streets of Nashville or San Francisco, they require more complex algorithms, more varied data types, and vast amounts of data.

Data is Key to Better Models

Improved accuracy might suggest to Data Science teams that the key to creating the best model is better algorithms and neural networks, but the technology is only part of the equation. In fact, I would argue the neural networks are the easy part. Look at the biggest implementers of Deep Learning, such as Google and Facebook. Both have released open-source versions of their Deep Learning frameworks to the public, in the form of TensorFlow and Caffe2. Why would Facebook risk doing that? Couldn’t a rival or startup build models to predict which ads our friends and families are likely to click on? The problem for that challenger is that its models couldn’t come close to Facebook’s accuracy, because it wouldn’t have anything close to the Data Capital Facebook has. Data, not algorithms, is the real differentiator, which is why companies can afford to open-source their deep learning frameworks.

Ready for Data Capital

Data Capital has moved beyond theory and is now seen as a law in every industry for anyone looking to disrupt the market. The data businesses hold is their accelerant to innovation and will decide the winners and losers of tomorrow. Data is the great equalizer in deciding whether the next application or project your business undertakes will be successful. No longer do we have to rely on gut decisions and hope we made the right call. We can now reduce our risk by letting the data tell us what to do. The challenge now lies in how we collect, protect, cleanse, and provide access to our data. Tomorrow’s disruptors understand that the real risk is not having a strategy for managing Unstructured Data at Scale.
