The increased digitization of goods and services has positioned PCs as the lifeblood of every modern business. Keeping pace with the latest updates to BIOS, drivers, Windows operating systems and applications is straining IT operations. Some of the factors at play include:

- Return to office. While hybrid workforces are here to stay, a recent IDC study shows that 78% of workers have already returned to the office permanently. The devices deployed when workers went remote in 2020 are nearing their end-of-life date.

- AI. IDC forecasts artificial intelligence PCs to account for nearly 60% of all PC shipments by 2027. As you refresh your PC fleet, you will want to incorporate NPU-enabled configurations for the use cases that benefit from enhanced local AI.

- Windows OS transition. Windows 10 will go out of support on October 14, 2025, and the current version (22H2) will be the last version of Windows 10.

When I talk to customers about device refresh, I often hear that they are burdened with bubble-like refresh events, where a large share of the fleet comes due at once. These events are disruptive, cost more than they should, pull IT away from business-led initiatives and often result in a negative refresh experience for end users. In today’s hybrid environment, managing a decentralized workforce makes the refresh process even more complex, costly and disruptive.

Organizations struggling with these challenges should consider partnering with a global leader in PC refresh management to design and run an effective refresh program that encompasses:

- Automation of the entire refresh process, from system build to end user communication.

- Optimization of IT resources and a predictable refresh budget.

- A non-disruptive refresh experience for end users.

Bringing Together Dell’s Award-winning Hardware With its Hardware Lifecycle Management Expertise

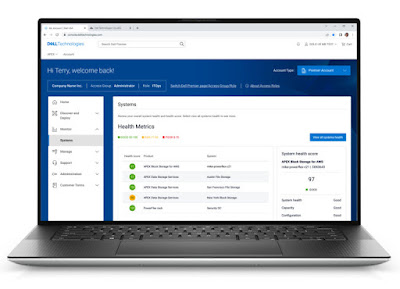

Dell Managed Services for device refresh delivers a strategic PC refresh program that includes roll-out planning, procurement forecasting, end user communication and deployment automation.

Device refresh should not be disruptive to your business. Dell Technologies brings expertise, planning tools and patented intellectual property to automate the refresh process. From strategic timing to efficient execution, you can hold Dell accountable for delivering exceptional refresh outcomes.

You can also rely on Dell for demand forecasting and control of end user device options to maintain clear visibility into refresh costs over time. This enables a seamless supply chain, with timely and targeted availability, to keep your fleet refresh on track and within budget, all at a predictable cost per device.

Dell manages interactions and communications with end users throughout the refresh process, from request to deployment, including escalations and incident resolution. Our commitment is to make the refresh experience productive and easy for your employees. For end users, getting a new PC should be like receiving a great gift: fun and exciting.

Managing the Device Lifecycle Beyond Day One

Once PCs are deployed and your end users are happy, events will continue to arise over the PC lifecycle where Dell can add tremendous value. The Dell Lifecycle Hub ships new hire kits the same day or the next business day after they are requested, facilitates whole unit exchange, and can reclaim and recycle devices with NIST-level data wiping, either onsite or remotely. Lifecycle Hub gives your devices more than one life by cascading reclaimed devices to other end users in your organization. It also allows organizations to trade in their devices for credits toward the purchase of new Dell devices.

Time to Focus on Business-led IT Initiatives

As you plan for your PC refresh and hardware lifecycle management needs, consider Dell Managed Services. Shift tedious and time-consuming work to us so you can focus on your business-led IT initiatives.

Our PC hardware lifecycle management expertise spans the world, draws on patented intellectual property and has been used by hundreds of customers for millions of device refreshes. Reach out to your Dell account representative today to learn more about how Dell can help you manage your upcoming PC refresh and your PC hardware lifecycle.

Source: dell.com