Sunday, 27 November 2022

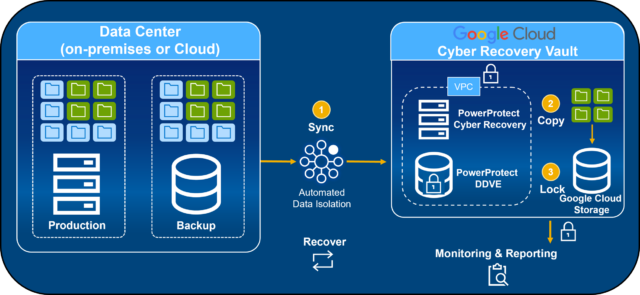

Secure, Isolate and Recover Critical Data with Google Cloud

Saturday, 26 November 2022

Demystifying Observability in the SRE Process

Brainstorm with Subject Matter Experts

Set Up KPIs and Scoring

Putting Everything Together

Thursday, 24 November 2022

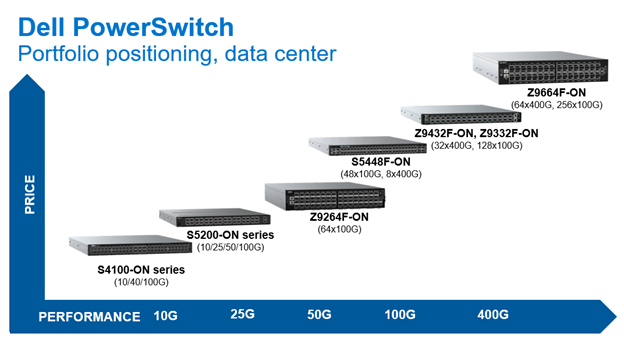

64 x 400GbE: A Faster, Greener Data Center

Tuesday, 22 November 2022

Bringing Dell Data Services and Software Innovations to AWS

Delivering Enterprise Storage Capabilities to AWS

Enhancing Cyber Resiliency and Modern Data Protection in AWS

Bringing Amazon EKS Anywhere On-premises

Building a Cloud Strategy That Works for You with Dell Services

Spend Time with Us at AWS re:Invent

Sunday, 20 November 2022

Managing Your Organization’s Digital Transformation Costs with Dell APEX

Why Multicloud Matters

Multicloud Benefits and Challenges

IaaS is an Answer

Saturday, 19 November 2022

Enabling Open Embedded Systems Management on PowerEdge Servers

Open-source Software with OpenBMC

Open Ecosystem Embedded Software = Dell Open Server Manager

Security, Support and Manageability

Friday, 18 November 2022

Coming Soon: Backup Target from Dell APEX

Thursday, 17 November 2022

Build the Private 5G Network of Your Dreams

Time for a Renovation

Reconstruct Your Connectivity Solutions

Call In the Experts

Tuesday, 15 November 2022

Shift into High Gear with High Performance Computing

Today’s racing teams need to extract every bit of performance from their cars and technical teams to succeed in such a competitive sport. They rely on high performance computing not only to design their cars and engines but also to analyze race telemetry and make timely decisions that get them to the finish line first.

High performance computing (HPC) also provides data-driven insights in other industries, leading to significant innovation. That power of discovery is increasing the pressure on organizations to deploy more HPC workloads faster. Additionally, new capabilities in artificial intelligence and machine learning are expanding the scope and complexity of HPC workloads.

Implementing and managing HPC is complex, and many IT organizations are not equipped for it. The primary obstacles are financial limitations, insufficient in-house HPC expertise and concerns about keeping data secure. Dell APEX High Performance Computing helps solve these issues so you too can shift your HPC workloads into high gear.

Start in the Pole Position

A managed HPC platform that is ready for you to run your workloads is the best starting point. Dell APEX High Performance Computing provides:

◉ All you need to run your workloads: including hardware and HPC management software consisting of NVIDIA Bright Cluster Manager, Kubernetes or Apptainer/Singularity container orchestration and a SLURM® job scheduler.

◉ Managed HPC platform: eliminate the internal time and specialized skills required to design, procure, deploy and maintain HPC infrastructure and management software, so you can focus your resources on your HPC workloads.

◉ Convenient monthly payment: skip upfront HPC capital expenditures with a 1-, 3- or 5-year subscription that can be applied as an operating expense.

◉ Easy to order: choose between validated designs for Life Sciences or Digital Manufacturing while keeping flexibility on basic requirements such as capacity, processor speed, memory, GPUs, networking and containers.

◉ Flexible capacity: additional compute power and storage that Dell makes available beyond your committed capacity so you can scale resources to meet periods of peak demand.

◉ Customer Success Manager: your main point of contact and a trusted advisor throughout your Dell APEX High Performance Computing journey.

Rely on a Skilled Pit Crew

A good race team doesn’t need a pit crew just for speedy fill-ups and tire changes. The crew is essential to the ongoing maintenance and fine-tuning of the race car. With APEX High Performance Computing, a Customer Success Manager is your crew chief, coordinating the Dell technicians in charge of platform installation and configuration, 24×7 HPC platform performance and capacity monitoring, ongoing software and hardware upgrades, 24×7 proactive solution-level hardware and software support, and onsite data sanitization with certification.

Safety First

In racing, it is imperative to implement the features that will keep drivers safe in their seats. With Dell APEX High Performance Computing, we deploy your HPC solution securely in your datacenter. The infrastructure is dedicated to your organization and is not partitioned or shared with other tenants. This is ideal if you process sensitive or proprietary data that you need to keep safe:

◉ Keep your data close: minimize latency and keep your data secure in your datacenter, avoiding the need to migrate large amounts of sensitive data to the public cloud.

◉ Lifecycle management: one of the most important features of our managed service is the regular updating of the system and HPC management software to ensure the most recent security patches are in place.

Get to the Winner’s Podium

Our solutions are based on Dell Validated Designs with state-of-the-art hardware optimized for Life Sciences and Digital Manufacturing workloads. But even the fastest race cars require ongoing maintenance to stay in the race. Dell APEX High Performance Computing includes ongoing system monitoring, tuning and support, plus regular hardware and software updates, to ensure your workloads run on reliable, optimized systems.

Strong Partnerships Win Races

Many industries count on Dell to help them steer through the complexities of High Performance Computing, including Formula 1 racing teams. You too can count on Dell to manage your HPC infrastructure, backed by our deep global IT expertise, so you can focus on what you do best: discover and innovate.

Source: dell.com