This post continues our blog series on how Dell IT is Cracking the Code for a World-class Developer Experience.

As we at Dell Digital, Dell’s IT organization, have continued our DevOps journey and embraced the Product Model approach, the role of our application developers has shifted significantly. Developers no longer hand off the applications they create to someone else to operate, monitor and manage. They now own their applications top to bottom, throughout their lifecycle. To support this, Dell Digital provides developers with the data, tools and methodologies they need to better understand their applications.

Over the past two years, our Observability product team has been evolving our traditional application monitoring process into what we now call Application Intelligence: a much broader set of tools and processes that gather data and deliver it to developers so they can analyze, track and manage the applications they build. It helps them gain insights into application performance, behavior, security and, most importantly, user experience. Developers had limited access to this data before, because under the old operating model they had no reason to monitor how their applications ran.

As developers began to use more data to evolve their DevOps operating model, a key challenge we had to overcome was the high level of fragmentation in the industry's tooling. Multiple, overlapping tools forced developers to switch contexts frequently, which ultimately hurt productivity. To reduce that fragmentation, we created a simplified, streamlined experience that gives developers easy access to application intelligence through automated processes in our DevOps pipeline, along with self-service capabilities to instrument their own applications.

By removing the friction that multiple tools, different navigation flows and different data and organizational structures used to cause, we are enabling developers to spend less time locating performance issues and more time adding value.

Making a Cultural Change

Transforming our observability portfolio to meet developers’ needs required both a cultural and technical strategy.

We started with the cultural change, educating developers on where to find the data they need now that they own their apps end-to-end. Under the product model, our IT software and services are organized as products defined by the business problems they solve, and developers belong to product teams that are responsible for their solutions and services from the time they are built and throughout their entire lifecycle. Giving developers the data to better understand their products helps them deliver higher-quality code in less time, meeting the rapid delivery pace that the product model demands.

That was the first step in opening up data that had previously been highly restricted. My team always had extensive observability data but fiercely controlled it, because that was the operational process.

Now we give developers the freedom to see what is going on for themselves by making the data available to everyone, a shift to data socialization.

We spent a lot of time talking to developers about what they were looking for, what was missing and what technologies worked for them. We asked them things like, “when you wake up at three a.m. because someone called you with a priority one issue and it’s raining fire on your app, how do we make your life easier during that moment?”

Self-service was the first thing they asked for. They don’t want to have to call us anymore to get the information they need. So, as a crucial first step, we gave developers self-service access to the tools they need to get their job done without asking us for permission.

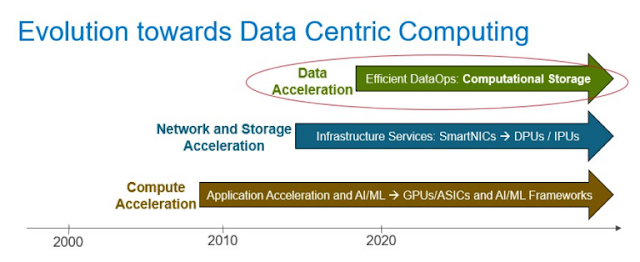

We also changed how we look at application data to meet developers’ needs, making the application the center of our observations. Our traditional monitoring process took a bottom-up view of the environment, assuming that if the infrastructure (the servers, CPUs and hard drives) was working, the app was working too. However, that is not always the case.

Two years ago, we adopted a top-down view of the data, looking first at whether the app is working and drilling down into infrastructure functionality from there. The application is now the most important thing in our observability process.
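To make the difference between the two views concrete, here is a minimal, hypothetical sketch in Python. The class names, the error-rate and latency thresholds and the sample hosts are illustrative assumptions, not Dell's actual tooling or service-level objectives. The bottom-up check declares the app healthy whenever every server looks fine, while the top-down check starts from user-facing signals and drills into the infrastructure only after the application itself shows a problem.

```python
from dataclasses import dataclass, field

@dataclass
class HostMetrics:
    name: str
    cpu_ok: bool
    disk_ok: bool

@dataclass
class AppSignals:
    error_rate: float       # fraction of failed user requests
    p95_latency_ms: float   # 95th-percentile response time
    hosts: list = field(default_factory=list)

# Hypothetical thresholds chosen for illustration only.
ERROR_BUDGET = 0.01
LATENCY_SLO_MS = 500.0

def bottom_up_healthy(app: AppSignals) -> bool:
    # Traditional view: if every server looks fine, assume the app is fine.
    return all(h.cpu_ok and h.disk_ok for h in app.hosts)

def top_down_diagnose(app: AppSignals) -> list:
    # Application-centric view: start from what users experience.
    findings = []
    if app.error_rate > ERROR_BUDGET:
        findings.append(f"app: error rate {app.error_rate:.1%} exceeds budget")
    if app.p95_latency_ms > LATENCY_SLO_MS:
        findings.append(f"app: p95 latency {app.p95_latency_ms:.0f} ms exceeds SLO")
    if findings:
        # Only now drill down into the infrastructure under the application.
        for h in app.hosts:
            if not (h.cpu_ok and h.disk_ok):
                findings.append(f"infra: {h.name} unhealthy")
    return findings

app = AppSignals(
    error_rate=0.05,
    p95_latency_ms=320.0,
    hosts=[HostMetrics("web-1", True, True), HostMetrics("db-1", True, True)],
)
print(bottom_up_healthy(app))   # True: the infrastructure looks fine
print(top_down_diagnose(app))   # ['app: error rate 5.0% exceeds budget']
```

In this example every host passes its checks, so the bottom-up view reports nothing wrong, yet one in twenty requests is failing. Only the top-down view surfaces the problem users are actually experiencing.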

Streamlining and Simplifying Tools

In the second year of our transformation, we focused on creating a centralized app intelligence platform, reducing the number of tools we use and radically simplifying the application data process. We chose three major vendor tools that complement each other, stitching together our data collection and letting users see what’s happening across the stack, from traditional to cloud.

One key tool offers detailed, out-of-the-box, real-time observability of all applications across our ecosystem, including which apps are talking to each other. It automatically collects the data from the applications for users. For cloud-native applications, developers only need to add a few lines of code to the DevOps pipeline, and the tool automatically maps everything out. Because it spans production and non-production environments, developers can even see how well their applications are working while they’re still coding them.

By enabling access in the DevOps pipeline, we give developers continuous feedback on every aspect of their application, including its performance, how customers are using it, how fast its pages load, how its servers are performing, which applications are talking to it and more.

With this tool, they can trace any problem in the environment from top to bottom, for any application.

This detailed platform works alongside a second tool that provides a longer-term, executive-level, bird’s-eye view of the environment. This tool lets developers and our Site Reliability Engineering (SRE) teams customize their views to match exactly how their applications are architected. We also deliver real-time data to our business units, enabling them to leverage the information we regularly collect.

If a dashboard raises an alert about a problem, developers can use the more detailed tool to see exactly what the issue is. Used in combination, the two tools provide visibility and quick access to information, from an ecosystem view all the way down to the transaction level.

The third tool we offer is an open-source-friendly stack with a virtualization layer that reads data from anywhere and gives users sophisticated dashboard capabilities. Developers access this tool from Dell’s internal cloud portal rather than the DevOps pipeline, since it requires users to design how the data is presented.

As we transitioned to our streamlined portfolio in the summer of 2021, we re-instrumented all of Dell’s critical systems in 13 weeks, with no production impact. The transition, which would normally have taken a year and a half, was completed just in time for Black Friday.

In addition to the efficiencies gained through automation and self-service access to these platforms, developers can also instrument their applications themselves, giving them the freedom to define and implement what works best for each application.

Overall, we have given developers a toolbox they can use to get the best results in their specific roles in the DevOps process without having to get permission or wait for IT. We also continue to improve our offerings based on ongoing feedback from developers. Or, as we always say: Build with developers for developers.

Keep up with our Dell Digital strategies and more at Dell Technologies: Our Digital Transformation.

Source: dell.com