The new NVIDIA Clara AI Toolkit enables developers to build and deploy medical imaging applications to create intelligent instruments and automated healthcare workflows.

In today’s hospitals, medical imaging technicians are racing to keep pace with workloads stemming from the growing use of CT scans, MRI scans and other imaging in diagnostic processes. In a large hospital system, a relatively small number of technicians might be hit with hundreds or even thousands of scans in a single day. To keep up with the volume, these overworked technicians need tools that assist with analyzing complex images, identifying hard-to-detect abnormalities and ferreting out indicators of disease.

Increasingly, medical institutions are looking to artificial intelligence to address these needs. With deep-learning technologies, AI systems can now be trained to serve as digital assistants that take on some of the heavy lifting that comes with medical imaging workflows. This isn’t about using AI to replace trained professionals. It’s about using AI to streamline workflows, increase efficiency and help professionals identify the cases that require their immediate attention. To get there, hospital IT teams need a strategy for making their infrastructure AI-ready. NVIDIA and the American College of Radiology have partnered to enable thousands of radiologists to create and use AI in their own facilities, with their own data, across a network of thousands of hospitals.

One AI-driven toolset built for this purpose is NVIDIA Clara AI, an open, scalable computing platform that enables development of medical imaging applications for hybrid (embedded, on-premises or cloud) computing environments. With the capabilities of NVIDIA Clara AI, hospitals can create intelligent instruments and automated healthcare workflows.

The Clara AI Toolkit

To help organizations put Clara AI to work, NVIDIA offers the Clara Deploy SDK. This Helm-packaged software development kit comprises a collection of NVIDIA GPU Cloud (NGC) containers that work together to provide an end-to-end medical image processing workflow in Kubernetes. NGC container images are optimized for NGC-Ready, GPU-accelerated systems, such as Dell EMC PowerEdge C4140 and PowerEdge R740xd servers.

The Clara AI containers include GPU-accelerated libraries for computing, graphics and AI; example applications for image processing and rendering; and computational workflows for CT, MRI and ultrasound data. These features leverage Docker and Kubernetes to orchestrate medical image workflows and connect to PACS (picture archiving and communication systems) or scale medical instrument applications.

Fig 1: Clara AI Toolkit architecture

The Clara AI Toolkit lowers the barriers to adopting AI in medical-imaging workflows. The Clara Deploy SDK includes:

◈ A DICOM adapter that serves as the data ingestion interface for communicating with a hospital PACS

◈ Core services for orchestrating and managing resources for workflow deployment and development

◈ Reference AI applications that can be used as-is with user-defined data or modified with user-defined AI algorithms

◈ Visualization capabilities to monitor progress and view results

Server Platforms for the Toolkit

For organizations looking to capitalize on NVIDIA Clara AI, Dell EMC provides two robust, GPU-accelerated server platforms that support the Clara AI Toolkit.

The PowerEdge R740xd server delivers a balance of storage scalability and performance. With support for NVMe drives and NVIDIA GPUs, this 2U two-socket platform is ready for the demands of Clara AI and medical imaging workloads. The PowerEdge C4140 server, in turn, is an accelerator-optimized 1U rack server designed for the most demanding workloads. With support for four GPUs, this ultra-dense two-socket server is built for the challenges of cognitive workloads, including AI, machine learning and deep learning.

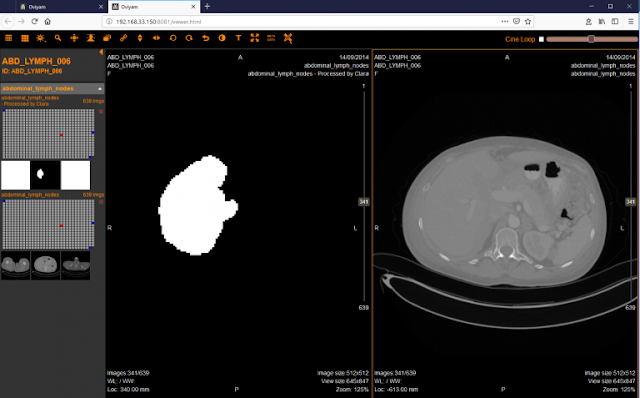

In the HPC and AI Innovation Lab at Dell EMC, we used the Clara AI Toolkit with the CT Organ Segmentation and CT Liver Segmentation reference applications on our GPU-accelerated servers running Red Hat Enterprise Linux. For these tests, we collected abdominal CT scan data, each scan being a series of 2D medical images, from the NIH Cancer Image Archive. We used the tools in the Clara AI Toolkit to execute a workflow that first converts the DICOM series for ingestion and then identifies individual organs in the CT scan (organ segmentation).
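A CT series arrives as many 2D slices, and segmentation typically operates on those slices assembled into one 3D volume. The following is a minimal sketch of that stacking step in plain Python. It is not the Clara AI DICOM adapter: the `Slice` class, its `z_position` field and the `build_volume` function are hypothetical names introduced here for illustration, and real DICOM parsing is left out.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Slice:
    """One 2D image from a CT series (hypothetical stand-in for a DICOM instance)."""
    z_position: float          # slice location along the scan axis
    pixels: List[List[int]]    # rows of intensity values


def build_volume(slices: List[Slice]) -> List[List[List[int]]]:
    """Order slices by position and stack them into a 3D volume indexed [z][y][x]."""
    if not slices:
        raise ValueError("series is empty")
    ordered = sorted(slices, key=lambda s: s.z_position)
    rows, cols = len(ordered[0].pixels), len(ordered[0].pixels[0])
    for s in ordered:
        # Every slice must have the same number of rows and columns.
        if len(s.pixels) != rows or any(len(row) != cols for row in s.pixels):
            raise ValueError("all slices must share the same dimensions")
    return [s.pixels for s in ordered]


# Slices often arrive out of order; sorting restores the anatomical sequence.
series = [
    Slice(z_position=2.5, pixels=[[5, 6], [7, 8]]),
    Slice(z_position=0.0, pixels=[[1, 2], [3, 4]]),
]
volume = build_volume(series)
print(len(volume), len(volume[0]), len(volume[0][0]))  # depth, rows, columns
```

Sorting by position rather than by arrival order matters because files in a series are not guaranteed to be stored or transmitted in scan order.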

Next, the workflow can use those segmented organs as input to identify any abnormalities. Once the analysis is complete, the system creates a MetaIO-annotated 3D volume rendering that can be viewed in the Clara Render Server, along with DICOM files that can be compared side by side in medical image viewers such as ORTHANC or Oviyam2.

Fig 2: Oviyam2 viewer showing a side-by-side view of the Clara AI-processed scan and the original CT scan
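The hand-off between the two stages, where the organ segmentation output becomes the input to the next analysis, can be pictured as applying a label map to the original volume. The sketch below is an assumption-laden illustration in plain Python, not how Clara AI passes data between containers: the `isolate_organ` function, the `LIVER` label id and the toy data are all hypothetical.

```python
from typing import List

Volume = List[List[List[int]]]  # indexed as [z][y][x]

LIVER = 1  # hypothetical label id assigned by the organ-segmentation stage


def isolate_organ(volume: Volume, labels: Volume, organ: int, fill: int = 0) -> Volume:
    """Keep voxels whose label equals `organ`; replace all others with `fill`."""
    return [
        [
            [v if lab == organ else fill for v, lab in zip(v_row, l_row)]
            for v_row, l_row in zip(v_slice, l_slice)
        ]
        for v_slice, l_slice in zip(volume, labels)
    ]


# Toy 1x2x3 volume and a matching label map (0 = background, 1 = liver).
ct = [[[10, 20, 30], [40, 50, 60]]]
organ_labels = [[[0, 1, 1], [0, 0, 1]]]

liver_only = isolate_organ(ct, organ_labels, LIVER)
print(liver_only)
```

Restricting the second stage to one organ's voxels narrows the region it has to examine, which is the reason for running organ segmentation first.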

Clara AI on the Job

While Clara AI is a relatively new offering, the platform is already in use in some major medical institutions, including Ohio State University, the National Institutes of Health and the University of California, San Francisco, according to NVIDIA.

The National Institutes of Health Clinical Center and NVIDIA scientists used Clara AI to develop a domain generalization method for the segmentation of the prostate from surrounding tissue on MRI. An NVIDIA blog notes that the localized model “achieved performance similar to that of a radiologist and outperformed other state-of-the-art algorithms that were trained and evaluated on data from the same domain.”

As these early adopters are showing, NVIDIA Clara AI is a platform that can provide value to organizations looking to capitalize on AI to enable large-scale deep learning for medical imaging.

Fig 3: Ailments on segmented liver identified by Clara AI Toolkit